Did you come to my blog looking for blog posts? Here they are, I guess. This is where I post traditional, long-form text that isn't primarily a link to someplace else, doesn't revolve around audiovisual media, and isn't published on any particular cadence. Just words about ideas and experiences.

TLDR is the best test runner for Claude Code

A couple years ago, Aaron and I had an idea for a satirical test runner that enforced fast feedback by giving up on running your tests after 1.8 seconds. It's called TLDR.

I kept pulling on the thread until TLDR could stand as a viable non-satirical test runner and a legitimate Minitest alternative. Its 1.0 release sported a robust CLI, configurable (and disable-able) timeouts, and a compatibility mode that makes TLDR a drop-in replacement for Minitest in most projects.

Anyway, as I got started working with Claude Code and learned about how hooks work, I realized that a test runner with a built-in concept of a timeout was suddenly a very appealing proposition. To make TLDR a great companion to agentic workflows, I put some work into a new release this weekend that allows you to do this:

```shell
tldr --timeout 0.1 --exit-0-on-timeout --exit-2-on-failure
```

The above command does several interesting things:

- Runs as many tests as it can in 100ms, in random order and in parallel

- If some tests don't run inside 100ms, TLDR will exit cleanly (normally a timeout fails with exit code 3)

- If a test fails, the command fails with status code 2 (normally, failures exit with code 1)

These three flags add up to a really interesting combination when you configure them as a Claude Code hook:

- A short timeout means you can add TLDR to run as an after-write hook for Claude Code without slowing you or Claude down very much

- By exiting with code 0 on a timeout, Claude Code will happily proceed so long as no tests fail. Because Claude Code tends to edit a lot of files relatively quickly, the hook will trigger many randomized test runs as Claude works—uncovering any broken tests reasonably quickly

- By exiting with code 2 on test failures, the hook will (according to the docs) block Claude from proceeding until the tests are fixed

Here's an example Claude Code configuration you can drop into any project that uses TLDR. My .claude/settings.json file on todo_or_die looks like this:

```json
{
  "hooks": {
    "PostToolUse": [
      {
        "matcher": "Edit|MultiEdit|Write",
        "hooks": [
          {
            "type": "command",
            "command": "bundle exec tldr --timeout 0.1 --exit-0-on-timeout --exit-2-on-failure"
          }
        ]
      }
    ]
  }
}
```

If you maintain a linter or a test runner, you might want to consider exposing configuration for timeouts and exit codes in a similar way. I suspect demand for hook-aware CLI tools will become commonplace soon.
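If a tool you depend on doesn't expose flags like these yet, a small wrapper can approximate the same contract. Here's a minimal sketch of a hypothetical `hook_wrap` helper (not part of TLDR) that assumes GNU coreutils' `timeout` is on your PATH; it exits with status 124 when the time limit is hit (on macOS, Homebrew's coreutils package installs it as `gtimeout`). Unlike TLDR, it can't decide which tests fit in the time available; it simply kills the command when time runs out.

```shell
#!/usr/bin/env bash
# hook_wrap: hypothetical helper (not part of TLDR) that maps any command's
# outcome onto the exit codes a Claude Code hook cares about.
# Assumes GNU coreutils `timeout`, which exits with 124 on a timeout.

hook_wrap() {
  local seconds="$1"
  shift

  local status=0
  timeout "$seconds" "$@" || status=$?

  if [ "$status" -eq 0 ]; then
    return 0  # command finished and passed
  elif [ "$status" -eq 124 ]; then
    return 0  # ran out of time: behave like --exit-0-on-timeout
  else
    return 2  # any other failure: behave like --exit-2-on-failure
  fi
}
```

For instance, a hook script could source this file and run `hook_wrap 0.5 bundle exec rubocop`, so a slow lint run never holds Claude up while genuine failures still exit with code 2.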

Notify your iPhone or Watch when Claude Code finishes

I taught Claude Code a new trick this weekend and thought others might appreciate it.

I have a very bad habit of staring at my computer screen while waiting for it to do stuff. My go-to solution for this is to make the computer do stuff faster, but there's no getting around it: Claude Code insists on taking an excruciating four or five minutes to accomplish a full day's work. Out of the box, claude rings the terminal bell when it stops while the terminal is out of focus, and that's good enough if you've got other stuff to do on your Mac. But Claude is so capable running autonomously (that is, if you're brave enough to --dangerously-skip-permissions) that I wanted to be able to walk away from my Mac while it cooked.

This led me to cobble together this solution that will ping my iPhone and Apple Watch with a push notification whenever Claude needs my attention or runs out of work to do. Be warned: it requires paying for the Pro tier of an app called Pushcut, but anyone willing to pay $200/month for Claude Code can hopefully spare $2 more.

Here's how you can set this up for yourself:

- Install Pushcut to your iPhone and whatever other supported Apple devices you want to be notified on

- Create a new notification in the Notifications tab. I named mine "terminal". The title and text don't matter, because we'll be setting custom parameters each time when we POST to the HTTP webhook

- Copy your webhook secret from Pushcut's Account tab

- Set that webhook secret to an environment variable named PUSHCUT_WEBHOOK_SECRET in your ~/.profile or whatever

- Save the shell script below

- Use this settings.json to configure Claude Code hooks

Of course, now I have a handy notify_pushcut executable I can call from any tool to get my attention, not just Claude Code. The script is fairly clever—it won't notify you while your terminal is focused and the display is awake. You'll only get buzzed if the display is asleep or you're in some other app. And if it's ever too much and you want to disable the behavior, just set a NOTIFY_PUSHCUT_SILENT variable.

The script

I put this file in ~/bin/notify_pushcut and made it executable with chmod +x ~/bin/notify_pushcut:

```bash
#!/usr/bin/env bash

set -e

# Doesn't source ~/.profile so load env vars ourselves
# (adjust this path to wherever you keep PUSHCUT_WEBHOOK_SECRET)
if [ -f ~/icloud-drive/dotfiles/.env ]; then
  source ~/icloud-drive/dotfiles/.env
fi

if [ -n "$NOTIFY_PUSHCUT_SILENT" ]; then
  exit 0
fi

# Check if argument is provided
if [ $# -eq 0 ]; then
  echo "Usage: $0 TITLE [DESCRIPTION]" >&2
  exit 1
fi

# Check if PUSHCUT_WEBHOOK_SECRET is set
if [ -z "$PUSHCUT_WEBHOOK_SECRET" ]; then
  echo "Error: PUSHCUT_WEBHOOK_SECRET environment variable is not set" >&2
  exit 1
fi

# Function to check if Terminal is focused
is_terminal_focused() {
  local frontmost_app=$(osascript -e 'tell application "System Events" to get name of first application process whose frontmost is true' 2>/dev/null)

  # List of terminal applications to check
  local terminal_apps=("Terminal" "iTerm2" "iTerm" "Alacritty" "kitty" "Warp" "Hyper" "WezTerm")

  # Check if frontmost app is in the array
  for app in "${terminal_apps[@]}"; do
    if [[ "$frontmost_app" == "$app" ]]; then
      return 0
    fi
  done

  return 1
}

# Function to check if display is sleeping
is_display_sleeping() {
  # Check if system is preventing display sleep (which means display is likely on)
  local assertions=$(pmset -g assertions 2>/dev/null)

  # If we can't get assertions, assume display is awake
  if [ -z "$assertions" ]; then
    return 1
  fi

  # Check if UserIsActive is 0 (user not active) and no prevent sleep assertions
  if echo "$assertions" | grep -q "UserIsActive.*0" && \
     ! echo "$assertions" | grep -q "PreventUserIdleDisplaySleep.*1" && \
     ! echo "$assertions" | grep -q "Prevent sleep while display is on"; then
    return 0 # Display is likely sleeping
  fi

  return 1 # Display is awake
}

# Escape a value for use inside a JSON string: backslashes first,
# then double quotes, newlines, tabs, and carriage returns
json_escape() {
  local s="$1"
  s=${s//\\/\\\\}
  s=${s//\"/\\\"}
  s=${s//$'\n'/\\n}
  s=${s//$'\t'/\\t}
  s=${s//$'\r'/\\r}
  printf '%s' "$s"
}

# Set title and text
TITLE="$1"
TEXT="${2:-$1}" # If text is not provided, use title as text

# Only send notification if Terminal is NOT focused OR display is sleeping
if ! is_terminal_focused || is_display_sleeping; then
  # Send notification to Pushcut, escaping values so the JSON body stays valid
  curl -s -X POST "https://api.pushcut.io/$PUSHCUT_WEBHOOK_SECRET/notifications/terminal" \
    -H 'Content-Type: application/json' \
    -d "$(printf '{"title":"%s","text":"%s"}' "$(json_escape "$TITLE")" "$(json_escape "$TEXT")")"
  exit 0
fi
```

Claude hooks configuration

You can configure Claude hooks in ~/.claude/settings.json:

```json
{
  "hooks": {
    "Notification": [
      {
        "hooks": [
          {
            "type": "command",
            "command": "message=$(sed -n 's/.*\"message\"[[:space:]]*:[[:space:]]*\"\\([^\"]*\\)\".*/\\1/p'); $HOME/bin/notify_pushcut \"Claude Code\" \"${message:-Notification}\""
          }
        ]
      }
    ],
    "Stop": [
      {
        "hooks": [
          {
            "type": "command",
            "command": "$HOME/bin/notify_pushcut \"Claude Code Finished\" \"Claude has completed your task\""
          }
        ]
      }
    ]
  }
}
```
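Before relying on the Notification hook, it can help to check the message extraction against a sample payload. This is a minimal sketch that assumes the hook receives single-line JSON on stdin with a top-level "message" string (the field Claude Code's hook documentation describes for Notification events). The payload below is made up for illustration, and a message containing escaped quotes would get cut short by this regex.

```shell
#!/usr/bin/env bash
# Pull the "message" value out of a single-line JSON payload on stdin
# using only sed, so the hook doesn't depend on jq being installed.
extract_message() {
  sed -n 's/.*"message"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# A made-up payload shaped like a Notification hook event
sample='{"session_id":"abc123","hook_event_name":"Notification","message":"Claude needs your permission to use Bash"}'

message=$(printf '%s\n' "$sample" | extract_message)
echo "${message:-Notification}"
```

Running it prints `Claude needs your permission to use Bash`; a payload without a "message" field yields an empty string, which the `${message:-Notification}` fallback covers.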

Full-breadth Developers

The software industry is at an inflection point unlike anything in its brief history. Generative AI is all anyone can talk about. It has rendered entire product categories obsolete and upended the job market. With any economic change of this magnitude, there are bound to be winners and losers. So far, it sure looks like full-breadth developers—people with both technical and product capabilities—stand to gain as clear winners.

What makes me so sure? Because over the past few months, the engineers I know with a lick of product or business sense have been absolutely scorching through backlogs at a dizzying pace. It may not map to any particular splashy innovation or announcement, but everyone agrees generative coding tools crossed a significant capability threshold recently. It's what led me to write this. In just two days, I've completed two months' worth of work on Posse Party.

I did it by providing an exacting vision for the app, by maintaining stringent technical standards, and by letting Claude Code do the rest. If you're able to cram critical thinking, good taste, and strong technical chops into a single brain, these tools hold the potential to unlock incredible productivity. But I don't see how it could scale to multiple people. If you were to split me into two separate humans—Product Justin and Programmer Justin—and ask them to work the same backlog, it would have taken weeks instead of days. The communication cost would simply be too high.

We can't all be winners

When I step back and look around, however, most of the companies and workers I see are currently on track to wind up as losers when all is said and done.

In recent decades, businesses have not only failed to cultivate full-breadth developers, they've trained a generation into believing product and engineering roles should be strictly segregated. To suggest a single person might drive both product design and technical execution would sound absurd to many people. Even companies that realize interdisciplinary developers are the new key to success are finding that their outmoded job descriptions and salary bands fail to recruit and retain them.

There is an urgency to this moment. Up until a few months ago, the best developers played the violin. Today, they play the orchestra.

Google screwed up

I've been obsessed with this issue my entire career, so pardon me if I betray any feelings of schadenfreude as I recount the following story.

I managed to pass a phone screen with Google in 2007 before graduating college. This earned me an all-expenses-paid trip for an in-person interview at the vaunted Googleplex. I went on to experience complete ego collapse as I utterly flunked their interview process. Among many deeply embarrassing memories of the trip was a group session with a Big Deal Engineer who was introduced as the inventor of BigTable. (Jeff Dean, probably? Unsure.) At some point he said, "one of the great things about Google is that engineering is one career path and product is its own totally separate career path."

I had just paid a premium to study computer science at a liberal arts school and had the audacity to want to use those non-technical skills, so I bristled at this comment. And, being constitutionally unable to keep my mouth shut, I raised my hand to ask, "but what if I play a hybrid class? What if I think it's critical for everyone to engage with both technology and product?"

The dude looked me dead in the eyes and told me I wasn't cut out for Google.

The recruiter broke a long awkward silence by walking us to the cafeteria for lunch. She suggested I try the ice cream sandwiches. I had lost my appetite for some reason.

In the years since, an increasing number of companies around the world have adopted Silicon Valley's trademark dual-ladder career system. Tech people sit over here. Idea guys go over there.

What separates people

Back to winners and losers.

Some have discarded everything they know in favor of an "AI first" workflow. Others decry generative AI as a fleeting boondoggle like crypto. It's caused me to broach the topic with trepidation—as if I were asking someone their politics. I've spent the last few months noodling over why it's so hard to guess how a programmer will feel about AI, because people's reactions seem to cut across roles and skill levels. What factors predict whether someone is an overzealous AI booster or a radicalized AI skeptic?

Then I was reminded of that day at Google. And I realized that developers I know who've embraced AI tend to be more creative, more results-oriented, and have good product taste. Meanwhile, AI dissenters are more likely to code for the sake of coding, expect to be handed crystal-clear requirements, or otherwise want the job to conform to a routine 9-to-5 grind. The former group feels unchained by these tools, whereas the latter group just as often feels threatened by them.

When I take stock of who is thriving and who is struggling right now, a person's willingness to play both sides of the ball has been the best predictor for success.

| Role | Engineer | Product | Full-breadth |
|---|---|---|---|
| Junior | ❌ | ❌ | ✅ |
| Senior | ❌ | ❌ | ✅ |

Breaking down the patterns that keep repeating as I talk to people about AI:

- Junior engineers, as is often remarked, don't have a prayer of sufficiently evaluating the quality of an LLM's work. When the AI hallucinates or makes mistakes, novice programmers are more likely to learn the wrong thing than to spot the error. This would be less of a risk if they had the permission to decelerate to a snail's pace in order to learn everything as they go, but in this climate nobody has the patience. I've heard from a number of senior engineers that the overnight surge in junior developer productivity (as in "lines of code") has brought organization-wide productivity (as in "working software") to a halt, consumed with review and remediation of low-quality AI slop. This is but one factor contributing to the sense that lowering hiring standards was a mistake, so it's no wonder that juniors have been first on the chopping block

- Senior engineers who earnestly adopt AI tools have no problem learning how to coax LLMs into generating "good enough" code at a much faster pace than they could ever write themselves. So, if they're adopting AI, what's the problem? The issue is that the productivity boon is becoming so great that companies won't need as many senior engineers as they once did. Agents work relentlessly, and tooling is converging on a vision of senior engineers as cattle ranchers, steering entire herds of AI agents. How is a highly-compensated programmer supposed to compete with a stable of agents that can produce an order of magnitude more code at an acceptable level of quality for a fraction of the price?

- Junior product people are, in my experience, largely unable to translate amorphous real-world problems into well-considered software solutions. And communicating those solutions with the necessary precision to bring them to life? Unlikely. Still, many are having success with app creation platforms that provide the necessary primitives and guardrails. But those tools always have a low capability ceiling (just as with any low-code/no-code platform). Regardless, is this even a role worth hiring? If I wanted mediocre product direction, I'd ask ChatGPT

- Senior product people are among the most excited I've seen about coding agents. And why shouldn't they be? They're finally free of the tyranny of nerds telling them everything is impossible. And they're building stuff! Reddit is lousy with posts showing off half-baked apps built in half a day. Unfortunately, without routinely inspecting the underlying code, anything larger than a toy app is doomed to collapse under its own weight. The fact LLMs are so agreeable and unwilling to push back often collides with the blue-sky optimism of product people, which can result in each party leading the other in circles of irrational exuberance. Things may change in the future, but for now there's no way to build great software without also understanding how it works

Hybrid-class operators, meanwhile, seem to be having a great time regardless of their skill level or years of experience. And that's because what differentiates full-breadth developers is less about capability than about mindset. They're results-oriented: they may enjoy coding, but they like getting shit done even more. They're methodical: when they encounter a problem, they experiment and iterate until they arrive at a solution. The best among them are visionaries: they don't wait to be told what to work on, they identify opportunities others don't see, and they dream up software no one else has imagined.

Many are worried the market's rejection of junior developers portends a future in which today's senior engineers age out and there's no one left to replace them. I am less concerned, because less experienced full-breadth developers are navigating this environment extraordinarily well. Not only because they excitedly embraced the latest AI tools, but also because they exhibit the discipline to move slowly, understand, and critically assess the code these tools generate. The truth is computer science majors, apprenticeship programs, and code schools—today, all dead or dying—were never very effective at turning out competent software engineers. Claude Pro may not only be the best educational resource under $20, it may be the best way to learn how to code that's ever existed.

There is still hope

Maybe you've read this far and the message hasn't resonated. Maybe it's triggered fears or worries you've had about AI. Maybe I've put you on the defensive and you think I'm full of shit right now. In any case, whether your organization isn't designed for this new era or you don't yet identify as a full-breadth developer, this section is for you.

Leaders: go hire a good agency

While my goal here is to coin a silly phrase to help us better communicate about the transformation happening around us, we've actually had a word for full-breadth developers all along: consultant.

And not because consultants are geniuses or something. It's because, as I learned when I interviewed at Google, if a full-breadth developer wants to do their best work, they need to exist outside the organization and work on contract. So it's no surprise that some of my favorite full-breadth consultants are among AI's most ambitious adopters. Not because AI is what's trending, but because our disposition is perfectly suited to get the most out of these new tools. We're witnessing firsthand their potential to improve how the world builds software.

When founding our consultancy Test Double in 2011, Todd Kaufman and I told anyone who would listen that our differentiator—our whole thing—was that we were business consultants who could write software. Technology is just a means to an end, and that end (at least if you expect to be paid) is to generate business value. Even as we started winning contracts with VC-backed companies who seemed to have an infinite money spigot, we would never break ground until we understood how our work was going to make or save our clients money. And whenever the numbers didn't add up, we'd push back until the return on investment for hiring Test Double was clear.

So if you're a leader at a company that has been caught unprepared for this new era of software development, my best advice is to hire an agency of full-breadth developers to work alongside your engineers. Use those experiences to encourage your best people to start thinking like they do. Observe them at work and prepare to blow up your job descriptions, interview processes, and career paths. If you want your business to thrive in what is quickly becoming a far more competitive landscape, you may be best off hitting reset on your human organization and starting over. Get smaller, stay flatter, and only add structure after the dust settles and repeatable patterns emerge.

Developers: congrats on your new job

A lot of developers are feeling scared and hopeless about the changes being wrought by all this. Yes, AI is being used as an excuse by executives to lay people off and pad their margins. Yes, how foundation models were trained was unethical and probably also illegal. Yes, hustle bros are running around making bullshit claims. Yes, almost every party involved has a reason to make exaggerated claims about AI.

All of that can be true, and it still doesn't matter. Your job as you knew it is gone.

If you want to keep getting paid, you may have been told to "move up the value chain." If that sounds ambiguous and unclear, I'll put it more plainly: figure out how your employer makes money and position your ass directly in between the corporate bank account and your customers' credit card information. The longer the sentence needed to explain how your job makes money for your employer, the further down the value chain you are and the more worried you should be. There's no sugar-coating it: you're probably going to have to push yourself way outside your comfort zone.

Get serious about learning and using these new tools. You will, like me, recoil at first. You will find, if you haven't already, that all these fancy AI tools are really bad at replacing you. That they fuck up constantly. Your new job starts by figuring out how to harness their capabilities anyway. You will gradually learn how to extract something that approximates how you would have done it yourself. Once you get over that hump, the job becomes figuring out how to scale it up. Three weeks ago I was a Cursor skeptic. Today, I'm utterly exhausted working with Claude Code, because I can't write new requirements fast enough to keep up with parallel workers across multiple worktrees.

As for making yourself more valuable to your employer, I'm not telling you to demand a new job overnight. But if you look to your job description as a shield to protect you from work you don't want to do… stop. Make it the new minimum baseline of expectations you place on yourself. Go out of your way to surprise and delight others by taking on as much as you and your AI supercomputer can handle. Do so in the direction of however the business makes its money. Sit down and try to calculate the return on investment of your individual efforts, and don't slow down until that number far exceeds the fully-loaded cost you represent to your employer.

Start living these values in how you show up at work. Nobody is going to appreciate it if you rudely push back on every feature request with, "oh yeah? How's it going to make us money?" But your manager will appreciate your asking how you can make a bigger impact. And they probably wouldn't be mad if you were to document and celebrate the ROI wins you notch along the way. Listen to what the company's leadership identifies as the most pressing challenges facing the business and don't be afraid to volunteer to be part of the solution.

All of this would have been good career advice ten years ago. It's not rocket science, it's just deeply uncomfortable for a lot of people.

Good game, programmers

Part of me is already mourning the end of the previous era. Some topics I spent years blogging, speaking, and building tools around are no longer relevant. Others that I've been harping on for years—obsessively-structured code organization and ruthlessly-consistent design patterns—are suddenly more valuable than ever. I'm still sorting out what's worth holding onto and what I should put back on the shelf.

As a person, I really hate change. I wish things could just settle down and stand still for a while. Alas.

If this post elicited strong feelings, please e-mail me and I will respond. If you find my perspective on this stuff useful, you might enjoy my podcast, Breaking Change. 💜

A handy script for launching editors

Today, I want to share with you a handy edit script I use to launch my editor countless times each day. It can:

- edit posse_party – will launch my editor with project ~/code/searls/posse_party

- edit -e vim rails/rails – will change to the ~/code/rails/rails directory and run vim

- edit testdouble/mo[TAB] – will auto-complete to edit testdouble/mocktail

- edit emoruby – will, if not found locally, clone and open searls/emoruby

This script relies on following the convention of organizing working copies of projects in a GitHub <org>/<repo> format (under ~/code by default). I can override this and a few other things with environment variables:

- CODE_DIR - defaults to "$HOME/code"

- DEFAULT_ORG - defaults to "searls"

- DEFAULT_EDITOR - defaults to cursor (for the moment)
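To make the directory convention concrete, here's a minimal sketch of how a launcher like this could resolve its argument. It's a hypothetical reconstruction, not the actual edit script: the function names `project_slug` and `open_project` are made up, it assumes repositories clone from `https://github.com/<org>/<repo>.git`, and it assumes your editor accepts a directory argument (it passes `.`).

```shell
#!/usr/bin/env bash
# Hypothetical sketch of an edit-style launcher; NOT the author's script.
# Working copies are assumed to live at $CODE_DIR/<org>/<repo>.

CODE_DIR="${CODE_DIR:-$HOME/code}"
DEFAULT_ORG="${DEFAULT_ORG:-searls}"
DEFAULT_EDITOR="${DEFAULT_EDITOR:-cursor}"

# "repo" becomes "$DEFAULT_ORG/repo"; "org/repo" passes through unchanged.
project_slug() {
  if [[ "$1" == */* ]]; then
    echo "$1"
  else
    echo "$DEFAULT_ORG/$1"
  fi
}

# Usage: open_project [-e EDITOR] SPEC
open_project() {
  local editor="$DEFAULT_EDITOR"
  if [[ "$1" == "-e" ]]; then
    editor="$2"
    shift 2
  fi

  local slug dir
  slug="$(project_slug "$1")"
  dir="$CODE_DIR/$slug"

  # Clone from GitHub if there's no local working copy yet (assumed URL scheme)
  if [[ ! -d "$dir" ]]; then
    git clone "https://github.com/$slug.git" "$dir" || return 1
  fi

  # Launch the editor from inside the project directory
  cd "$dir" && "$editor" .
}
```

With the defaults, `open_project -e vim rails/rails` would change into ~/code/rails/rails and run `vim .`, while `open_project posse_party` would open ~/code/searls/posse_party in cursor. Because it's a function, sourcing it into your shell also leaves you in the project directory afterward.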

I've been organizing my code like this for 15 years, but over the last year I've found myself bouncing between various AI tools so often that I finally bit the bullet to write a custom meta-launcher.

If you want something like this, you can do it yourself:

- Add the edit executable to a directory on your PATH

- Make sure edit is executable with chmod +x edit

- Download the edit.bash bash completions and put them somewhere

- In .profile or .bashrc or whatever, run source path/to/edit.bash

The rest of this post is basically a longer-form documentation of the script that you're welcome to peruse in lieu of a proper README.

How to subscribe to email newsletters via RSS

I have exactly one inbox for reading blogs and following news, and it's expressly not my e-mail client—it's my feed reader. (Looking for a recommendation? Here are some instructions on setting up NetNewsWire; for once, the best app is also the free and open source one.)

Anyway, with the rise of Substack and the trend for writers to eschew traditional web publishing in favor of e-mail newsletters, more and more publishers want to tangle their content up in your e-mail. Newsletters work because people will see them (so long as they ever check their e-mail…), whereas routinely visiting a web site requires a level of discipline that social media trained out of most people a decade ago.

But, if you're like me, and you want to reserve your e-mail for bidirectional communication with humans and prefer to read news at the time and pace of your choosing, did you know you can convert just about any e-mail newsletter into an RSS feed and follow that instead?

Many of us nerds have known about this for a while, and while various services have tried to monetize the same feature, it's hard to beat Kill the Newsletter: it doesn't require an account to set up and it's totally free.

How to convert an e-mail newsletter into a feed

Suppose you're signed up for the present author's free monthly newsletter, Searls of Wisdom, and you want to start reading it in a feed reader. (Also suppose that I don't already publish an RSS feed alternative, which I do.)

Here's what you can do:

- Visit Kill the Newsletter and enter the human-readable title you want for the newsletter. In this case you might type Searls of Wisdom and click Create Feed.

- This yields two generated strings: an e-mail address and a feed URL

- Copy the e-mail address (e.g. 1234@kill-the-newsletter.com) and subscribe to the newsletter via the publisher's web site, just as you would if you were giving them your real e-mail address

- Copy the URL (e.g. https://kill-the-newsletter.com/feeds/1234.xml) and subscribe to it in your feed reader, as if it were any normal RSS/Atom feed

- Confirm it's working by checking the feed in your RSS reader. Because this approach simply recasts e-mails into RSS entries, the first thing you see will probably be a welcome message or a confirmation link you'll need to click to verify your subscription

- Once it's working, if you'd previously subscribed to the newsletter with your personal e-mail address, unsubscribe from it and check it in your feed reader instead
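If you'd rather confirm from a terminal that a converted feed is receiving issues, you can count its entries. This is a minimal sketch, assuming curl is installed; the feed URL is the placeholder from the steps above (swap in your own), and the tag matching is a rough heuristic rather than a real XML parser: Atom feeds wrap each post in an `<entry>` element and RSS feeds use `<item>`.

```shell
#!/usr/bin/env bash
# Count <entry> (Atom) or <item> (RSS) elements in a feed read from stdin.
# A rough regex heuristic, not a real XML parser.
count_feed_entries() {
  grep -oE '<(entry|item)[[:space:]>]' | wc -l | tr -d '[:space:]'
}

# Usage (placeholder URL from the example above; substitute your own):
#   curl -fsS https://kill-the-newsletter.com/feeds/1234.xml | count_feed_entries
```

A result of 0 right after subscribing usually just means the welcome or confirmation e-mail hasn't arrived yet.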

That's it! Subscribing to a newsletter with a bogus-looking address so that a bogus-looking feed starts spitting out articles is a little counter-intuitive, I admit, but I have faith in you.

(And remember, you don't need to do this for my newsletter, which already offers a feed you can just follow without the extra steps.)

Why is Kill the Newsletter free? How can any of this be sustainable? Nobody knows! Isn't the Internet cool?

Visiting Japan is easy because living in Japan is hard

Hat tip to Kyle Daigle for sending me this Instagram reel:

I don't scroll reels, so I'd hardly call myself a well-heeled critic of the form, but I will say I've never heard truer words spoken in a vertical short-form video.

It might be helpful to think of the harmony we witness in Japan as a collective bank account with an exceptionally high balance. Everyone deposits into that account all the ingredients necessary for maintaining a harmonious society. Withdrawals are rare, because to take anything out of that bank account effectively amounts to unilaterally deciding to spend everyone's money. As a result, acts of selfishness—especially those that disrupt that harmony—will frequently elicit shame and admonition from others.

Take trash, for example. Suppose the AA batteries in your Walkman die. There are few public trash cans, so:

- If you're visiting Japan – at the next train platform, you'll see a garbage bin labeled "Others" and toss those batteries in there without a second thought

- If you're living in Japan – you'll carry the batteries around all day, bring them home, sort and clean them, pay for a small trash bag for hazardous materials (taxed at 20x the rate of a typical bag), and then wait until the next hazardous waste collection day (which could be up to 3 months in some areas)

So which of these scenarios is more fun? Visiting, of course!

But what you don't see as a visitor is that nearly every public trash can is provided as a service to customers, and it's someone's literal job to go through each trash bag. So while the visitor experience above is relatively seamless, some little old lady might be tasked with sorting and disposing of the train station's trash every night. And when she finds your batteries, she won't just have to separate them from the rest of the trash, she may well have to fill out a form requisitioning a hazardous waste bag, or call the municipal garbage collection agency to schedule a pick-up. This is all in addition to the little old lady's other responsibilities—it doesn't take many instances of people failing to follow societal expectations to seriously stress the entire system.

This is why Japanese people are rightly concerned about over-tourism: foreigners rarely follow any of the norms that keep their society humming. Over the past 15 years, many tourist hotspots have reached the breaking point. Osaka and Kyoto just aren't the cities they once were. There just aren't the public funds and staffing available to keep up with the amount of daily disorder caused by tourists failing to abide by Japan's mostly-unspoken societal customs.

It's also why Japanese residents feel hopeless about the situation. The idea of foreign tourists learning and adhering to proper etiquette is facially absurd. Japan's economy is increasingly dependent on tourism dollars, so closing off the borders isn't feasible. The dominant political party lacks the creativity to imagine more aggressive policies than a hilariously paltry $3-a-night hotel tax. Couple this with the ongoing depopulation crisis, and people quite reasonably worry that all the things that make Japan such a lovely place to visit are coming apart at the seams.

Anyway, for anyone who wonders why I tend to avoid the areas of Japan popular with foreigners, there you go.

The T-Shirts I Buy

I get asked from time to time about the t-shirts I wear every day, so I figured it might save time to document it here.

The correct answer to the question is, "whatever the cheapest blank tri-blend crew-neck is." The blend in question refers to a mix of fabrics: cotton, polyester, and rayon. The brand you buy doesn't really matter, since they're all going to be pretty much the same: cheap, lightweight, quick-drying, don't retain odors, and feel surprisingly good on the skin for the price. This type of shirt was popularized by the American Apparel Track Shirt, but that company went to shit at some point and I haven't bothered with any of its post-post-bankruptcy wares.

I maintain a roster of 7 active shirts that I rotate daily and wash weekly. Every 6 months I replace them. I buy 14 at a time so I only need to order annually. I always get them from Blank Apparel, because they don't print bullshit logos on anything and charge near-wholesale prices. I can usually load up on a year's worth of shirts for just over $100.

I can vouch for these two specific models:

The Next Level shirts feel slightly nicer on day one, but they also wear faster and will feel a little scratchy after three months of daily usage. The Bella+Canvas ones seem to hold up a bit better. But, honestly, who cares. The whole point is clothes don't matter and people will get used to anything after a couple days. They're cheap and cover my nipples, so mission accomplished.

These 4 Code Snippets won WWDC

WWDC 2025 delivered on the one thing I was hoping to see from WWDC 2024: free, unlimited invocation of Apple's on-device language models by developers. It may have arrived later than I would have liked, but all it took was the first few code examples from the Platforms State of the Union presentation to convince me that the wait was worth it.

Assuming you're too busy to be bothered to watch the keynote, much less the SOTU undercard presentation, here are the four bits of Swift that have me excited to break ground on a new LLM-powered iOS app:

- The @Generable and @Guide annotations

- The #Playground macro

- LanguageModelSession's async streamResponse function

- The Tool interface

The @Generable and @Guide annotations

Here's the first snippet:

```swift
@Generable
struct Landmark {
    var name: String
    var continent: Continent
    var journalingIdea: String
}

@Generable
enum Continent {
    case africa, asia, europe, northAmerica, oceania, southAmerica
}

let session = LanguageModelSession()
let response = try await session.respond(
    to: "Generate a landmark for a tourist and a journaling suggestion",
    generating: Landmark.self
)
```

You don't have to know Swift to see why this is cool: just tack @Generable onto any struct and you can tell the LanguageModelSession to return that type. No fussing with marshalling and unmarshalling JSON. No custom error handling for when the LLM populates a given value with an unexpected type. You simply declare the type, and it becomes the framework's job to figure out how to color inside the lines.

And if you want to make sure the LLM gets the spirit of a value as well as its basic type, you can prompt it on an attribute-by-attribute basis with @Guide, as shown here:

```swift
@Generable
struct Itinerary: Equatable {
    let title: String
    let destinationName: String
    let description: String

    @Guide(description: "An explanation of how the itinerary meets user's special requests.")
    let rationale: String

    @Guide(description: "A list of day-by-day plans.")
    @Guide(.count(3))
    let days: [DayPlan]
}
```

Thanks to @Guide, you can name your attributes whatever you want and separately document for the LLM what those names mean for the purpose of generating values.

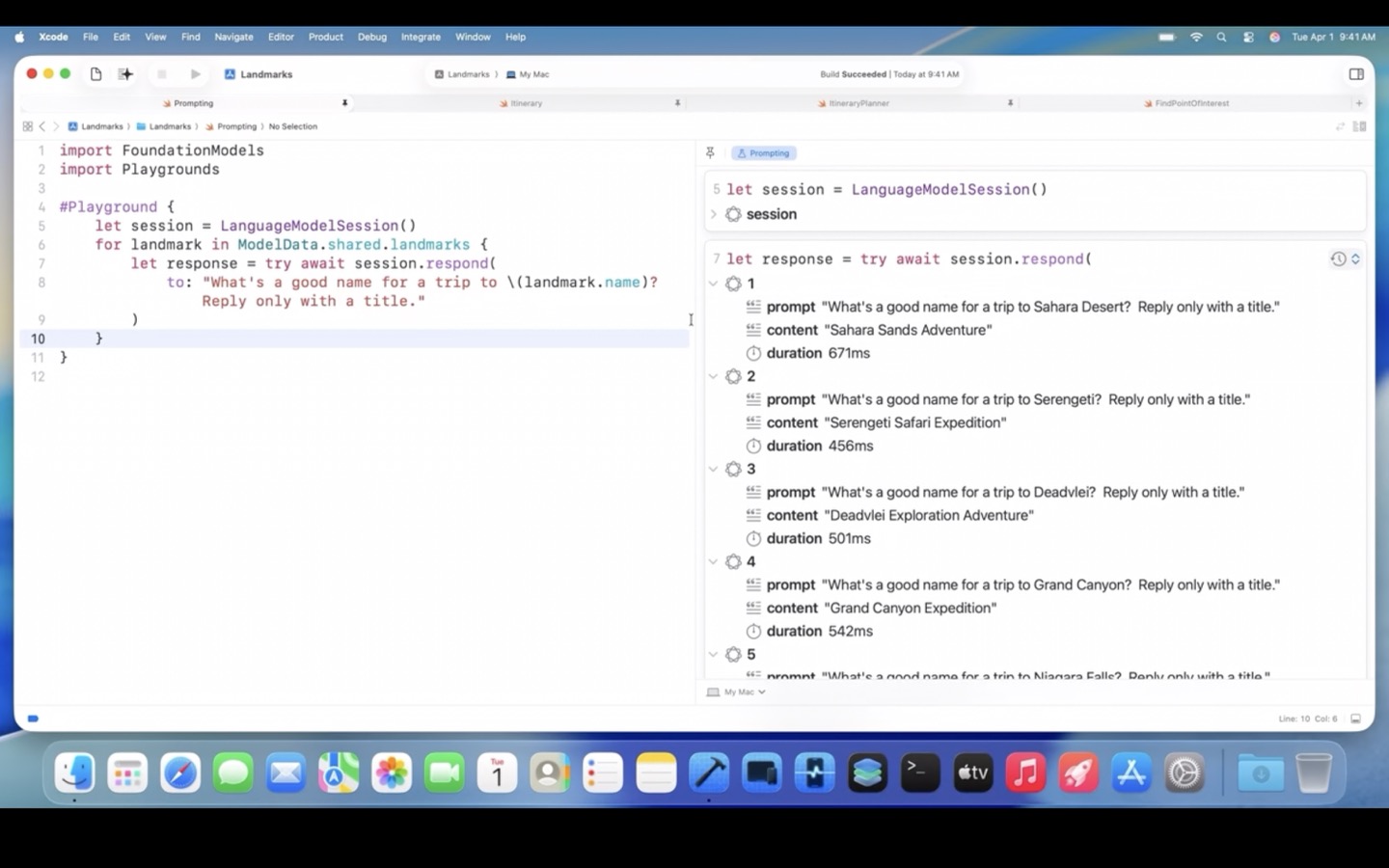

The #Playground macro

My ears perked up when the presenter Richard Wei said, "then I'm going to use the new playground macro in Xcode to preview my non-UI code." Because when I hear, "preview my non-UI code," my brain finishes the sentence with, "to get faster feedback." Seeing magic happen in your app's UI is great, but if going end-to-end to the UI is your only mechanism for getting any feedback from the system at all, forward progress will be unacceptably slow.

Automated tests are one way of getting faster feedback. Working in a REPL is another. Defining a #Playground inside a code listing is now a third tool in that toolbox.

Here's what it might look like:

```swift
#Playground {
    let session = LanguageModelSession()
    for landmark in ModelData.shared.landmarks {
        let response = try await session.respond(
            to: "What's a good name for a trip to \(landmark.name)? Reply only with a title."
        )
    }
}
```

Which brings up a split view with an interactive set of LLM results, one for each landmark in the set of sample data.

Watch the presentation and skip ahead to 23:27 to see it in action.

Streaming user interfaces

Users were reasonably mesmerized when they first saw ChatGPT stream its textual responses as it plopped one word in front of another in real-time. In a world of loading screens and all-at-once responses, it was one of the reasons that the current crop of AI assistants immediately felt so life-like. ("The computer is typing—just like me!")

So, naturally, in addition to being able to await a big-bang respond request, Apple's new LanguageModelSession also provides an async streamResponse function, which looks like this:

```swift
let stream = session.streamResponse(generating: Itinerary.self) {
    "Generate a \(dayCount)-day itinerary to \(landmark.name). Give it a fun title!"
}

for try await partialItinerary in stream {
    itinerary = partialItinerary
}
```

The fascinating bit—and what sets this apart from mere text streaming—is that by simply re-assigning the itinerary to the streamed-in partialItinerary, the user interface is able to recompose complex views incrementally. So now, instead of some plain boring text streaming into a chat window, multiple complex UI elements can cohere before your eyes. Which UI elements? Whichever ones you've designed to be driven by the @Generable structs you've demanded the LLM provide. This is where it all comes together:

Scrub to 25:29 in the video and watch this in action (and then re-watch it in slow motion). As a web developer, I can only imagine how many dozens of hours of painstaking debugging it would take me to approximate this effect in JavaScript—only for it to still be hopelessly broken on slow devices and unreliable networks. If this API actually works as well as the demo suggests, then Apple's Foundation Models framework is seriously looking to cash some of the checks Apple wrote over a decade ago when it introduced Swift and more recently, SwiftUI.

The Tool interface

When the rumors were finally coalescing around the notion that Apple was going to allow developers to invoke its models on device, I was excited but skeptical. On device meant it would be free and work offline—both of which, great—but how would I handle cases where I needed to search the web or hit an API?

It didn't even occur to me that Apple would be ready to introduce something akin to Model Context Protocol (which Anthropic didn't even coin until last November!), much less the paradigm of the LLM as an agent calling upon a discrete set of tools able to do more than merely generate text and images.

And yet, that's exactly what they did! The Tool interface, in a slide:

```swift
public protocol Tool: Sendable {
    associatedtype Arguments

    var name: String { get }
    var description: String { get }

    func call(arguments: Arguments) async throws -> ToolOutput
}
```

And what a Tool that calls out to MapKit to search for points of interest might look like:

```swift
import FoundationModels
import MapKit

struct FindPointOfInterestTool: Tool {
    let name = "findPointOfInterest"
    let description = "Finds a point of interest for a landmark."

    let landmark: Landmark

    @Generable
    enum Category: String, CaseIterable {
        case restaurant
        case campground
        case hotel
        case nationalMonument
    }

    @Generable
    struct Arguments {
        @Guide(description: "This is the type of destination to look up for.")
        let pointOfInterest: Category

        @Guide(description: "The natural language query of what to search for.")
        let naturalLanguageQuery: String
    }

    func call(arguments: Arguments) async throws -> ToolOutput {
        // Body elided in the presentation
    }

    private func findMapItems(nearby location: CLLocationCoordinate2D,
                              arguments: Arguments) async throws -> [MKMapItem] {
        // Body elided in the presentation
    }
}
```

And all it takes to pass that tool to a LanguageModelSession constructor:

```swift
self.session = LanguageModelSession(
    tools: [FindPointOfInterestTool(landmark: landmark)]
)
```

That's it! The LLM can now reach for and invoke whatever Swift code you want.

Why this is exciting

I'm excited about this stuff, because—even though I was bummed out that none of this came last year—what Apple announced this week couldn't have been released a year ago, because basic concepts like agents invoking tools didn't exist a year ago. The ideas themselves needed more time in the oven. And because Apple bided its time, version one of its Foundation Models framework is looking like a pretty robust initial release and a great starting point from which to build a new app.

It's possible you skimmed this post and are nevertheless not excited. Maybe you follow AI stuff really closely and all of these APIs are old hat to you by now. That's a completely valid reaction. But the thing that's going on here that's significant is not that Apple put out an API that kinda sorta looks like the state of the art as of two or three months ago, it's that this API sits on top of a strongly-typed language and a reactive, declarative UI framework that can take full advantage of generative AI in a way web applications simply can't—at least not without a cobbled-together collection of unrelated dependencies and mountains of glue code.

Oh, and while every other app under the sun is trying to figure out how to reckon with the unbounded costs that come with "AI" translating to "call out to hilariously expensive API endpoints", all of Apple's stuff is completely free for developers. I know a lot of developers are pissed at Apple right now, but I can't think of another moment in time when Apple made such a compelling technical case for building on its platforms specifically and to the exclusion of cross-compiled, multi-platform toolkits like Electron or React Native.

And now, if you'll excuse me, I'm going to go install some betas and watch my unusually sunny disposition turn on a dime. 🤞

Why agents are bad pair programmers

LLM agents make bad pairs because they code faster than humans think.

I'll admit, I've had a lot of fun using GitHub Copilot's agent mode in VS Code this month. It's invigorating to watch it effortlessly write a working method on the first try. It's a relief when the agent unblocks me by reaching for a framework API I didn't even know existed. It's motivating to pair with someone even more tirelessly committed to my goal than I am.

In fact, pairing with top LLMs evokes many memories of pairing with top human programmers.

The worst memories.

Memories of my pair grabbing the keyboard and—in total and unhelpful silence—hammering out code faster than I could ever hope to read it. Memories of slowly, inevitably becoming disengaged after expending all my mental energy in a futile attempt to keep up. Memories of my pair hitting a roadblock and finally looking to me for help, only to catch me off guard and without a clue as to what had been going on in the preceding minutes, hours, or days. Memories of gradually realizing my pair had been building the wrong thing all along and then suddenly realizing the task now fell to me to remediate a boatload of incidental complexity in order to hit a deadline.

So yes, pairing with an AI agent can be uncannily similar to pairing with an expert programmer.

The path forward

What should we do instead? Two things:

- The same thing I did with human pair programmers who wanted to take the ball and run with it: I let them have it. In a perfect world, pairing might lead to a better solution, but there's no point in forcing it when both parties aren't bought in. Instead, I'd break the work down into discrete sub-components for my colleague to build independently. I would then review those pieces as pull requests. Translating that advice to LLM-based tools: give up on editor-based agentic pairing in favor of asynchronous workflows like GitHub's new Coding Agent, whose work you can also review via pull request

- Continue to practice pair-programming with your editor, but throttle down from the semi-autonomous "Agent" mode to the turn-based "Edit" or "Ask" modes. You'll go slower, and that's the point. Also, just like pairing with humans, try to establish a rigorously consistent workflow as opposed to only reaching for AI to troubleshoot. I've found that ping-pong pairing with an AI in Edit mode (where the LLM can propose individual edits but you must manually accept them) strikes the best balance between accelerated productivity and continuous quality control

Give people a few more months with agents and I think (hope) others will arrive at similar conclusions about their suitability as pair programmers. My advice to the AI tool-makers would be to introduce features to make pairing with an AI agent more qualitatively similar to pairing with a human. Agentic pair programmers are not inherently bad, but their lightning-fast speed has the unintended consequence of undercutting any opportunity for collaborating with us mere mortals. If an agent were designed to type at a slower pace, pause and discuss periodically, and frankly expect more of us as equal partners, that could make for a hell of a product offering.

Just imagining it now, any of these features would make agent-based pairing much more effective:

- Let users set how many lines-per-minute of code—or words-per-minute of prose—the agent outputs

- Allow users to pause the agent to ask a clarifying question or push back on its direction without derailing the entire activity or train of thought

- Expand beyond the chat metaphor by adding UI primitives that mirror the work to be done. Enable users to pin the current working session to a particular GitHub issue. Integrate a built-in to-do list to tick off before the feature is complete. That sort of thing

- Design agents to act with less self-confidence and more self-doubt. They should frequently stop to converse: validate why we're building this, solicit advice on the best approach, and express concern when we're going in the wrong direction

- Introduce advanced voice chat to better emulate human-to-human pairing, which would allow the user both to keep their eyes on the code (instead of darting back and forth between an editor and a chat sidebar) and to light up the parts of the brain that find mouth-words more engaging than text

Anyway, that's how I see it from where I'm sitting the morning of Friday, May 30th, 2025. Who knows where these tools will be in a week or month or year, but I'm fairly confident you could find worse advice on meeting this moment.

As always, if you have thoughts, e-mail 'em.

All the Pretty Prefectures

2025-06-13 Update: Miyazaki & Kochi & Tokushima, ✅ & ✅ & ✅!

2025-06-07 Update: Staying overnight in Nagasaki, which allows me to tighten up my rules: now only overnight stays in a prefecture count!

2025-06-06 Update: Saga, ✅!

2025-06-02 Update: Fukui, ✅!

2025-06-01 Update: Ibaraki, ✅!

2025-05-31 Update: Tochigi, ✅!

2025-05-29 Update: Fukushima, ✅!

2025-05-28 Update: Yamagata, 🥩!

2025-05-25 Update: Gunma, ✅!

2025-05-24 Update: We can check Saitama off the list.

So far, I've visited 46 of Japan's 47 prefectures.

For a little while now, I've been joking with my Japanese friends that I'm closing in on having visited every single prefecture, and since I have a penchant for exaggerating, I got curious: how many have I actually been to?

Thankfully, because iPhone has been equipped with a GPS for so long, all my photos from our 2009 trip onward are location tagged, so I was pleased to find it was pretty easy to figure this out with Apple's Photos app.

Here are the ground rules:

- Pics or it didn't happen

- Have to stay the night in the prefecture (passing through in a car or train doesn't count)

- If I don't remember what I did or why I was there, it doesn't count

| 都道府県 | Prefecture | Visited? | 1st visit |
|---|---|---|---|
| 北海道 | Hokkaido | ❌ | |
| 青森県 | Aomori | ✅ | 2024 |
| 岩手県 | Iwate | ✅ | 2019 |
| 宮城県 | Miyagi | ✅ | 2019 |
| 秋田県 | Akita | ✅ | 2024 |
| 山形県 | Yamagata | ✅ | 2025 |
| 福島県 | Fukushima | ✅ | 2025 |
| 茨城県 | Ibaraki | ✅ | 2025 |
| 栃木県 | Tochigi | ✅ | 2025 |
| 群馬県 | Gunma | ✅ | 2025 |
| 埼玉県 | Saitama | ✅ | 2025 |
| 千葉県 | Chiba | ✅ | 2015 |
| 東京都 | Tokyo | ✅ | 2005 |
| 神奈川県 | Kanagawa | ✅ | 2005 |
| 新潟県 | Niigata | ✅ | 2024 |
| 富山県 | Toyama | ✅ | 2024 |
| 石川県 | Ishikawa | ✅ | 2023 |
| 福井県 | Fukui | ✅ | 2025 |
| 山梨県 | Yamanashi | ✅ | 2024 |
| 長野県 | Nagano | ✅ | 2009 |
| 岐阜県 | Gifu | ✅ | 2005 |
| 静岡県 | Shizuoka | ✅ | 2023 |
| 愛知県 | Aichi | ✅ | 2009 |
| 三重県 | Mie | ✅ | 2019 |
| 滋賀県 | Shiga | ✅ | 2005 |
| 京都府 | Kyoto | ✅ | 2005 |
| 大阪府 | Osaka | ✅ | 2005 |
| 兵庫県 | Hyogo | ✅ | 2005 |
| 奈良県 | Nara | ✅ | 2005 |
| 和歌山県 | Wakayama | ✅ | 2019 |
| 鳥取県 | Tottori | ✅ | 2024 |
| 島根県 | Shimane | ✅ | 2024 |
| 岡山県 | Okayama | ✅ | 2024 |
| 広島県 | Hiroshima | ✅ | 2012 |
| 山口県 | Yamaguchi | ✅ | 2019 |
| 徳島県 | Tokushima | ✅ | 2025 |
| 香川県 | Kagawa | ✅ | 2020 |
| 愛媛県 | Ehime | ✅ | 2024 |
| 高知県 | Kochi | ✅ | 2025 |
| 福岡県 | Fukuoka | ✅ | 2009 |
| 佐賀県 | Saga | ✅ | 2025 |
| 長崎県 | Nagasaki | ✅ | 2024 |
| 熊本県 | Kumamoto | ✅ | 2023 |
| 大分県 | Oita | ✅ | 2024 |
| 宮崎県 | Miyazaki | ✅ | 2025 |
| 鹿児島県 | Kagoshima | ✅ | 2023 |
| 沖縄県 | Okinawa | ✅ | 2024 |

Working through this list, I was surprised by how many places I'd visited for a day trip or passed through without so much as staying the night. (Apparently 11 years passed between my first Kobe trip and my first overnight stay?) This exercise also made it clear to me that having a mission like my 2024 Nihonkai Tour is a great way to string together multiple short visits while still retaining a strong impression of each place.

Anyway, now I've definitely got some ideas for where I ought to visit in 2025! 🌄

Ruby makes advanced CLI options easy

If you're not a "UNIX person", the thought of writing a command line application can be scary and off-putting. People find the command line so intimidating that Ruby, which was initially populated by a swarm of Windows-to-Mac migrants, now boasts a crowded field of gems that purport to make CLI development easier, often in exchange for coarser control over basic process management, more limited option parsing, and increased dependency risk (many Rails upgrades have been stalled by a project's inclusion of a CLI gem that was built with an old version of thor).

Good news, you probably don't need any of those gems!

See, every UNIX-flavored language ships with its own built-in tools for building command line interfaces (after all, ruby itself is a CLI!), but I just wanted to take a minute to draw your attention to how capable Ruby's built-in OptionParser is in its own right. So long as you're writing a straightforward, conventional command line tool, it's hard to imagine needing much else.

This morning, I was working on the tldr test runner Aaron and I built, specifically on making its timeout configurable. What I wanted was a single flag that could do three things: (1) enable the timeout, (2) disable the timeout, and (3) set the timeout to some number of seconds. At first, I started implementing this as three separate options, but then I remembered that OptionParser is surprisingly adept at reading the arcane string format you might have seen in a CLI's man page or help output and "doing the right thing" for you.

Here's a script to demo what I'm talking about:

```ruby
#!/usr/bin/env ruby

require "optparse"

config = {}

OptionParser.new do |opts|
  opts.banner = "Usage: timer_outer [options]"

  opts.on "-t", "--[no-]timeout [TIMEOUT]", Numeric, "Timeout (in seconds) before timer aborts the run (Default: 1.8)" do |timeout|
    config[:timeout] = if timeout.is_a?(Numeric)
      # --timeout 42.3 / -t 42.3
      timeout
    elsif timeout.nil?
      # --timeout / -t
      1.8
    elsif timeout == false
      # --no-timeout / --no-t
      -1
    end
  end
end.parse!(ARGV)

puts "Timeout: #{config[:timeout].inspect}"
```

And here's what you get when you run the script from the command line. What you'll find is that this single option packs in SEVEN permutations of flags users can specify (including not providing the option at all):

```shell
$ ruby timer_outer.rb --timeout 5.5
Timeout: 5.5
$ ruby timer_outer.rb --timeout
Timeout: 1.8
$ ruby timer_outer.rb --no-timeout
Timeout: -1
$ ruby timer_outer.rb -t 2
Timeout: 2
$ ruby timer_outer.rb -t
Timeout: 1.8
$ ruby timer_outer.rb --no-t
Timeout: -1
$ ruby timer_outer.rb
Timeout: nil
```
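Shelling out once per permutation gets tedious. One way to exercise all seven cases from a single Ruby file is to wrap the parser in a method and hand it arrays instead of ARGV. This is a minimal sketch, not how tldr itself does it: parse_timeout is a hypothetical helper, and it calls OptionParser#parse (which works on a copy of the array) rather than parse! (which consumes it).

```ruby
require "optparse"

# Hypothetical helper: rebuilds the timer_outer.rb parser and returns the
# resulting config hash for any argument list, so no shell is needed.
def parse_timeout(argv)
  config = {}
  OptionParser.new do |opts|
    opts.banner = "Usage: timer_outer [options]"
    opts.on "-t", "--[no-]timeout [TIMEOUT]", Numeric, "Timeout (in seconds) before timer aborts the run (Default: 1.8)" do |timeout|
      # Same mapping as the script above, written as a case expression
      config[:timeout] = case timeout
                         when Numeric then timeout # --timeout 42.3 / -t 42.3
                         when nil then 1.8         # --timeout / -t
                         when false then -1        # --no-timeout / --no-t
                         end
    end
  end.parse(argv) # #parse dups the array; #parse! would consume it
  config
end

[%w[--timeout 5.5], %w[--timeout], %w[--no-timeout], %w[-t 2], %w[-t], %w[--no-t], []].each do |argv|
  puts "#{argv.inspect} => #{parse_timeout(argv)[:timeout].inspect}"
end
```

The same helper makes it easy to pin down edge cases in a test suite without spawning a process for each one.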

Moreover, using OptionParser will define a --help (and -h) option for you:

```shell
$ ruby timer_outer.rb --help
Usage: timer_outer [options]
    -t, --[no-]timeout [TIMEOUT]     Timeout (in seconds) before timer aborts the run (Default: 1.8)
```
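That same help text is available as a plain string via OptionParser#help, which comes in handy when reporting bad input. A sketch, assuming you want to print the error plus usage to stderr when someone passes a flag the parser doesn't know (--bogus here is just a stand-in):

```ruby
require "optparse"

parser = OptionParser.new do |opts|
  opts.banner = "Usage: timer_outer [options]"
  opts.on "-t", "--[no-]timeout [TIMEOUT]", Numeric, "Timeout (in seconds) before timer aborts the run (Default: 1.8)"
end

begin
  # --bogus stands in for any flag the parser doesn't recognize
  parser.parse(%w[--bogus])
rescue OptionParser::InvalidOption => e
  warn e.message   # names the offending flag
  warn parser.help # the same usage text that --help prints
end
```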

What's going on here

The script above is hopefully readable, but this line is so dense it may be hard for you to (ugh) parse:

```ruby
opts.on "-t", "--[no-]timeout [TIMEOUT]", Numeric, "Timeout …" do |timeout|
```

Let's break down each argument passed to OptionParser#on above:

- "-t" is the short option name, which consists of a single hyphen and a single character

- "--[no-]timeout [TIMEOUT]" does four things at once:
  - Specifies --timeout as the long option name, indicated by two hyphens and at least two characters
  - Adds an optional negation with --[no-]timeout, which, when the user passes --no-timeout, will pass a value of false to the block
  - The dummy word TIMEOUT signals that users can pass a value after the flag (conventionally upper-cased to visually distinguish it from option names)
  - Indicates the TIMEOUT value is optional by wrapping it in brackets as [TIMEOUT]

- Numeric is an ancestor of Float and Integer and indicates that OptionParser should cast the user's input from a string to a number for you

- "Timeout…" is the description that will be printed by --help

- do |timeout| is a block that will be invoked every time this option is detected by the parser. The block's timeout argument will either be set to a numeric option value, nil when no option value is provided, or false when the flag is negated
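To see what the block actually receives before any defaults are applied, this sketch records the raw values instead of mapping them (raw_values is a hypothetical helper name, and the description string is shortened):

```ruby
require "optparse"

# Hypothetical helper: collects whatever OptionParser yields to the block,
# without translating nil or false into defaults.
def raw_values(argv)
  seen = []
  parser = OptionParser.new
  parser.on("-t", "--[no-]timeout [TIMEOUT]", Numeric, "Timeout in seconds") { |timeout| seen << timeout }
  parser.parse(argv)
  seen
end

p raw_values(%w[--timeout 5.5])     # => [5.5] (a Float)
p raw_values(%w[-t 2])              # => [2] (an Integer)
p raw_values(%w[--timeout])         # => [nil] (flag without a value)
p raw_values(%w[--no-timeout])      # => [false] (negated flag)
p raw_values(%w[-t 1 --no-timeout]) # => [1, false] (one call per occurrence)
```

The last line shows the block firing once per flag, which is what makes "last in wins" fall out naturally.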

If a user specifies the timeout flag more than once, OptionParser will invoke your block for each occurrence. That means in a conventional implementation like the one above, "last in wins":

```shell
$ ruby timer_outer.rb --timeout 3 --no-timeout
Timeout: -1
$ ruby timer_outer.rb --no-timeout --timeout 3
Timeout: 3
```

And this is just scratching the surface! There are an incredible number of CLI features available in modern Ruby, but the above covers the vast majority of my use cases for CLIs like standard and tldr.

For more on using OptionParser, check out the official docs and tutorial.

Programming is about mental stack management

The performance of large language models is, in part, constrained by the maximum size "context window" they support. In the early days, if you had a long-running chat with ChatGPT, you'd eventually exceed its context window and it would "forget" details from earlier in the conversation. Additionally, the quality of an LLM's responses will decrease if you fill that context window with anything but the most relevant information. If you've ever had to repeat or rephrase yourself in a series of replies to clarify what you want from ChatGPT, it will eventually be so anchored by the irrelevant girth of the preceding conversation that its "cognitive ability" will fall off a cliff and you'll never get the answer you're looking for. (This is why, if you can't get the answer you want on the first try, you're better off editing the original message as opposed to replying.)

Fun fact: humans are basically the same. Harder problems demand higher mental capacity. When you can't hold everything in your head at once, you can't consider every what-if and, in turn, won't be able to preempt would-be issues. Multi-faceted tasks also require clear focus to doggedly pursue the problem that needs to be solved, as distractions will deplete one's cognitive ability.

These two traits—high mental capacity and clear focus—are essential to solving all but the most trivial computer programming tasks. The ability to maintain a large "mental stack" and the discipline to move seamlessly up and down that stack without succumbing to distraction are hallmarks of many great programmers.

Why is this? Because accomplishing even pedestrian tasks with computers can take us on lengthy, circuitous journeys rife with roadblocks and nonsensical solutions. I found myself down an unusually deep rabbit hole this week—so much so that I actually took the time to explain to Becky what yak shaving is, as well as the concept of pushing dependent problems onto a stack, only to pop them off later.
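For the non-programmers: a stack works like a pile of plates, where the newest item goes on top and you can only get back to what's underneath by first taking the top one off. A toy Ruby sketch using a plain Array, with task names loosely paraphrased from this week's saga (they're illustrative, not a real to-do list):

```ruby
# Toy model of a "mental stack" using a plain Ruby Array.
stack = []

stack.push("Add a JSON route")           # the original goal
stack.push("Cache the response")         # a prerequisite discovered along the way
stack.push("Extract caching into a gem") # a prerequisite of the prerequisite
stack.push("Fix the test runner")        # ...and so on

puts "Depth #{stack.size}, working on: #{stack.last}"

# Only the top item can be finished first; then work resumes one level down.
finished = stack.pop
puts "Finished: #{finished}. Back to: #{stack.last}"
```

Every push is one more thing to keep in working memory until it's popped, which is why a deep stack is so draining.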

The conversation was a good reminder that, despite being fundamental to everyday programming, almost nobody talks about this phenomenon—neither the value of mastering it as a skill nor its potential impact on one's stress level and mood. (Perhaps that's because, once you've had this insight, there's just not much reason to discuss it in depth, apart from referencing it with shorthand like "yak shaving".) So, I figured it might be worth taking the time to write about this "mental stack management", using this week's frustrations as an example with which to illustrate what I'm talking about. If you're not a programmer and you're curious what it's like in practice, you might find this illuminating. If you are a programmer, perhaps you'll find some commiseration.

Here goes.

Why it takes me a fucking week to get anything done

The story starts when I set out to implement a simple HTTP route in POSSE Party that would return a JSON object mapping each post it syndicates from this blog to the permalinks of all the social media posts it creates. Seems easy, right?

Here's how it actually went. Each list item below represents a problem I pushed onto my mental stack in the course of working on this:

- Implement the feature in 5 minutes and 10 lines of code

- Consider that, as my first public-facing route that hits the database, I should probably cache the response

- Decide to reuse the caching API I made for Better with Becky

- Extract the caching functionality into a new gem

- Realize an innocuous Minitest update broke the m runner again, preventing me from running individual tests quickly

- Switch from Minitest to the TLDR test runner I built with Aaron last year

- Watch TLDR immediately blow up, because Ruby 3.4's Prism parser breaks its line-based CLI runner (e.g. tldr my_test.rb:14)

- Learn why it is much harder to determine method locations under Prism than it was with parse.y

- Fix TLDR, only to find that super_diff is generating warnings that will definitely impact TLDR users

- Clone super_diff's codebase, get its tests running, and reproduce the issue in a test case

- Attempt to fix the warning, but notice it'd require switching to an older way to forward arguments (from ... to *args, **kwargs, &block), which didn't smell right

- Search the exact text of the warning and find that it wasn't indicative of a real problem, but rather a bug in Ruby itself

- Install Ruby 3.4.2 in order to re-run my tests and see whether it cleared the warnings super_diff was generating
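For anyone who hasn't run into the two forwarding styles mentioned above: since Ruby 2.7, a method can declare ... and hand every argument it received (positional, keyword, and block) straight to another method, while the older approach spells out each kind by hand. A minimal sketch with hypothetical method names; it only shows that both styles forward the same things and makes no attempt to reproduce the super_diff warning.

```ruby
# Hypothetical target method that reports what it received.
def target(*args, **kwargs, &block)
  [args, kwargs, block&.call]
end

# Modern style: `...` forwards positional, keyword, and block arguments.
def forward_modern(...)
  target(...)
end

# Older style: every kind of argument is captured and re-splatted by hand.
def forward_classic(*args, **kwargs, &block)
  target(*args, **kwargs, &block)
end

modern = forward_modern(1, 2, verbose: true) { :done }
classic = forward_classic(1, 2, verbose: true) { :done }
p modern
p classic
p modern == classic
```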

At this point, I was thirteen levels deep in this stack and it was straining my mental capacity. I ran into Becky in the hallway and was unnecessarily short with her, because my mind was struggling to keep all of this context in my working memory. It's now day three of this shit. When I woke up this morning, I knew I had to fix an issue in TLDR, but it took me a few minutes to even remember what the fuck I needed it for.

And now, hours later, here I am working in reverse as I pop each problem off the stack: closing tabs, resolving GitHub issues, publishing a TLDR release. (If you're keeping score, that puts me at level 5 of the above stack, about to pop up to level 3 and finally get to work on this caching library.) I needed a break, so I went for my daily jog to clear my head.

During my run, a thought occurred to me. You know, I don't even want POSSE Party to offer this feature.

Well, fuck.

Announcing Merge Commits, my all-new podcast (sort of)

Okay, so hear me out. Last year, I started my first podcast: Breaking Change. It's a solo project that runs biweekly-ish with each episode running 2–3 hours. It's a low-stakes discussion meant to be digested in chunks—while you're in transit, doing chores, walking the dog, or trying to fall asleep. It covers the full gamut of my life and interests—from getting mad at technology in my personal life, to getting mad at technology in my work, to getting mad at technology during leisure activities. In its first 15 months, I've recorded 33 episodes and I'm approaching an impressive-sounding 100 hours of monologue content.

Today, I launched a more traditional, multi-human interview podcast… and dropped 36 fucking episodes on day one. It's called Merge Commits. Add them up, and that's over 35 hours of Searls-flavored content. You can subscribe via this dingus here:

Wait, go back to the part about already having 36 episodes, you might be thinking/screaming.

Well, the thing is, with one exception, none of these interviews are actually new. And I'm not the host of the show—I'm always the one being interviewed. See, since the first time someone asked me on their podcast (which appears to have been in June 2012), I've always made a habit of saving them for posterity. Over the past couple of days, I've worked through all 36 interviews I could find and pulled together the images and metadata needed to publish them as a standalone podcast.

Put another way: Merge Commits is a meta-feed of every episode of someone else's podcast where I'm the guest. Each show is like a git merge commit and only exists to connect the outside world to the Breaking Change cinematic universe. By all means, if you enjoy an interview, follow the link in the show notes and subscribe to the host's show! And if you have a podcast of your own and think I'd make a good guest, please let me know!

Look—like a lot of the shit I do—I've never heard of anyone else doing something like this. I know it's weird, but as most of these podcasts are now years out of production, I just wanted to be sure they wouldn't be completely lost to the sands of time. And, as I've recently discussed in Searls of Wisdom, I'm always eager to buttress my intellectual legacy.

This Vision Pro strap is totally globular!

Who the fuck knows what a "globular cluster" is, but the Globular Cluster CMA1 is my new recommendation for Best Way to Wear Vision Pro. It replaces a lightly-modified BOBOVR M2 as the reigning champ, primarily due to the fact it's a thing you can just buy on Amazon and slap on your face. It's slightly lighter, too. One downside: it places a wee bit more weight up front. I genuinely forget I'm wearing the BOBOVR M2 and I never quite forget I'm wearing this one.

Here's a picture. You can't tell, but I'm actually winking at you.

Also pictured, I've started wearing a cycling skull cap when I work with Vision Pro to prevent the spread of my ethnic greases onto the cushions themselves. By regularly washing the cap, I don't have to worry about having an acne breakout as a 40-year-old man. An ounce of prevention and all that.

You might be asking, "where's the Light Seal?" Well, it turns out if you're wearing this thing for more than a couple hours, having peripheral vision and feeling airflow over your skin is quite nice. Besides, all the cool kids are doing it. Going "open face" requires an alternative to Apple's official straps, of course, because Apple would prefer to give your cheekbones a workout as gravity leaves its mark on your upper-jowl region.

You might also be wondering, "does he realize he looks ridiculous?" All I can say is that being totally shameless and not caring what the fuck anyone thinks is always a great look.

The 12" MacBook was announced 10 years ago

On March 9, 2015, Apple announced a redesigned MacBook, notable for a few incredible things:

- 2 pounds (the lightest computer Apple currently sells is 35% heavier at 2.7 pounds)

- 13.1mm thin

- A 12-inch retina screen (something the MacBook Air wouldn't receive until late 2018)

- The Force Touch trackpad (which came to the MacBook Pro line the same day)

It also became infamous for a few less-than-incredible things:

- A single port for charging and data transfer, heralding the dawn of the Dongle Era

- That port was USB-C, which most people hadn't even heard of, and which approximately zero devices supported

- A woefully-underpowered 5-watt TDP Intel chip

- The inadvisably-thin butterfly keyboard, which would go on to hobble Apple's entire notebook line for 5 years (though my MacBooks never experienced any of the issues I had with later MacBooks Pro)

Still, the 2015 MacBook (and the 2016 and 2017 revisions Apple would go on to release) was, without a doubt, my favorite computer ever. When I needed power, I had uncompromised power on my desktop. When I needed portability, I had uncompromised portability in my bag.

It was maybe Phil Schiller's best pitch for a new Mac, too. Here's the keynote video, scrubbed to the MacBook part:

Literally the worst thing about traveling with the 12" MacBook was that I'd frequently panic—oh shit, did I forget my computer back there?—when in fact I had just failed to detect its svelte 2-pound presence in my bag. I lost track of how many times I stopped in traffic and rushed to search for it, only to calm down once I confirmed it was still in my possession.

I started carrying it in this ridiculous-looking 12-liter Osprey pack, because it was the only bag I owned that was itself light enough for me to feel the weight of the computer:

This strategy backfired when I carelessly left the bag (and computer) on the trunk of our car, only for Becky to drive away without noticing it (probably because it was barely taller than the car's spoiler), making the 12" MacBook the first computer I ever lost. Restoring my backup to its one-port replacement was a hilarious misadventure in retrying repeatedly until the process completed before the battery gave out.

I have many fond memories programming in the backyard using the MacBook as a remote client to my much more powerful desktop over SSH, even though bright sunlight on a cool day was all it took to discover Apple had invented a new modal overheating screen just for the device.

Anyway, ever since the line was discontinued in 2019, I've been waiting for Apple to release another ultraportable, and… six years later, I'm still waiting. The 11-inch MacBook Air was discontinued in late 2016, meaning that if your priority is portability, the 13" MacBook Air is the best they can offer you. Apple doesn't even sell an iPad and keyboard accessory that, in combination, weigh less than 2.3 pounds. Their current lineup of portable computers is just nowhere near light enough.

More than the raw numbers, none of Apple's recent Macs have sparked the same joy in me that the 11" Air and 12" MacBook did. Throwing either of those in a bag had functionally zero cost. No thicker than a magazine. Lighter than a liter of water. Today, when I put a MacBook Air in my bag, it's because I am affirmatively choosing to take a computer with me. In 2015, I would regularly leave my MacBook in my bag even when I didn't expect to need it, and often because I was too lazy to take it out between trips. That is the benchmark for portable computing, and Apple simply doesn't deliver it anymore. Hopefully that will change someday.

How to run Claude Code against a free local model

UPDATE: Turns out this relied on code that (according to Anthropic) wasn't released under a license that permitted its distribution, and the project has been issued a DMCA takedown. Go ahead and disregard this post. Use aider or opencode instead.

Last night, Aaron shared the week-old Claude Code demo, and I was pretty blown away by it:

I've tried the "agentic" features of some editors (like Cursor's "YOLO" mode) and have been woefully disappointed by how shitty the UX always is. They often break on basic focus changes, hang at random, and frequently require fussy user intervention with a GUI. Claude Code, however, is a simple REPL, which is all I've ever really wanted from a coding assistant. Specifically, I want to be able to write a test in my editor and then tell a CLI to go implement code to pass the test and let it churn as long as it needs.

Of course, I didn't want to actually try Claude Code, because it would have required a massive amount of expensive API tokens to accomplish anything, and I'm a cheapskate who doesn't want to have to pay someone to perform mundane coding tasks. Fortunately, it took five minutes to find an LLM-agnostic fork of Claude Code called Anon Kode and another five minutes to contribute a patch to make it work with a locally-hosted LLM server.

Thirty minutes later, I had a totally-free, locally-hosted version of the Claude Code experience demonstrated in the video above working on my machine (a MacBook Pro with M4 Pro and 48GB of RAM). I figured other people would like to try this too, so here are step-by-step instructions. All you need is an app called LM Studio and Anon Kode's kode CLI.

Running a locally-hosted server with LM Studio

Because Anon Kode needs to make API calls to a server that conforms to the OpenAI API, I'm using LM Studio to install models and run that server for me.

- Download LM Studio

- When the onboarding UI appears, I recommend unchecking the option to automatically start the server at login

- After onboarding, click the search icon (or hit Command-Shift-M) and install an appropriate model (I started with "Qwen2.5 Coder 14B", as it can fit comfortably in 48GB)

- Once downloaded, click the "My Models" icon in the sidebar (Command-3), then click the settings gear button and set the context length to 8192 (this is Anon Kode's default token limit and it currently doesn't seem to respect other values, so increasing the token limit in LM Studio to match is the easiest workaround)

- Click the "Developer" icon in the sidebar (Command-2), then in the top center of the window, click "Select a model to load" (Command-L) and choose whatever model you just installed

- Run the server (Command-R) by toggling the control in the upper left of the Developer view

- In the right sidebar, you should see an "API Usage" pane with a local server URL. Mine is (and I presume yours will be) http://127.0.0.1:1234
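If you want to confirm LM Studio's server is answering before involving kode at all, here's a minimal Ruby sketch that talks to it directly. It assumes the server root is http://127.0.0.1:1234 (as reported in the "API Usage" pane), that LM Studio serves the usual OpenAI-style /v1/chat/completions path, and that you'll pass in whatever model identifier LM Studio lists for your loaded model. The method names are made up for illustration.

```ruby
require "json"
require "net/http"
require "uri"

# Builds (but does not send) a POST to an OpenAI-compatible chat endpoint.
# base_url is the server root LM Studio reports, WITHOUT a trailing /v1.
def build_chat_request(base_url, model:, prompt:)
  uri = URI.join(base_url.chomp("/") + "/", "v1/chat/completions")
  request = Net::HTTP::Post.new(uri)
  request["Content-Type"] = "application/json"
  request.body = JSON.generate({
    model: model, # assumption: whatever identifier LM Studio shows
    messages: [{ role: "user", content: prompt }]
  })
  [uri, request]
end

# Actually sends the request and returns the first reply's text.
def ask_local_model(base_url, model:, prompt:)
  uri, request = build_chat_request(base_url, model: model, prompt: prompt)
  response = Net::HTTP.start(uri.host, uri.port) { |http| http.request(request) }
  raise "Server returned #{response.code}" unless response.is_a?(Net::HTTPSuccess)

  JSON.parse(response.body).dig("choices", 0, "message", "content")
end
```

With the server running, something like puts ask_local_model("http://127.0.0.1:1234", model: "your-model-id", prompt: "Say hello") should print the model's reply. Keeping the request-building step separate makes it easy to eyeball the exact URL being hit, which is handy given the /v1 path quirk mentioned below.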

Configuring Anon Kode

Since Claude Code is a command-line tool, getting this running will require basic competency with your terminal:

- First up, you'll need Node.js (or an equivalent runtime) installed. I use Homebrew and nodenv to manage my Node installation(s)

- Install Anon Kode (npm i -g anon-kode)

- In your terminal, change into your project directory (e.g. cd ~/code/searls/posse_party/)

- Run kode

- Use your keyboard to go through its initial setup. Once prompted to choose between "Large Model" and "Small Model" selections, hit escape to exit the wizard, since it doesn't support specifying custom server URLs

- When asked if you trust the files in this folder (assuming you're in the right project directory), select "Yes, proceed"

- You should see a prompt. Type /config and hit enter to open the configuration panel, using the arrow keys to navigate and enter to confirm

- AI Provider: toggle to "custom" by hitting enter

- Small model name: set it to "LM Studio" or similar

- Small model base URL: http://127.0.0.1:1234/v1 (or whatever URL LM Studio reported when you started your server)

- API key for small model: provide any string you like, it just needs to be set (e.g. "NA")

- Large model name: set it to "LM Studio" or similar

- API key for large model: again, enter whatever you want

- Large model base URL: http://127.0.0.1:1234/v1

- Press escape to exit

- Setting a custom base URL resulted in Anon Kode failing to append v1 to the path of its requests to LM Studio until I restarted it (if this happens to you, press Ctrl-C twice and run kode again)

- Try asking it to do stuff and see what happens!

That's it! Now what?

Is running a bootleg version of Claude Code useful? Is Claude Code itself useful? I don't know!

I am hardly a master of running LLMs locally, but the steps above at least got things working end-to-end so I can start trying different models and tweaking their configuration. If you try this out and have landed on a configuration that works really well for you, let me know!

Calling private methods without losing sleep at night

Today, I'm going to show you a simple way to commit crimes with a clean conscience.

First, two things I strive for when writing software:

- Making my code do the right thing, even when it requires doing the wrong thing

- Finding out that my shit is broken before all my sins are exposed in production

Today, I was working on a custom wrapper of Rails' built-in static file server, and deemed that it'd be wiser to rely on its internal logic for mapping URL paths (e.g. /index.html) to file paths (e.g. ~/app/public/index.html) than to reinvent that wheel myself.

The only problem? The method I need, ActionDispatch::FileHandler#find_file is private, meaning that I really "shouldn't" be calling it. But also, it's a free country, so whatever. I wrote this and it worked:

filepath, _ = @file_handler.send(:find_file,
  request.path_info, accept_encoding: request.accept_encoding)

If you don't know Ruby, send is a sneaky backdoor way of calling private methods. Encountering send is almost always a red flag that the code is violating the intent of whatever is being invoked. It also means the code carries the risk that it will quietly break someday. Because I'm calling a private API, no one on the Rails team will cry for me when this stops working.
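To see that backdoor in action, here's a tiny self-contained Ruby sketch. The Vault class is hypothetical, standing in for something like ActionDispatch::FileHandler: public_send respects method visibility and raises NoMethodError, while send calls the private method anyway.

```ruby
# A hypothetical class with a private method, standing in for
# something like ActionDispatch::FileHandler#find_file.
class Vault
  private

  def combination
    "12-34-56"
  end
end

vault = Vault.new

# public_send honors visibility, so calling a private method fails.
begin
  vault.public_send(:combination)
rescue NoMethodError => e
  puts "public_send refused: #{e.class}"
end

# send ignores visibility and calls the private method regardless.
puts vault.send(:combination) # prints "12-34-56"
```

That asymmetry is the whole point: send works today, but nothing about the private method's contract promises it will keep working tomorrow.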

So, anyway, I got my thing working and I felt supremely victorious… for 10 whole seconds. Then, the doubts crept in. "Hmm, I'm gonna really be fucked if 3 years from now Rails changes this method signature." After 30 seconds hemming and hawing over whether I should inline the functionality and preemptively take ownership of it—which would separately run the risk of missing out on any improvements or security fixes Rails makes down the road—I remembered the answer:

I can solve this by codifying my assumptions at boot-time.

A little thing I tend to do whenever I make a dangerous assumption is to find a way to pull forward the risk of that assumption being violated as early as possible. It's one reason I first made a name for myself in automated testing—if the tests fail, the code doesn't deploy, and nothing breaks. Of course, I could write a test to ensure this method still works, but I didn't want to give this method even more of my time. So instead, I codified this assumption in an initializer:

# config/initializers/invariant_assumptions.rb
Rails.application.config.after_initialize do
  next if Rails.env.production?

  # Used by lib/middleware/conditional_get_file_handler.rb
  unless ActionDispatch::FileHandler.instance_method(:find_file).parameters == [[:req, :path_info], [:keyreq, :accept_encoding]]
    raise "Our assumptions about a private method call we're making to ActionDispatch::FileHandler have been violated! Bailing."
  end
end

Now, if I update Rails and try to launch my dev server or run my tests, everything will fail immediately if my assumptions are violated. If a future version of Rails changes this method's signature, this blows up. And every time I engage in risky business in the future, I can just add a stanza to this initializer. My own bespoke early warning system.
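If that initializer starts accumulating stanzas, one option is to fold the check into a small helper. This is a sketch, not something Rails provides: assert_method_signature! and FakeFileHandler are hypothetical names, and the fake class exists only so the example runs without Rails. It relies on the same UnboundMethod#parameters comparison as the initializer above, plus a check that the method still exists at all (otherwise instance_method would raise a NameError with a less helpful message).

```ruby
# Hypothetical helper: verifies a method (public or private) still exists
# and still has the exact parameter list we depend on.
def assert_method_signature!(klass, method_name, expected_parameters)
  defined = klass.method_defined?(method_name) ||
    klass.private_method_defined?(method_name)
  raise "#{klass}##{method_name} no longer exists! Bailing." unless defined

  actual = klass.instance_method(method_name).parameters
  return if actual == expected_parameters

  raise "#{klass}##{method_name} changed: expected " \
    "#{expected_parameters.inspect}, got #{actual.inspect}. Bailing."
end

# Stand-in for ActionDispatch::FileHandler so this runs without Rails.
class FakeFileHandler
  private

  def find_file(path_info, accept_encoding:)
    [path_info, accept_encoding]
  end
end

# Passes silently, because the signature matches.
assert_method_signature!(FakeFileHandler, :find_file,
  [[:req, :path_info], [:keyreq, :accept_encoding]])
```

Inside the after_initialize block, the find_file stanza would then shrink to a single call: assert_method_signature!(ActionDispatch::FileHandler, :find_file, [[:req, :path_info], [:keyreq, :accept_encoding]]).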

Writing this note took 20 times longer than the fix itself, by the way. The things I do for you people.

Turning your audio podcast into a video-first production

I was chatting with Adam Stacoviak over at Changelog a couple weeks back, and he mentioned that this year they've taken their podcast "video-first" via their YouTube channel.

I hadn't heard the phrase "video-first" before, but I could imagine he meant, "you record the show for video—which is more complex than recording audio alone—and then the audio is a downstream artifact from that video production." Of course, key to my personal brand is failing to demonstrate curiosity in the moment by simply asking Adam what he does, and instead going on an individual two-week-long spirit quest to invent all the wheels myself based on the possibly incorrect assumption of what he meant in the first place.

Anyway, as of v31 of Breaking Change, my podcast is now, apparently, a video-first production. I figured I'd share my notes on the initial changes to the workflow, along with links to the products I'm using.

Here's the video:

And here's the extremely simple and easy 10-step process that got me there (with affiliate links throughout):

- Record audio and video in OBS

- Video is recorded in 4K@60fps in 8-bit HEVC as an MKV file (because MKV files, unlike MOV, can be interrupted by a crash without losing the entire recording)