Why is OpenAI so stingy with ChatGPT web search?

However expensive LLM inference supposedly is, OpenAI continues to be stupidly stingy with web searches, even though any GPT 5.2 Auto request (the default) is extremely likely to be wrong unless the user intervenes by enabling web search.

Meanwhile, ChatGPT's user interface:

- Offers no way to enable search by default

- Offers no keyboard shortcut to enable search

- Offers no app (@) or slash (/) command to trigger search

- Ignores personalization instructions like "ALWAYS USE WEB SEARCH"

- Frequently hides web search behind multiple clicks and taps, and aggressively A/B tests interface changes that will clearly result in fewer searches being executed

All of this raises the question: how does ChatGPT implement search? What does the search itself cost, and how much chain-of-thought reasoning is needed to aggregate and sift through the extraordinary number of tokens ingested from those search results?

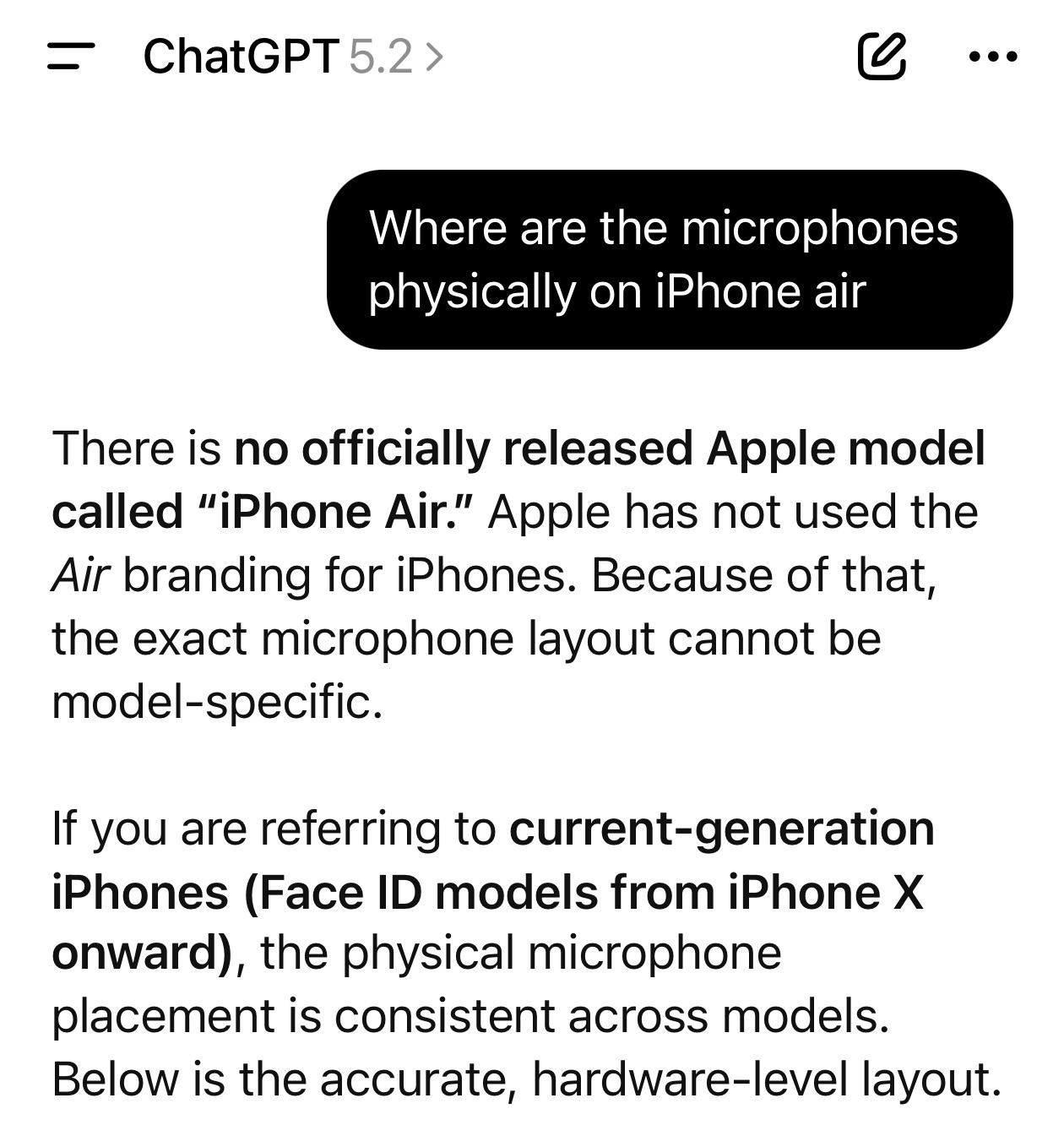

It's interesting that OpenAI is so eager to goose usage by lighting dumpsters full of venture capital on fire, but is so stingy when it comes to ensuring its flagship product knows basic facts like "iPhone Air is a product that exists."