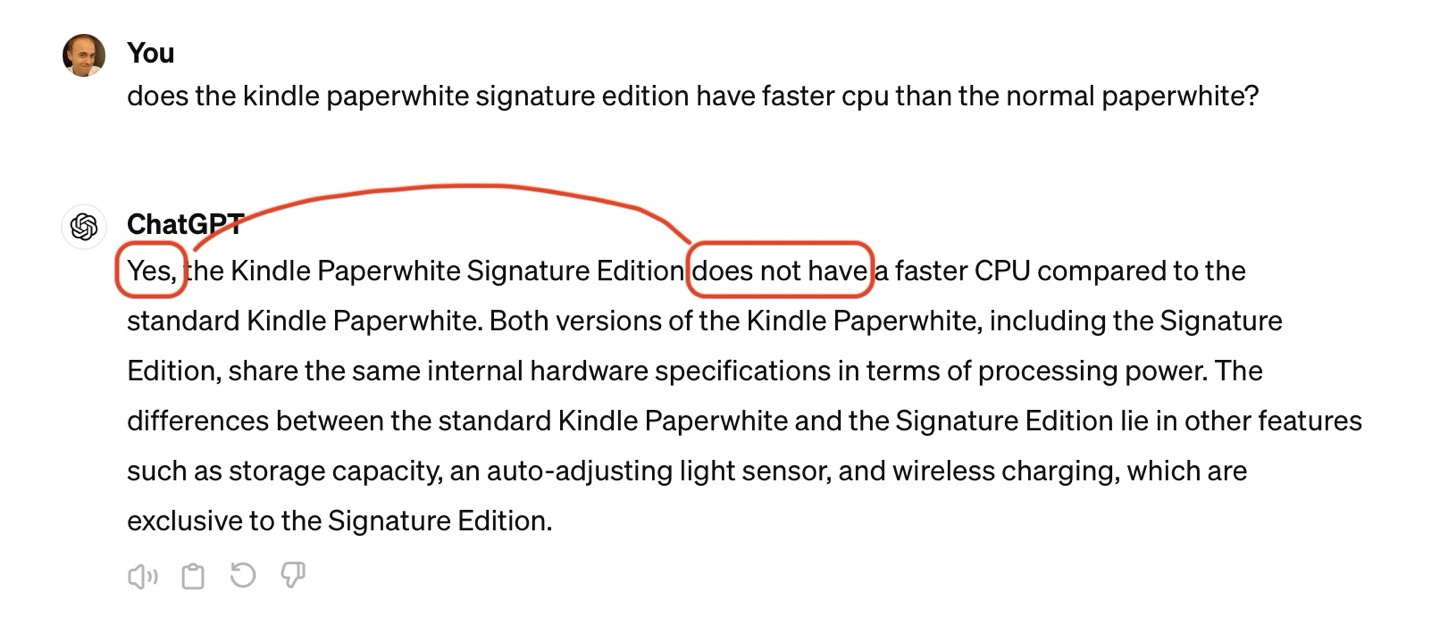

Yes, it isn't

Over the past few months, GPT-4 has started catching its own hallucinations (or, more charitably, its over-eager affirmation of the user) mid-sentence. At this point, about 20% of the yes/no questions I ask it end in a sudden about-face. The reversals are jarring to read, and only once has it explicitly acknowledged its own contradiction, which, I'll admit, was impressive.

ChatGPT spews fluent bullshit: it has no relationship with the truth, so no apology or reflection typically follows these reversals. Unlike most bullshitters, though, it will gladly oblige if you ask for an apology. Silver lining.