Meta's new AI chat sucks at coding

Yesterday, Zuck got on stage to announce Meta's ChatGPT killer, Llama 3, apparently making this bold claim:

Meta says that Llama 3 outperforms competing models of its class on key benchmarks and that it's better across the board at tasks like coding

Coding? You sure about that?

I've been pairing with ChatGPT (using GPT-4) every day for the last few months and it is demonstrably terrible 80% of the time, but 20% of the time it saves me an hour of headaches, so I put up with it anyway. Nevertheless, my experience with Llama 2 was so miserable, I figured Zuck's claim about Llama 3 outperforming GPT-4 was bullshit, so I put it to the test this morning.

TLDR: I asked three questions and Llama 3 whiffed. Badly.

Question 1

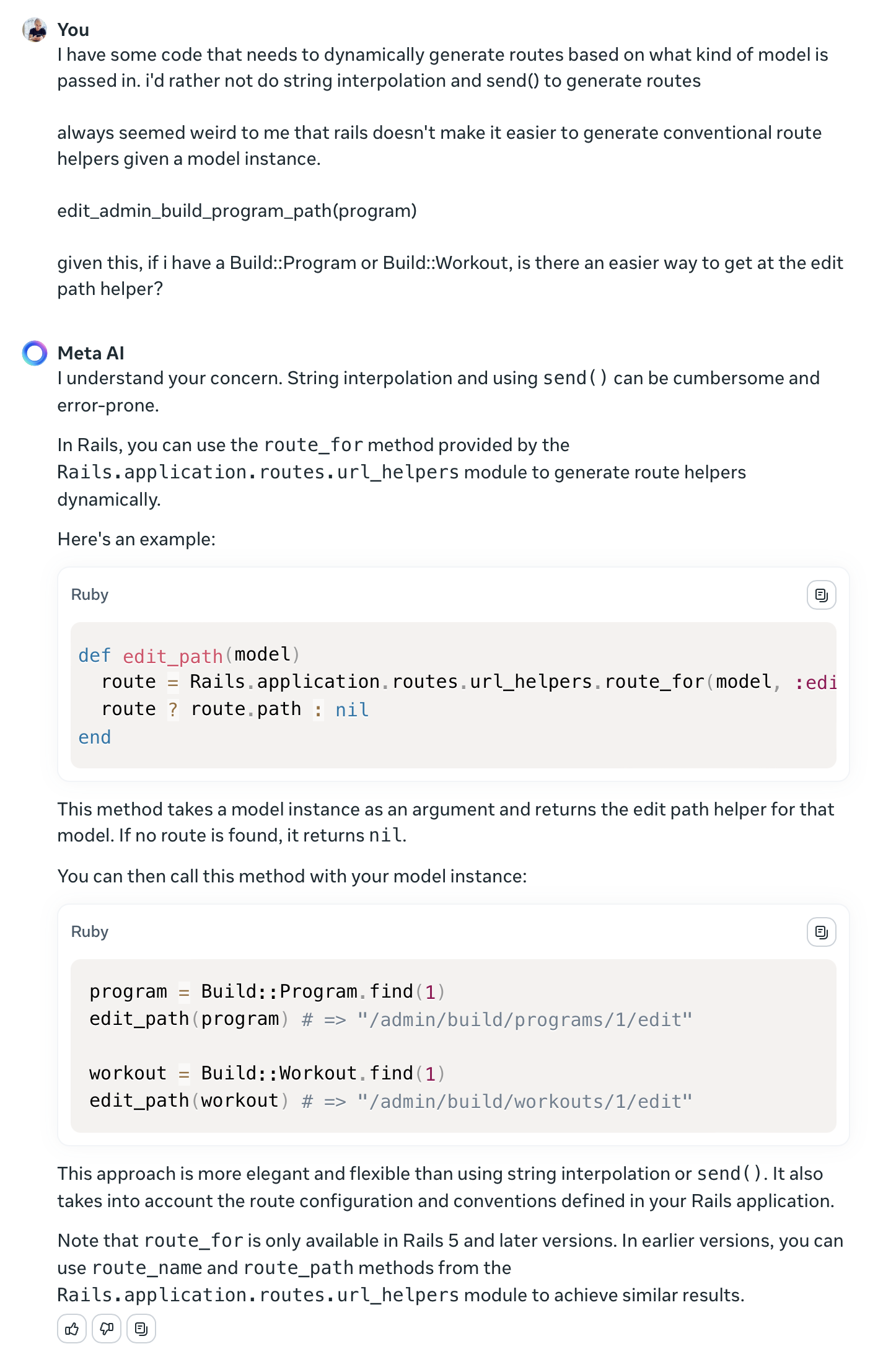

Here's the first question I asked, pondering a less messy way to generate URL paths (secretly knowing how hard this is, given that Rails models and controllers are intentionally decoupled):

Llama is confidently hallucinating here. Its recommendation of calling url_helpers.route_for(model, :edit) doesn't work, because that's not what the method is for.

To be fair, that's a pretty understandable hallucination, given that it clearly wants to fire back an authoritative answer to a question that (in reality) lacks a clear answer. And there's indeed a method that's kinda-sorta related to the task.

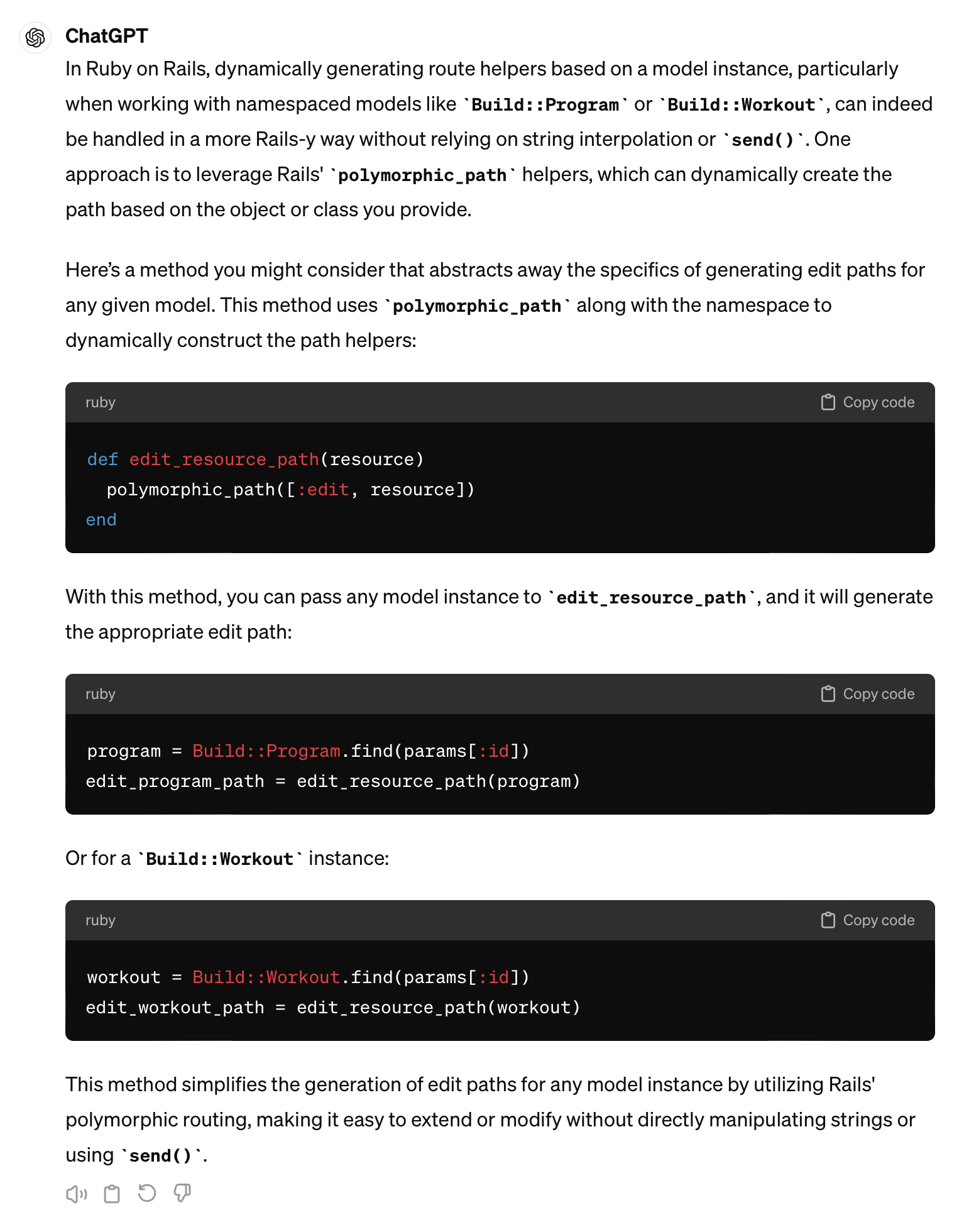

When I asked GPT-4, I got a much more plausible answer:

This still doesn't work in my case (because the path I was thinking of is unconventionally namespaced), but it has the benefit of existing for the stated purpose and not raising an error when naively invoked.

GPT: 1, Llama: 0

Question 2

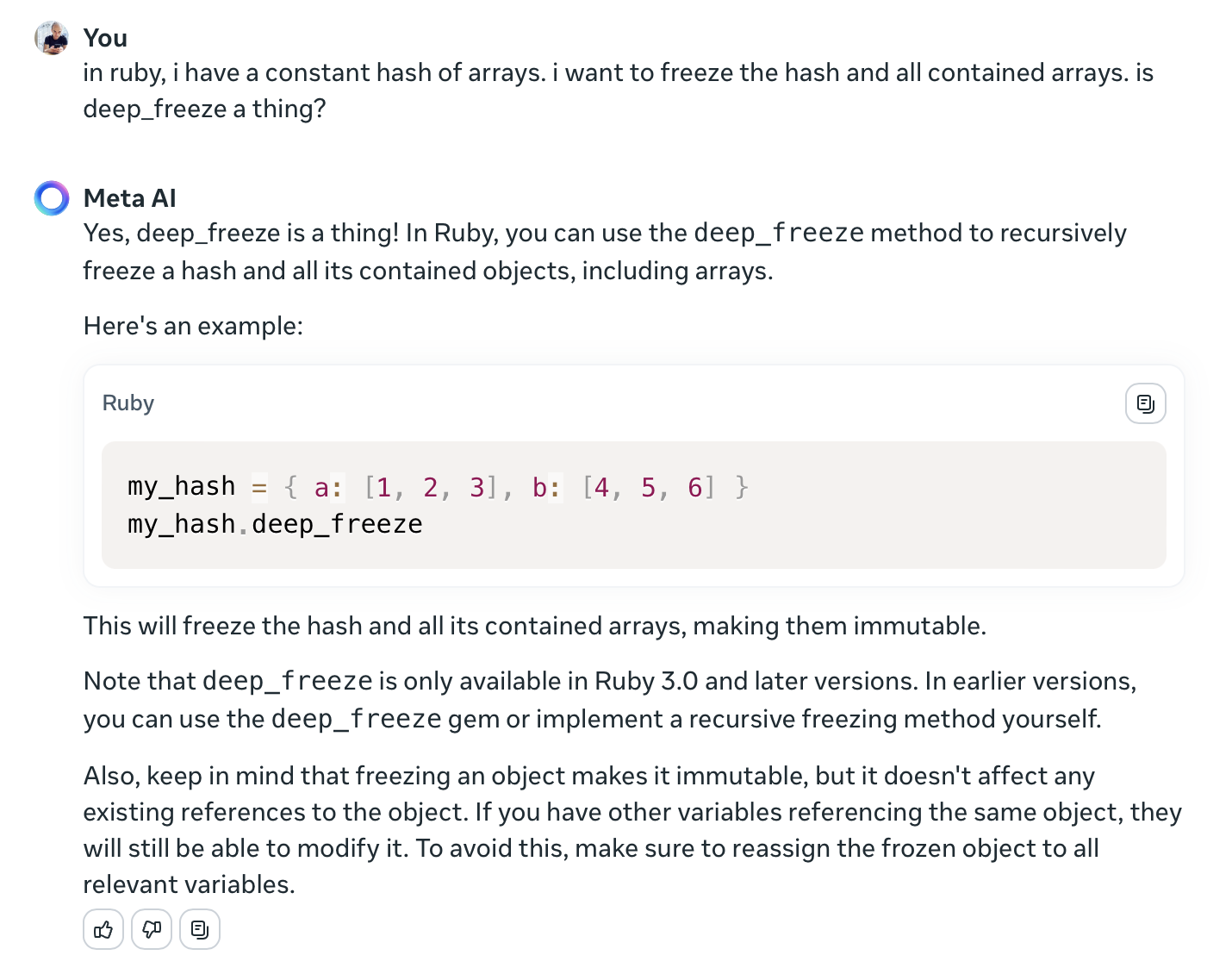

Here's the second question I asked:

Of course, deep_freeze is not a thing. It has been discussed a number of times in Redmine, but never implemented.

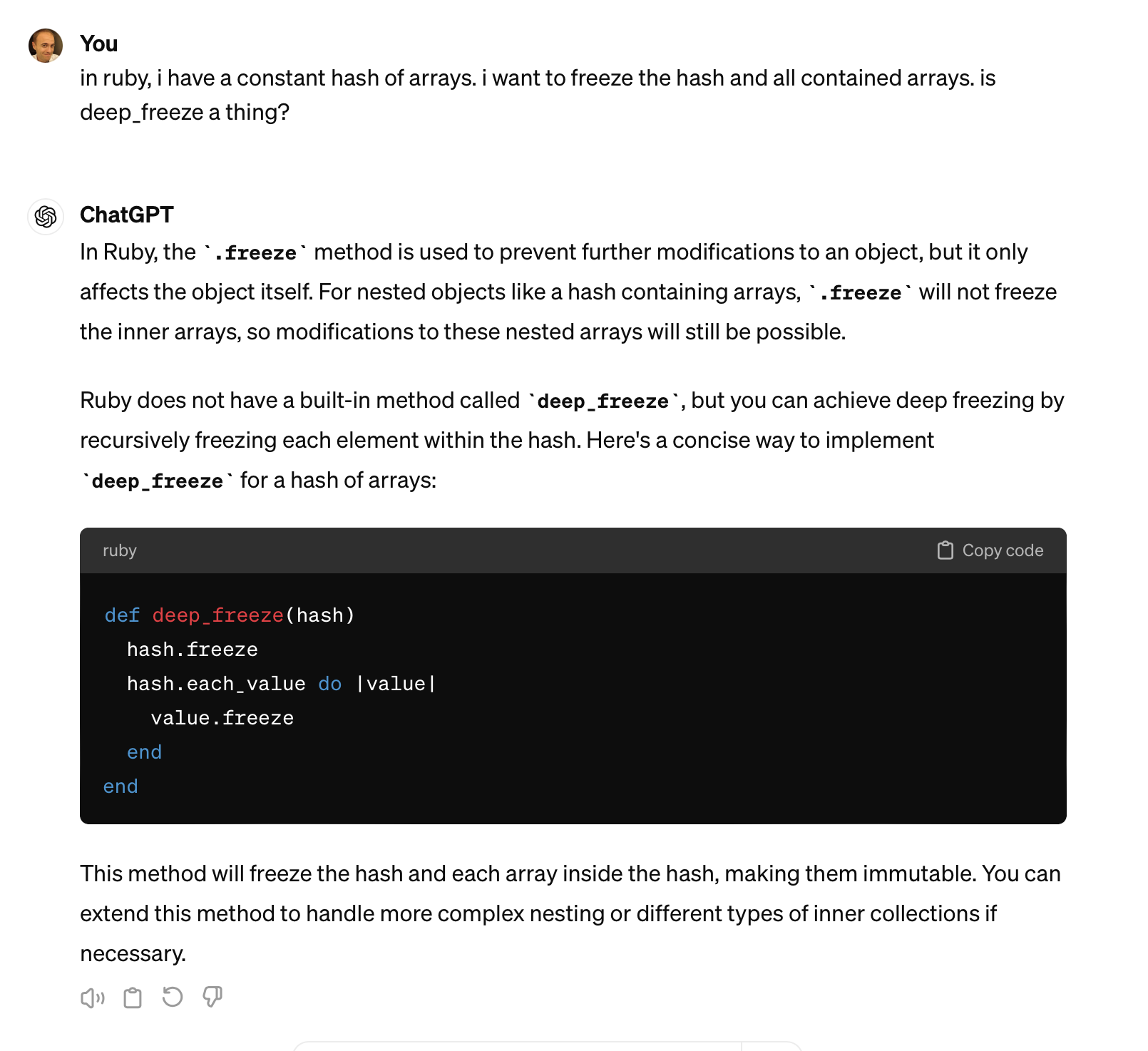

Asking ChatGPT the same thing:

GPT's answer is clearly superior. I wouldn't actually use this code, because it would only work for one layer of nesting and because half a dozen open-source gems exist for this express purpose, but it definitely beats Llama 3.
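For the curious, here's a rough sketch of what freezing every layer of nesting could look like in plain Ruby. To be clear, this is not what either chatbot produced: the deep_freeze helper below is a hypothetical name I'm defining myself, it only knows about hashes and arrays, and it doesn't guard against self-referencing structures. One of those gems is still the better call.

```ruby
# Hypothetical helper for illustration; Ruby ships no built-in deep_freeze.
# Assumes the structure contains only Hashes, Arrays, and leaf values,
# and that it has no circular references.
def deep_freeze(obj)
  case obj
  when Hash
    # Freeze keys and values before freezing the hash itself
    obj.each do |key, value|
      deep_freeze(key)
      deep_freeze(value)
    end
  when Array
    obj.each { |item| deep_freeze(item) }
  end
  obj.freeze
end

config = deep_freeze({ db: { hosts: ["a", "b"] } })
config.frozen?                  # the outer hash
config[:db][:hosts].frozen?     # the nested array
config[:db][:hosts][0].frozen?  # the strings inside it
```

All three checks should come back true, and trying something like config[:db][:hosts] << "c" should raise a FrozenError.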

GPT: 2, Llama: 0

Question 3

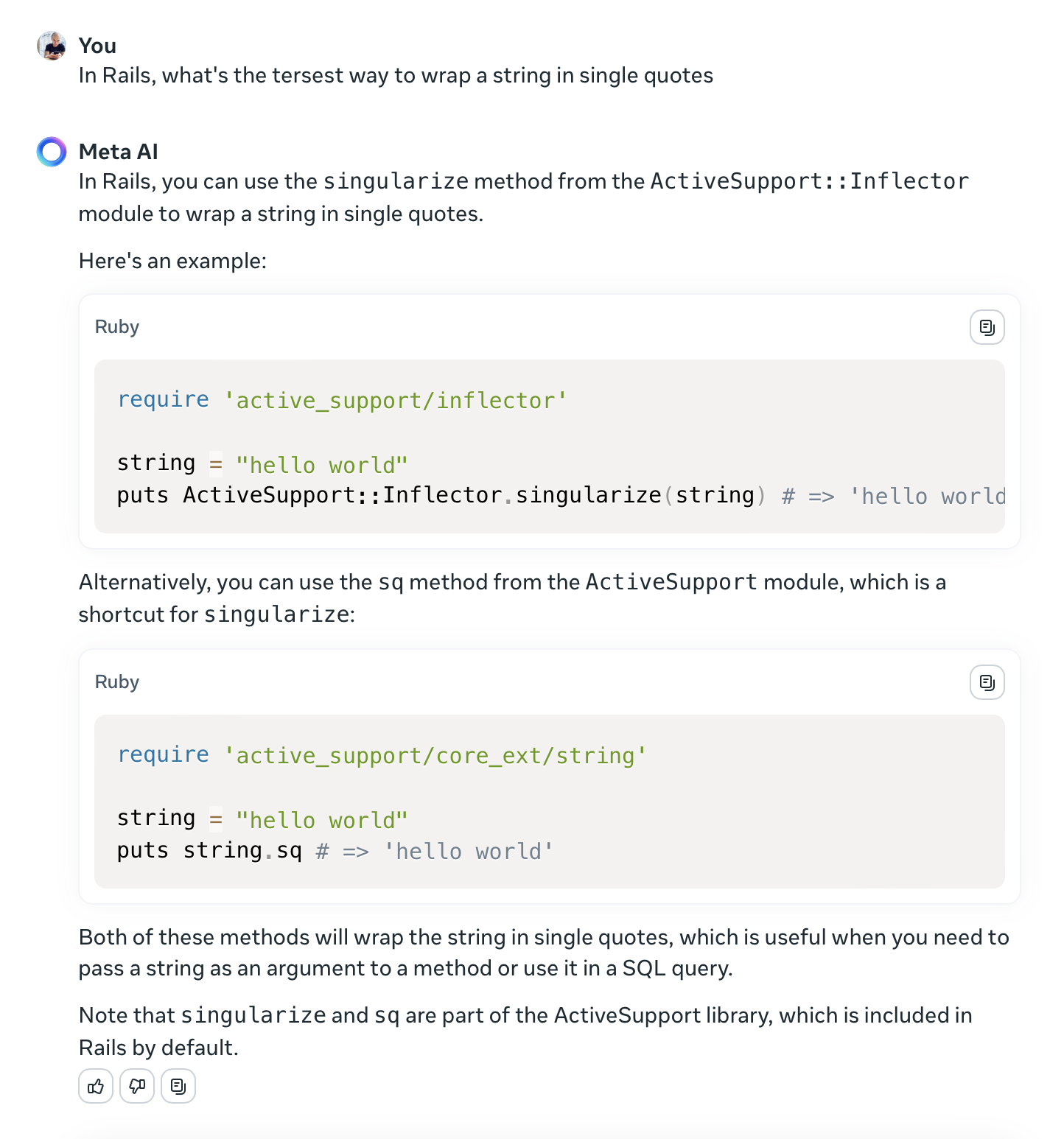

Here's my 3rd and final question (because I gave up, because Llama 3 sucks at coding):

This response makes absolutely no sense. The first code suggestion doesn't wrap the string in single quotes, but rather attempts to make the string singular (as in "not plural"). Clearly Llama 3 failed to parse my request.

The second code block is even more egregious, as there is no such sq method, and even if there were, what would it stand for? "Single quote"? Llama 3 is clearly unaware that there's any difference at all between a single-quoted string and a singular inflection of a word.

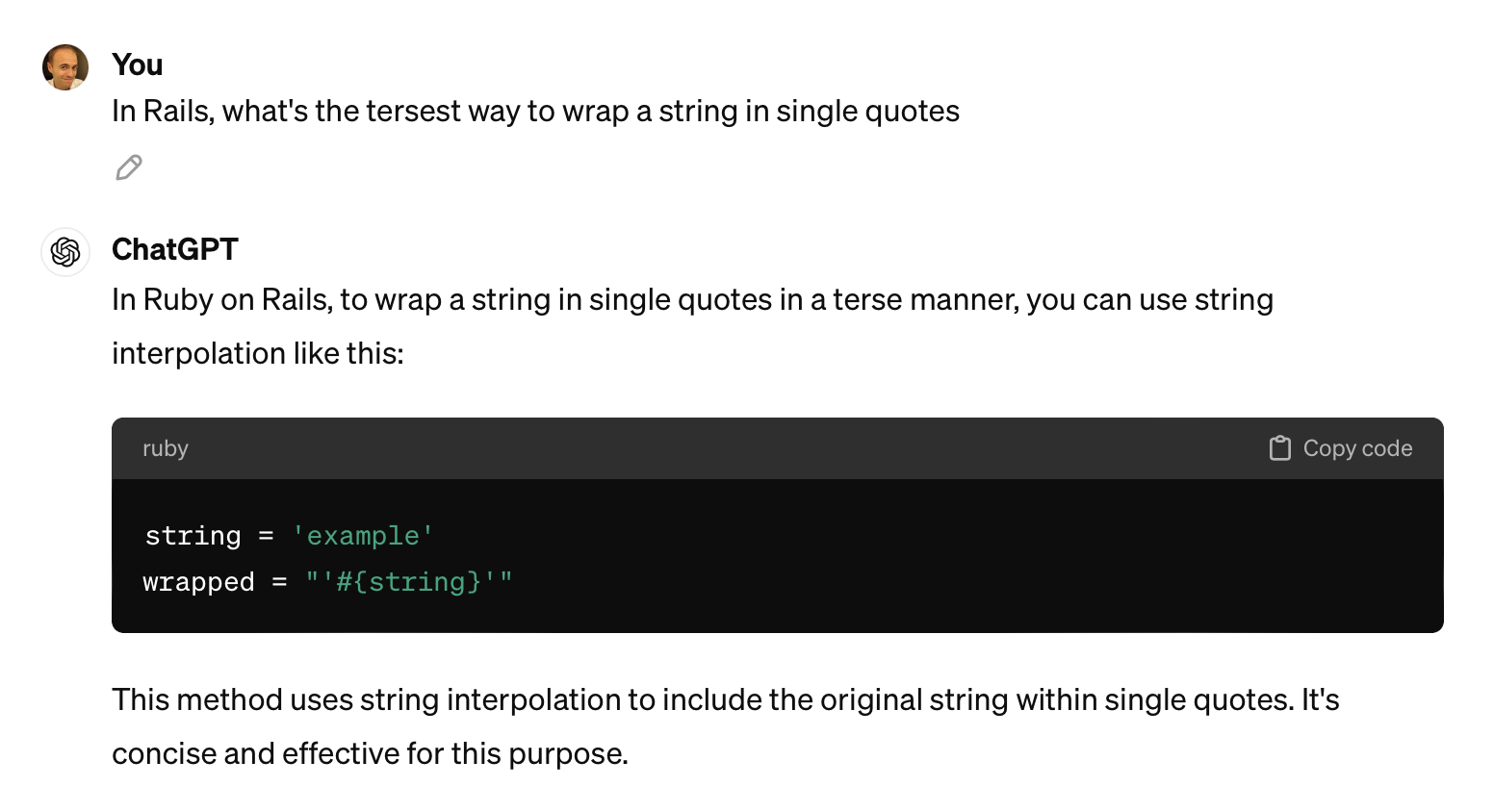

Once again, ChatGPT gave me a better answer:

What I was searching for here was, "is there something like some_string.inspect I could call that would wrap the string in single-quotes instead of double-quotes?" The truth is that there isn't, and GPT-4 respected the absence of any such API by pointing me to interpolation instead.
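The interpolation approach boils down to something like the sketch below. The single_quote method name is my own hypothetical wrapper, not a Ruby API and not necessarily what GPT-4 wrote, and it assumes the string contains no single quotes of its own, since it makes no attempt to escape them.

```ruby
# Hypothetical helper for illustration; not part of Ruby's String API.
# Assumes str has no embedded single quotes (nothing is escaped here).
def single_quote(str)
  "'#{str}'"
end

puts "hello".inspect        # prints "hello" (double quotes included)
puts single_quote("hello")  # prints 'hello'
```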

GPT: 3, Llama: 0

Welp!

Clearly, Meta's big chatbot coming-out party yesterday was a successful bit of marketing, but it remains to be seen how long it will take for Llama to be useful for even pedestrian coding tasks. I'm mad that I wasted my time even trying it, much less writing this up for you.

I agree with John Gruber that OpenAI lacks a moat, but what they still have is a pretty significant head start in the "server-side large language model" market. Meta and Google are still clearly playing catch-up there. In the long term, though, OpenAI really does need to figure out a way to avoid being outflanked by the larger platforms.

In the short term, I suspect Apple is much better positioned to knock the socks off the "client-side large language model" market, in part because nobody else is really trying. Looking forward to WWDC, I suspect Apple will have a pretty firm "no chatbots" rule, both for their internal apps and for third-party apps that take advantage of any new client-side LLM-based APIs. I bet Apple's going to try to force app developers to use these technologies in much more context-rich ways that enhance real application features, instead of each lazily creating chat windows that will only set users up for disappointment and frustration.