Brand-new Rails 7 apps exceed Heroku's memory quotas

Update: Judging by this commit and the current status of main, this should be fixed for Rails 8. Great PR thread about this, by the way.

In the history of Ruby on Rails, one of the healthiest pressures to keep memory usage down has been the special role Heroku has played as the "easiest place to get started hosting Rails apps". In general, Rails Core and Heroku's staff have done a pretty good job over the last 17(!) years of balancing the eternal tension between advancing the framework and making sure a new app can comfortably run on Heroku's free (or now, cheap) "dyno" servers. Being able to git push an app to a server and have everything "just work" has always been a major driver of Rails' adoption, and everyone involved treats such a low-friction deployment experience as obviously worth preserving, even if developers ultimately move their app elsewhere. And that means being cognizant of resource consumption at every level in the stack.

Anyway, there have been numerous bumps in the road along the way, and I think I just hit one.

I just pushed what basically amounts to a vanilla Rails 7.1.3 app to Heroku and immediately saw this familiar error everywhere in my logs:

Error R14 (Memory quota exceeded)

It started literally minutes after deploying the app. The app was taking up about 520MB. What gives? I even remembered to avoid loading Rails' most memory-hungry component, Action Mailbox!

I spent 30 seconds going down the path of locally benchmarking my memory consumption, but then my experience dealing with stuff like this reminded me that Occam's razor should probably have me investigating default server configuration.

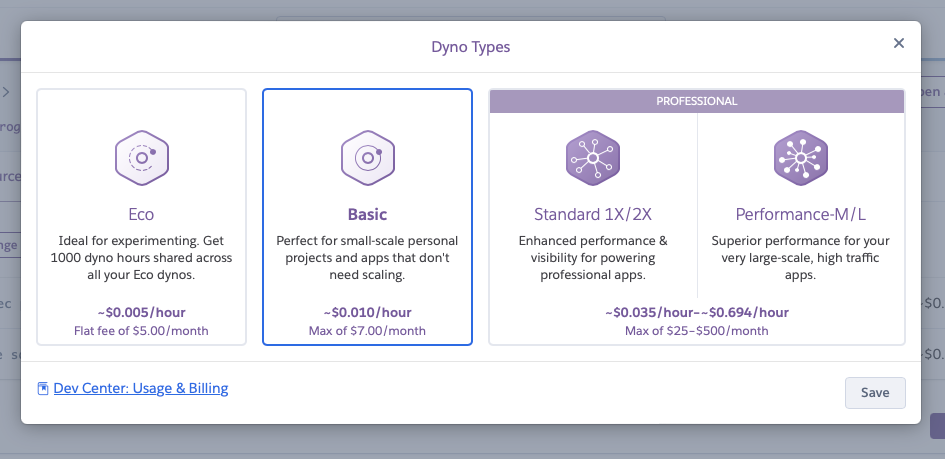

I had one of Heroku's new low-cost servers configured, with a "Basic" dyno to run the web app and another to run Solid Queue:

I had assumed this tier would probably be a 1 or maybe 2 core processor, but Heroku's docs don't explicitly mention the available processor and core counts.

So I fired up a one-off Basic dyno to inspect the environment by running a shell:

    # Remember: you can run a shell on a Heroku dyno!
    $ heroku run bash
    Running bash on ⬢ app... up, run.9833 (Basic)

    # Check the processor count:
    $ nproc
    8

Eight!? That's downright beefy! How many cores?

    $ cat /proc/cpuinfo | grep "cpu cores"
    cpu cores : 4
    cpu cores : 4
    cpu cores : 4
    cpu cores : 4
    cpu cores : 4
    cpu cores : 4
    cpu cores : 4
    cpu cores : 4

So 8 logical processors, each reporting that it sits in a 4-core package… not bad for 1¢ an hour! It also immediately explained why things weren't working.

"So why is such a generous processor configuration a problem?" one might ask.

The issue lies with how the preeminent Rails server, Puma, is configured by default. Look at this generated configuration in config/puma.rb:

    # Specifies that the worker count should equal the number of processors in production.
    if ENV["RAILS_ENV"] == "production"
      require "concurrent-ruby"
      worker_count = Integer(ENV.fetch("WEB_CONCURRENCY") { Concurrent.physical_processor_count })
      workers worker_count if worker_count > 1
    end

So unless you happen to set an environment variable WEB_CONCURRENCY to specify how many processes you want to run in production, Puma will default to forking your Rails app once for every physical processor on your system. Because each forked process ends up holding its own copy of much of the app's memory (copy-on-write sharing only helps so much), a default Puma configuration will fall over in environments that are CPU-rich and RAM-poor, as Heroku's Basic dynos apparently are.
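To make that lookup order concrete, here is a minimal sketch of the same decision in plain Ruby. The helper names `resolve_worker_count` and `cluster_mode?` are hypothetical (they are not part of Puma or Rails); `env` stands in for `ENV`, and `detected_processors` stands in for whatever `Concurrent.physical_processor_count` returns, so the snippet runs without any gems.

```ruby
# Hypothetical helper mirroring the lookup in the generated config/puma.rb.
# `env` stands in for ENV and `detected_processors` for the value returned
# by Concurrent.physical_processor_count.
def resolve_worker_count(env, detected_processors)
  # Hash#fetch only evaluates the block when the key is missing, so an
  # explicit WEB_CONCURRENCY always wins over the detected count.
  Integer(env.fetch("WEB_CONCURRENCY") { detected_processors })
end

# The generated config only calls `workers` when the count is above 1.
def cluster_mode?(worker_count)
  worker_count > 1
end

puts resolve_worker_count({}, 4)                           # no override: follows the hardware
puts resolve_worker_count({ "WEB_CONCURRENCY" => "2" }, 4) # explicit override wins
puts cluster_mode?(resolve_worker_count({ "WEB_CONCURRENCY" => "1" }, 4))
```

The takeaway is that with no override, the worker count is decided entirely by the machine the app lands on, not by anything in the app itself.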

In the same bash session as above, we can interrogate exactly what that physical processor count is by asking in irb:

    ~ $ irb
    irb(main):001> require "concurrent-ruby"
    => true
    irb(main):002> Concurrent.physical_processor_count
    => 4

So while nproc returned 8 processors, Concurrent.physical_processor_count is returning 4. Why? In truth, it doesn't matter, because an empty Rails 7.1 app running on Ruby 3.3 could easily consume 125MB. And multiplying 125 by 4 is all it takes to run up against the 512MB memory quota on Heroku's Basic dynos and start spitting out those R14 errors.
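For anyone who does care about the "Why?", the likely answer is that the two numbers measure different things: nproc counts logical processors (hardware threads), while a physical count only counts distinct cores. Below is a rough sketch of telling them apart from /proc/cpuinfo-style text. It is an illustration, not necessarily how concurrent-ruby computes its number; the helper names are hypothetical, and the sample text is made up and abbreviated rather than copied from the dyno.

```ruby
require "etc"

# Made-up, abbreviated /proc/cpuinfo excerpt (NOT the dyno's real output):
# two logical processors that share a single physical core.
SAMPLE_CPUINFO = <<~CPUINFO
  processor   : 0
  physical id : 0
  core id     : 0
  cpu cores   : 1

  processor   : 1
  physical id : 0
  core id     : 0
  cpu cores   : 1
CPUINFO

# Logical processors: one "processor" entry per hardware thread.
def logical_processors(cpuinfo)
  cpuinfo.scan(/^processor\s*:/).size
end

# Physical cores: unique (physical id, core id) pairs across all entries.
def physical_cores(cpuinfo)
  cpuinfo.split(/\n\s*\n/).filter_map { |entry|
    package = entry[/^physical id\s*:\s*(\d+)/, 1]
    core    = entry[/^core id\s*:\s*(\d+)/, 1]
    [package, core] if package && core
  }.uniq.size
end

puts logical_processors(SAMPLE_CPUINFO) # 2
puts physical_cores(SAMPLE_CPUINFO)     # 1

# Ruby's standard library reports the processors available to this process.
puts Etc.nprocessors
```

If the dyno's 8 logical processors are hardware threads on 4 cores, a count of 4 is exactly what a physical-core tally would produce.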

The solution

In this case, you can get all the HTTP throughput you probably need by increasing thread count (which won't so abruptly raise the floor on your app's memory consumption) as opposed to processes (which, as we've seen here, will). Since it's clear I had the headroom to run two workers, that's what I settled on. Without changing the code or the default configuration, you can dynamically specify the process count yourself, as mentioned above, by setting the WEB_CONCURRENCY environment variable, as I did with:

    $ heroku config:set WEB_CONCURRENCY=2
    Setting WEB_CONCURRENCY and restarting ⬢ app... done, v28
    WEB_CONCURRENCY: 2
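The "headroom" reasoning behind picking two workers is simple arithmetic, sketched below. All three helpers are hypothetical, and the inputs are assumptions: 125MB per worker is the figure quoted above for an empty app, 512MB is assumed as the Basic dyno quota, the 50% headroom is an arbitrary safety margin for growth after boot, and 5 threads per worker is just an illustrative value.

```ruby
# Back-of-the-envelope estimate: each Puma worker is a separate process,
# so the memory floor grows roughly linearly with the worker count.
def estimated_footprint_mb(workers:, per_worker_mb:)
  workers * per_worker_mb
end

# Largest worker count whose estimate fits in the quota after reserving
# `headroom` (a fraction of the quota) for growth. Never returns less than 1.
def max_workers(quota_mb:, per_worker_mb:, headroom: 0.5)
  budget = quota_mb * (1.0 - headroom)
  [(budget / per_worker_mb).floor, 1].max
end

# Requests the app can work on at once: workers times threads per worker.
def total_concurrency(workers:, threads:)
  workers * threads
end

QUOTA_MB      = 512 # assumption: substitute your dyno's actual memory limit
PER_WORKER_MB = 125 # figure quoted in the post for an empty Rails 7.1 app

puts estimated_footprint_mb(workers: 4, per_worker_mb: PER_WORKER_MB) # 500
puts max_workers(quota_mb: QUOTA_MB, per_worker_mb: PER_WORKER_MB)    # 2
puts total_concurrency(workers: 2, threads: 5)                        # 10
```

Threads multiply concurrency without adding another per-process memory floor, which is why they are the cheaper lever on a RAM-poor dyno.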

Of course, this is a "solution", but not much of a solution for users who don't have gobs of experience and institutional knowledge about all the moving parts involved. In order for new developers to have a better out-of-the-box experience with Rails and Heroku, hopefully some combination of Heroku, Rails Core, and Puma's maintainers can figure out how to mitigate this category of failure in the future.