The Startup Shell Game

…the answer usually depends on everything OTHER than whether you're good at your job.

This clip is from v30 of Breaking Change.

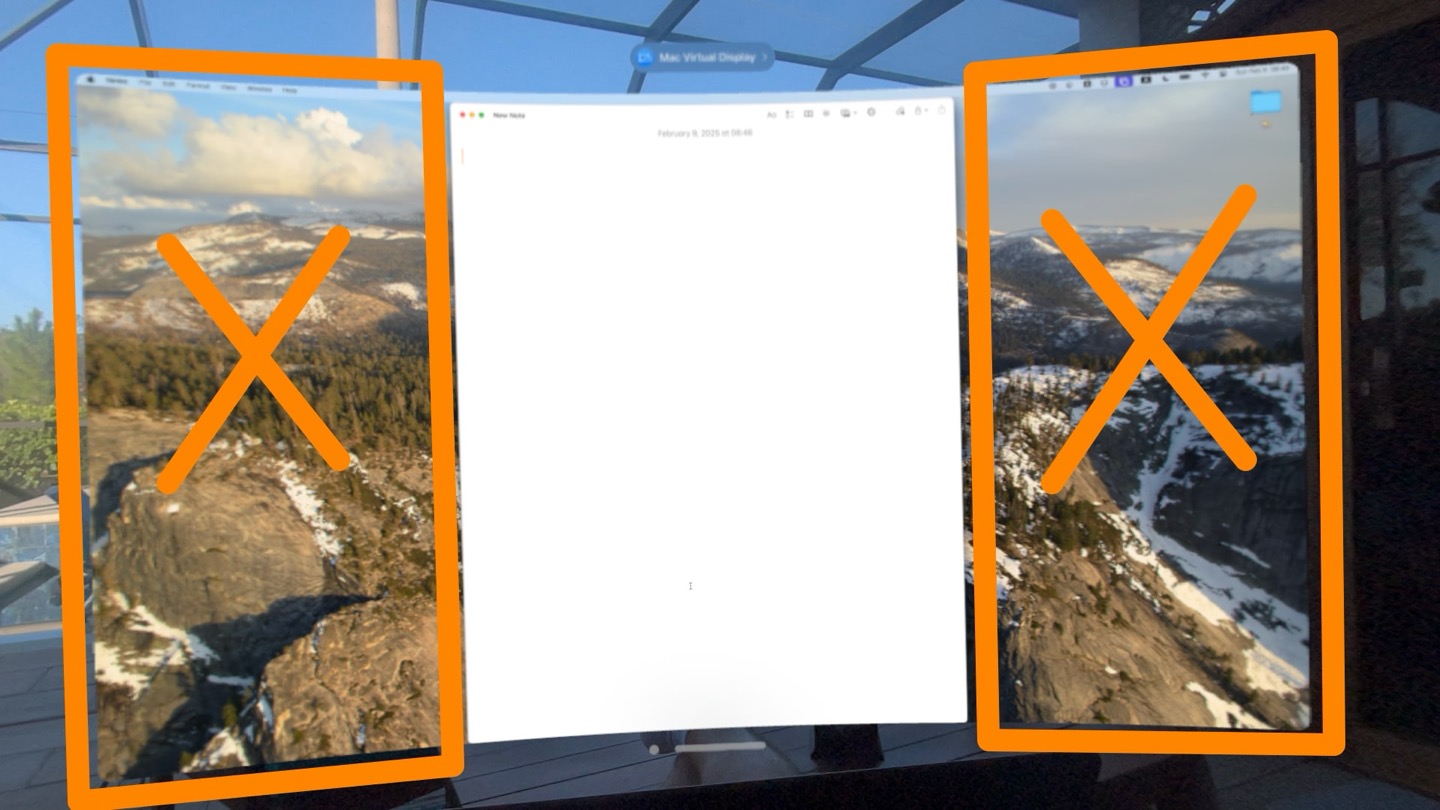

Ultra Narrow View

Vertical monitors for folks working on documents have been a thing for decades. Now that Apple Vision Pro supports an 8K-ish ultrawide screen orientation for Mac Virtual Display, I'd love to see custom aspect ratios that let you create only as big a Mac window as you need.

This is the first of what I hope will become a habit of posting long-form video excerpts from the podcast. This one comes from a section in v30 about DeepSeek, the ramifications it may have for OpenAI, and the extent to which it condemns Sam Altman's ideology on how to run a startup.


This is a copy of the Searls of Wisdom newsletter delivered to subscribers on February 9, 2025.

It's taken me a while to figure out what to write for you that would tie a bow around January 2025. My take on the cultural and political realignment we seem to be experiencing? A deep dive into the video workflows I've been developing as an outgrowth of my Breaking Change podcast? Reflections on the distinct misery of feeling like one's life has regressed in some way, and the counterproductive ways American men react to the sensation of going backwards?

None of those felt quite right.

So I sat on the newsletter and waited for inspiration to strike. Then, out of nowhere, an unextraordinary patch for a developer tool I don't use got merged and—through a convoluted series of coincidences—will likely stand as one of the biggest accomplishments of my life.

I'll explain what I mean by that, but first, here's a picture of my brother and me at brunch in a boat-themed restaurant literally named Boathouse, seated at a table built into an actual fucking boat:

What this patch has to do with me

If you're not a programmer, fear not: I don't intend to get too lost in the technical weeds here. That said, some amount of exposition is necessary to convey why the aforementioned pull request matters.

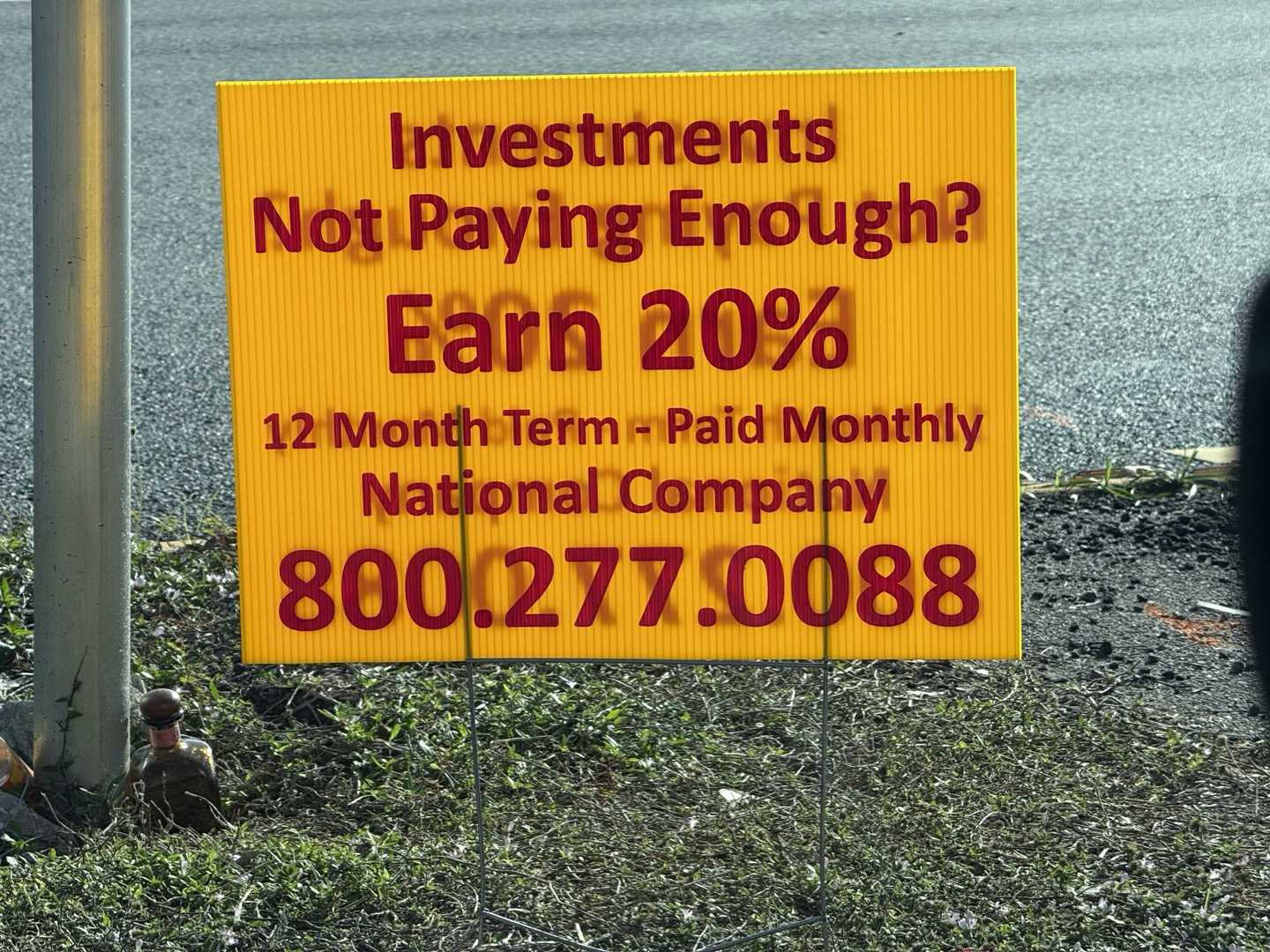

20%? Sign me up!

Why freak out about tariffs and the economy when random signs on the sides of Florida highways are able to offer such amazing investment returns?

Imagine the entrepreneur who started a UPS franchise 15 years ago with earnest hopes of connecting with the local community by offering services like private mailboxes and consultative shipping options.

Now imagine that person's life as a subcontractor of a subcontractor of Fulfillment by Amazon, bound by an ironclad franchising agreement.

The Baby Store

Why I didn't have kids, despite the fact that a lot of men seem weirdly OK with pretending they have zero regrets.

This clip is from v30 of Breaking Change.

Rough month for the tech CEO whose future fortunes depend on us all believing AI innovation requires hundreds of billions of dollars of server farms timkellogg.me/blog/2025/02/03/s1

So impressed by my friend Nadia—she was featured on the Today show this morning for her remarkable success building The StoryGraph, which millions of people use to find their next favorite book! today.com/video/meet-the-woman-behind-the-popular-book-app-the-storygraph-231154757691

If you're here for the spicy, good news: you're gettin' it spicy.

I am usually joking, but I mean it this time: I'm going to stop doing this podcast if you don't write in to podcast@searls.co. Give me proof of life, that's all I need. I won't even read it on air if you don't want me to!

If you're not bored yet, click the links:

- Becky did a reel of our twin birthday cruise

- Aaron's puns, ranked

- Fewer than 200 people bought Resident Evil on iPhone

- Apple Invites and GroupKit are gonna save us from Doodle

- Apple cancels the AR glasses you hadn't heard about

- Android XR is even more of a rip-off than Android was

- Vision Pro is getting Unreal Engine support

- WTF is DeepSeek

- My own declaration of competence

- I've started work on my first fuckthis.app, and it's already doing stuff

- Nintendo's Alarmo clock

- The Wild Robot is not as bad as it looks

- Star Trek Unification

- Persona 3 Reloaded

- Ninja Gaiden 2 Black

- Via Prem: Exit 8 and Platform 8

🌶️

Just a few days after launching my TOP SECRET syndication app with Bluesky support, I've written a new adapter for cross-posting to X. Updated the site's POSSE roundup accordingly: justin.searls.co/posse/