28 Allergens Not Detected

Sure this ice cream killed me, but think of all the allergens it didn't have!

Wow, Iran must be really pissed about them adding ads to WhatsApp to be asking their entire population to delete it theverge.com/politics/688875/iran-cutting-off-internet-israel-war

The vast majority of the discourse around the software industry and AI-based coding tools has fallen into one of these buckets:

- Executives want to lay everyone off!

- Nobody wants to hire juniors anymore!

- Product people are building (shitty) prototype apps without developers at all!

What isn't being covered is how many skilled developers are getting way more shit done, as Tom from GameTorch writes:

If you have software engineering skills right now, you can take any really annoying problem that you know could be automated but is too painful to even start, you can type up a few paragraphs in your favorite human text editor to describe your problem in a well-defined way, and then paste that shit into Cursor with o3 MAX pulled up and it will one shot the automation script in about 3 minutes. This gives you superpowers.

I've written a lot of commentary on posts covering the angles enumerated above, and much less about just how many fucking to-dos and rainy day list items I've managed to clear this year with the help of coding tools like ChatGPT, GitHub Copilot, and Cursor. Thanks to AI, stuff that's been clogging up my backlog for years was done quickly and correctly.

When I write code org-name/repo, I now have a script that finds the correct project directory, selects its preferred editor, and launches it with the appropriate environment loaded. When I write git pump, I finally have a script that'll pull, commit, and push in one shot (stopping for a Y/n confirmation only if the changes appear to be nontrivial). I've also finally implemented a comprehensive 3-2-1 backup strategy and scheduled it to run on our Macs each night, thanks to a script that rsyncs my and Becky's most important directories to a massive SSD RAID array, then to a local NAS, and finally to cloud storage.
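For the curious, here is a minimal Python sketch of what the "confirm only if nontrivial" part of something like git pump could look like. This is not the actual script: the 20-line threshold, the function names, and the default commit message are all assumptions made up for illustration. It leans on `git diff --cached --numstat`, which prints added and deleted line counts per staged file.

```python
import subprocess

# Hypothetical cutoff: staged changes touching more lines than this get a prompt.
TRIVIAL_LINE_LIMIT = 20


def is_nontrivial(numstat: str, limit: int = TRIVIAL_LINE_LIMIT) -> bool:
    """Decide whether `git diff --numstat` output looks like a big change."""
    total = 0
    for line in numstat.splitlines():
        parts = line.split("\t")
        if len(parts) < 3:
            continue  # skip blank or malformed lines
        added, deleted = parts[0], parts[1]
        if added == "-" or deleted == "-":
            return True  # git reports binary files as "-"; always ask about those
        total += int(added) + int(deleted)
    return total > limit


def git(*args: str) -> str:
    """Run a git command, raise if it fails, and return its stdout."""
    result = subprocess.run(
        ["git", *args], check=True, capture_output=True, text=True
    )
    return result.stdout


def pump(message: str = "Update") -> None:
    """Pull, commit, and push, pausing only when the change looks nontrivial."""
    git("pull", "--rebase", "--autostash")
    git("add", "--all")
    numstat = git("diff", "--cached", "--numstat")
    if not numstat.strip():
        print("Nothing to commit.")
        return
    if is_nontrivial(numstat):
        answer = input("Changes look nontrivial. Continue? [Y/n] ")
        if answer.strip().lower() == "n":
            print("Aborted; changes remain staged.")
            return
    git("commit", "-m", message)
    git("push")
```

Git runs any executable named `git-pump` found on the PATH as `git pump`, so a small wrapper file that calls `pump()` would be one way to wire this up.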

Each of these was a thing I'd meant to get around to for years but never did, because they were never the most important thing to do. But I could set an agent to work on each of them while I checked my mail or whatever and only spend 20 or 30 minutes of my own time to put the finishing touches on them. All without having to remember arcane shell scripting incantations (since that's outside my wheelhouse).

For now, I only really feel this supercharged when it comes to one-off scripts and the leaf nodes of my applications, but even if that's all AI tools are ever any good for, that's still a fucking lot of stuff. Especially as a guy who used his one chance to give a keynote at RailsConf to exhort developers to maximize the number of leaf nodes in their applications.

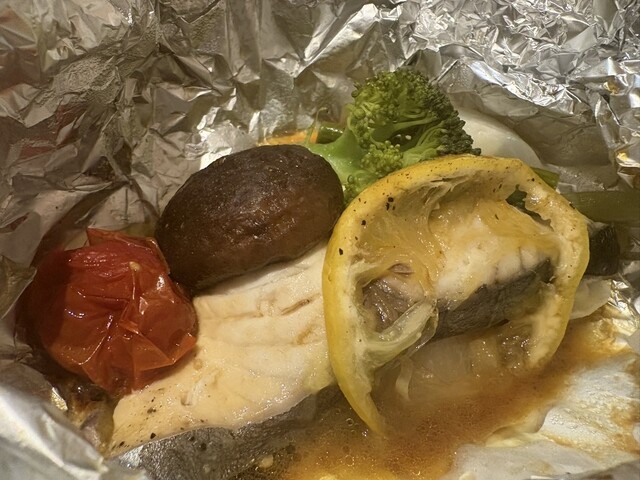

Tabelogged: 新潟古町 而今

Tabelogged: シャモニー 上大川前店

Similar to Kojima, I had to adjust the runtime and prevalence of swears in the Breaking Change podcast because they weren't pissing enough people off videogameschronicle.com/news/composer-says-kojima-changed-death-stranding-2-because-it-wasnt-polarizing-enough/

This week's Vergecast did a great job summarizing the current state of affairs for web publishers grappling with the more-rapidly-than-they'd-hoped impending arrival of "Google Zero." Don't know what Google Zero is? Basically, it describes a seemingly-inevitable future where the number of times Google Search links out to domains not owned by Google asymptotically approaches zero. This is bad news for publishers, who depend on Google for a huge proportion of their traffic (and they depend on that traffic for making money off display ads).

The whole segment is a good primer on where things stand:

My recollection is that everyone could see the writing on the wall as early as the mid-2010s when Google introduced "Featured Snippets" and other iterations of instant answers that obviated the need for users to click links. Publishers had a decade to think up some other way to make money since then, but appear to have done approximately nothing to prepare for a world where their traffic doesn't come from Google.

To the SEO industry, such a world doesn't make sense—you can increase your PageRank one-hundredfold and one hundred times zero is still zero.

To younger workers in publishing, a world without Google is almost impossible to imagine, as it has come to dominate almost every stage of advertising and distribution.

To old-school publishers who can remember what paper feels like, they only recently reached the end of a 20-year journey to migrate from a paid subscription relationship with readers to a free ad-supported situationship with tech platforms that consider their precious content an undifferentiated commodity. Publishers would love to go back, but the world has changed—nobody wants to pay for articles written by people they don't know.

The only people who are thriving are those who developed a patronage followership based on affinity for their individual identities. They've got a Patreon or a Substack and some of the most well-known journalists are making a 5-20x multiple of whatever their salary at Vox or GameSpot was. But if your income depends on the web publishing dynamic as it (precariously) exists today and you didn't spend the last decade making a name for yourself, you are well and truly fucked. Alas.

None of this is new if you read the news about the news.

What is new is that Google is answering more and more queries with AI summaries (and soon, one-shot web apps generated on the fly). As a result, the transition to Google Zero appears to be happening much more quickly than people expected/feared. Despite reporting on this eventuality for a decade, web publishers appear to have been caught flat-footed and have tended to respond with some combination of interminable layoffs and hopeless doom-saying.

This quote from Hemingway's The Sun Also Rises never gets old and applies well here:

"How did you go bankrupt?" Bill asked.

"Two ways," Mike said. "Gradually and then suddenly."

Fortunately, the monetization strategy for justin.searls.co is immune to these pressures, as I'm happy to do all the shit I do for free for some reason.

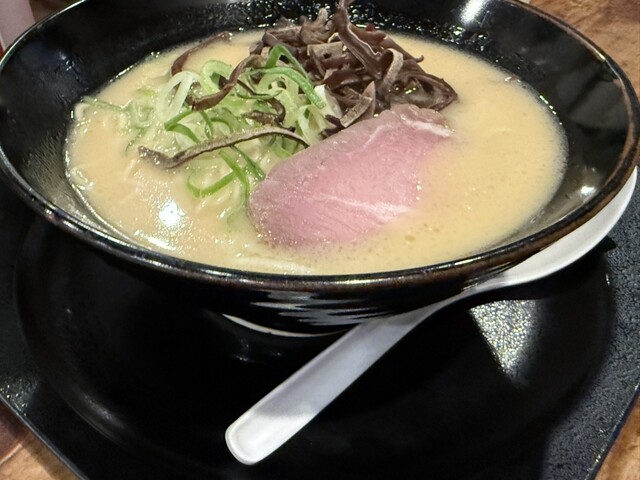

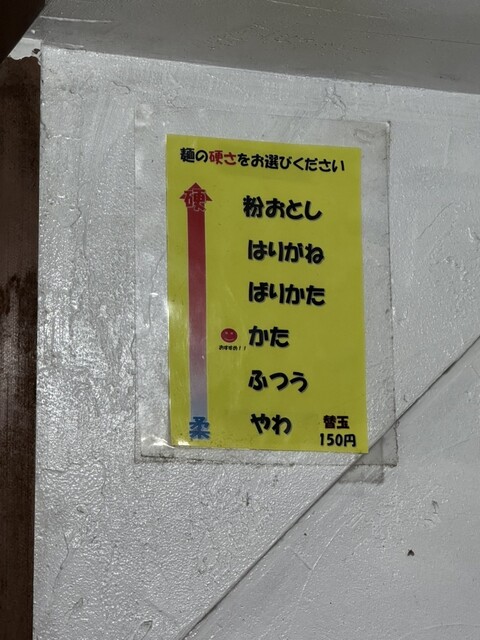

Tabelogged: らーめん 一空

Tabelogged: 華音

Tabelogged: ニイガタ ピッツェリア ベントエマーレ

Tabelogged: 五郎 万代店

Death to roller bags

Nearly all of Japan's overtourism woes could be solved overnight if the nation simply outlawed roller bags.

Tabelogged: 鳥焼処 鳥ぼん 本店

Well, I suppose this is one way to fix America's dwindling college enrollment problem:

Across America's community colleges and universities, sophisticated criminal networks are using AI to deploy thousands of "synthetic" or "ghost" students—sometimes in the dead of night—to attack colleges. The hordes are cramming themselves into registration portals to enroll and illegally apply for financial aid. The ghost students then occupy seats meant for real students—and have even resorted to handing in homework just to hold out long enough to siphon millions in financial aid before disappearing.

Bonus points if the chatbots are men, at least.

Jerod and I sat down after WWDC to rifle through Apple's various announcements and dish our takes. As usual, we found a lot to like and (IMNSHO) did a better job than other commentators in ignoring the idle chatter on social media that tends to dominate WWDC discourse in favor of the more meaningful changes the keynote heralds.

You can find it on YouTube:

Appearing on: The Changelog

Recorded on: 2025-06-13

Original URL: https://changelog.com/friends/97

Comments? Questions? Suggestion of a podcast I should guest on? podcast@searls.co

I'm edge cases all the way down

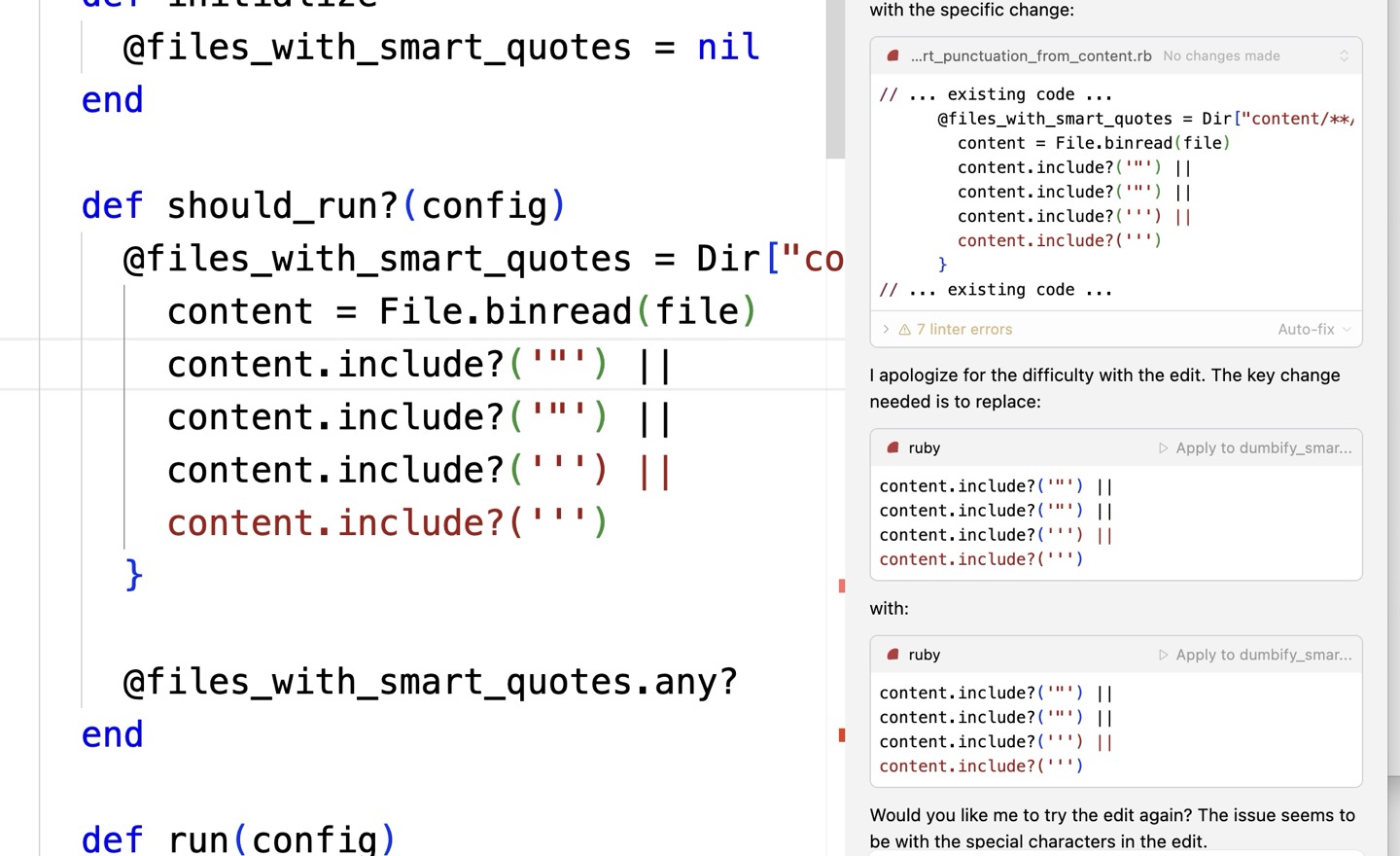

I feel like everything I try to do is so weird that when it doesn't work, I'm very often the first person to run into the bugs I discover, and I just ran into a pretty good example. Pretty sure Cursor ships with system prompts designed to prevent it from inserting smart quotes into code listings, because that would normally be a bug… but it also means the agent is constitutionally incapable of writing a script that searches for and replaces smart quotes.

It has been confused about why it can't type smart quotes for quite a while now. Neat.
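One possible workaround, sketched in Python: write the smart quotes as Unicode escapes, so the script never contains a literal curly quote. This is an illustration only, not what the agent produced, and the function name `straighten_quotes` is made up for the example.

```python
# Typographic quotes mapped to their ASCII equivalents. The keys are written
# as \u escapes so no literal smart quote ever appears in the source file.
SMART_QUOTES = {
    "\u2018": "'",  # left single quotation mark
    "\u2019": "'",  # right single quotation mark (also used as an apostrophe)
    "\u201c": '"',  # left double quotation mark
    "\u201d": '"',  # right double quotation mark
}

# str.translate wants a table keyed by code point; maketrans builds it.
_TABLE = str.maketrans(SMART_QUOTES)


def straighten_quotes(text: str) -> str:
    """Return text with curly quotes replaced by straight ASCII quotes."""
    return text.translate(_TABLE)
```

Anything not in the table (including ordinary straight quotes) passes through untouched, so running it twice is harmless.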

Been a fun couple of weeks touring more remote parts of Japan, and I'm happy to report I've now stayed at least one night in 46 of Japan's 47 prefectures. I'm saving the last, Hokkaido, for next year. Maybe for RubyKaigi? justin.searls.co/posts/all-the-pretty-prefectures/

In 2011, the same month Todd and I decided to start Test Double, Steve Jobs had recently died, and we both happened to watch his incredible 2005 Stanford commencement speech. Among the flurry of remembrances and articles being posted at the time, the video of this speech in particular broke through and became the lodestone for those moved by his passing.

The humble "just three stories" structure, the ephemera described in Isaacson's book, and the folklore about Steve's brooding in the run-up to the speech became almost as powerful as his actual words. The fact that Jobs, the ruthlessly focused product visionary and unflinching pitchman, was himself incredibly nervous about this speech might be the most humanizing thing any of us have ever heard about him.

Well, it's been twenty years, and the Steve Jobs Archive has written something of a coda on it. They've released the e-mails Steve wrote to himself in lieu of proper notes (perhaps the second-most humanizing thing), and they've spruced up and remastered the video of the speech itself on YouTube.

Looking through his e-mails, I found I actually prefer this draft phrasing on the relieving clarity of our impending demise:

The most important thing I've ever encountered to help me make big choices is to remember that I'll be dead soon.

In 2011, Todd and I ran out of good reasons not to take the leap and do what we could to make some small difference in how people wrote software. In 2025, I believe we're now at an inflection point that we haven't seen since then. If you can see a path forward to meet this moment and make a meaningful impact, do it. Don't worry, you'll be dead soon.

I've never regretted failing to succeed; I've only regretted failing to try.