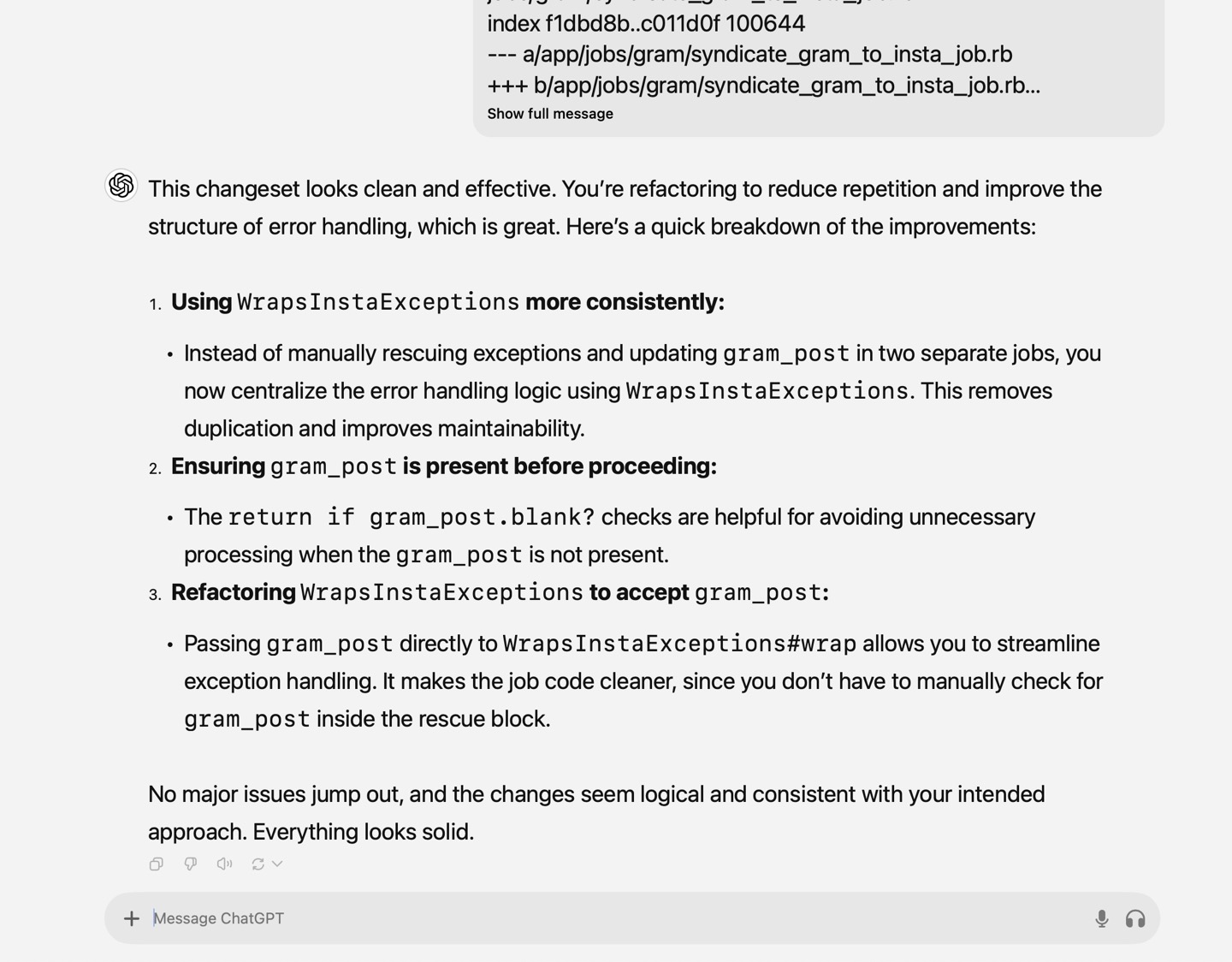

I hate code review less now

I've hated the culture of asynchronous code review for years, especially as GitHub's popularity and its pull request workflow encouraged slow, uninformed, low-empathy, bureaucratic processes for addressing problems that would be better solved by higher-bandwidth collaboration between team members.

That said, as someone who's spent the last 9 months building an app alone, I've really enjoyed having GPT-4o as my "pair". It's still too slow: I get bored and tab away to check Mail or Messages. But instead of waiting hours for feedback, I'm waiting literal seconds. There's also zero ego, politics, or posturing. And while it does hallucinate bullshit, there's far less of it than one can expect from bleary-eyed developers squinting at the GitHub web UI looking for a way to score points. And yes, I sometimes have to correct its corrections, but it almost always catches minor oversights that I (and my linter) would have missed.

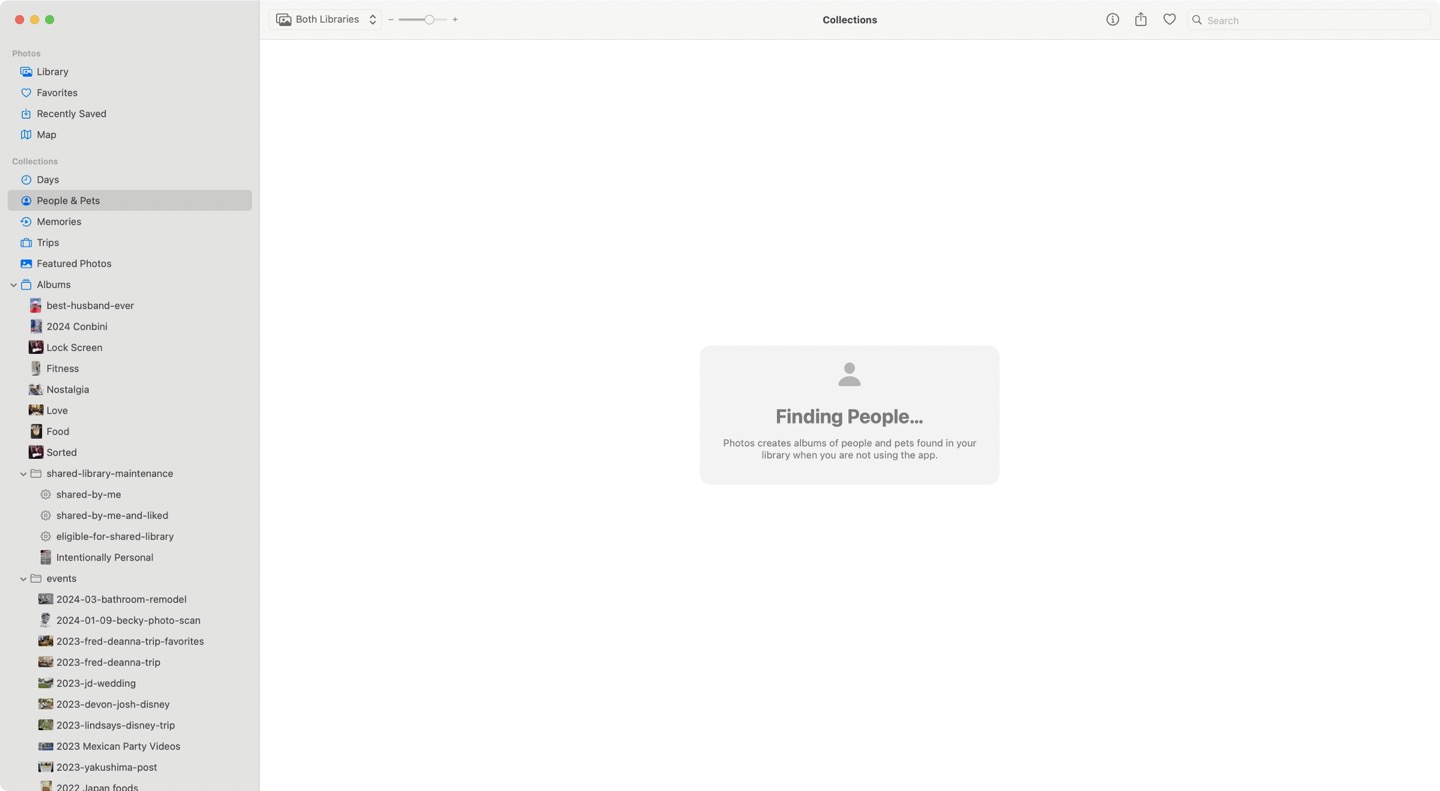

TIRED: Spicy autocomplete in your IDE

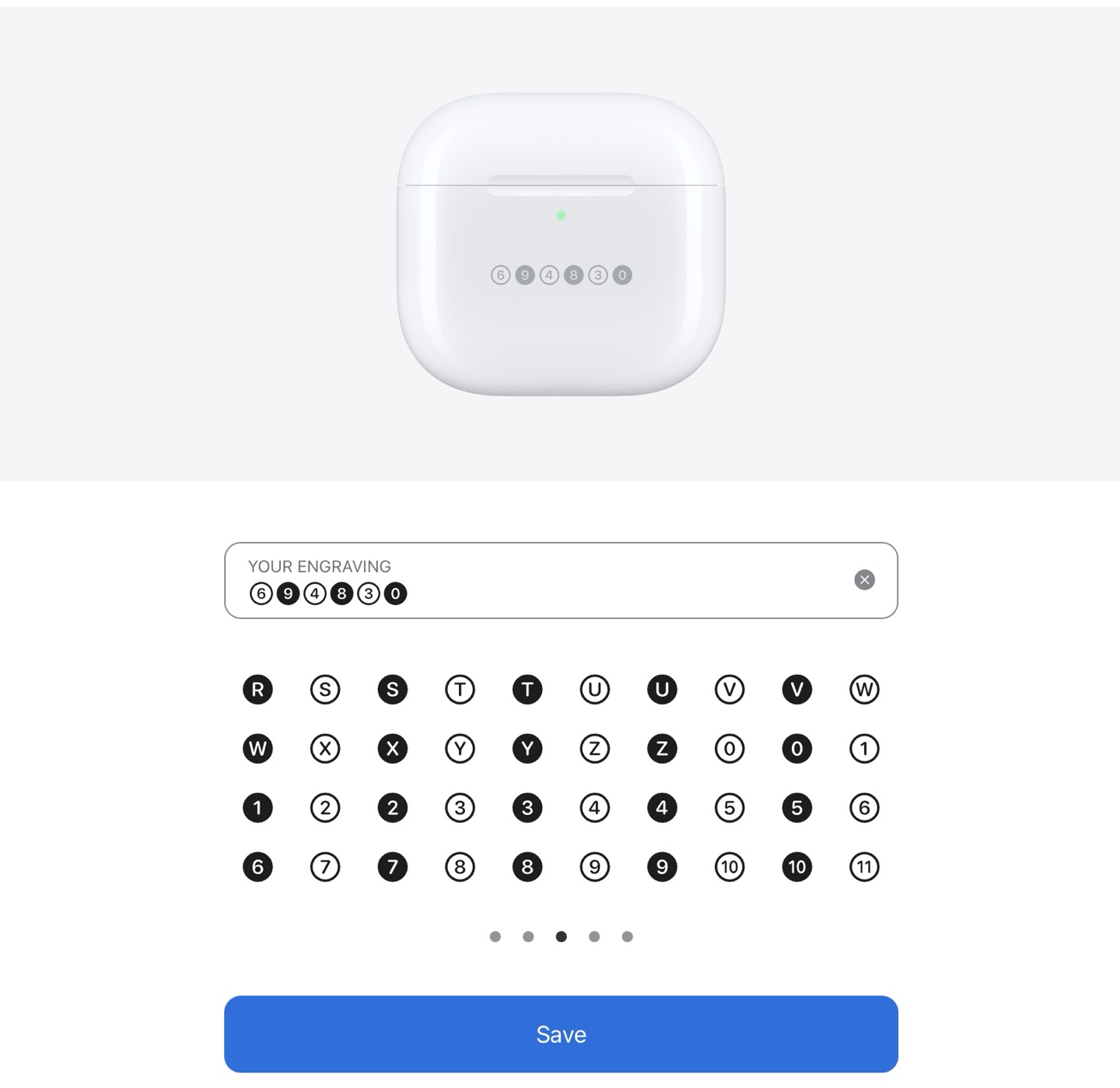

WIRED: This shortcut that pipes `git diff` to the ChatGPT Mac app and asks it to critique the code like Justin Searls would

Give it a try. It's another reason that I, for one, welcome our LLM underlords.