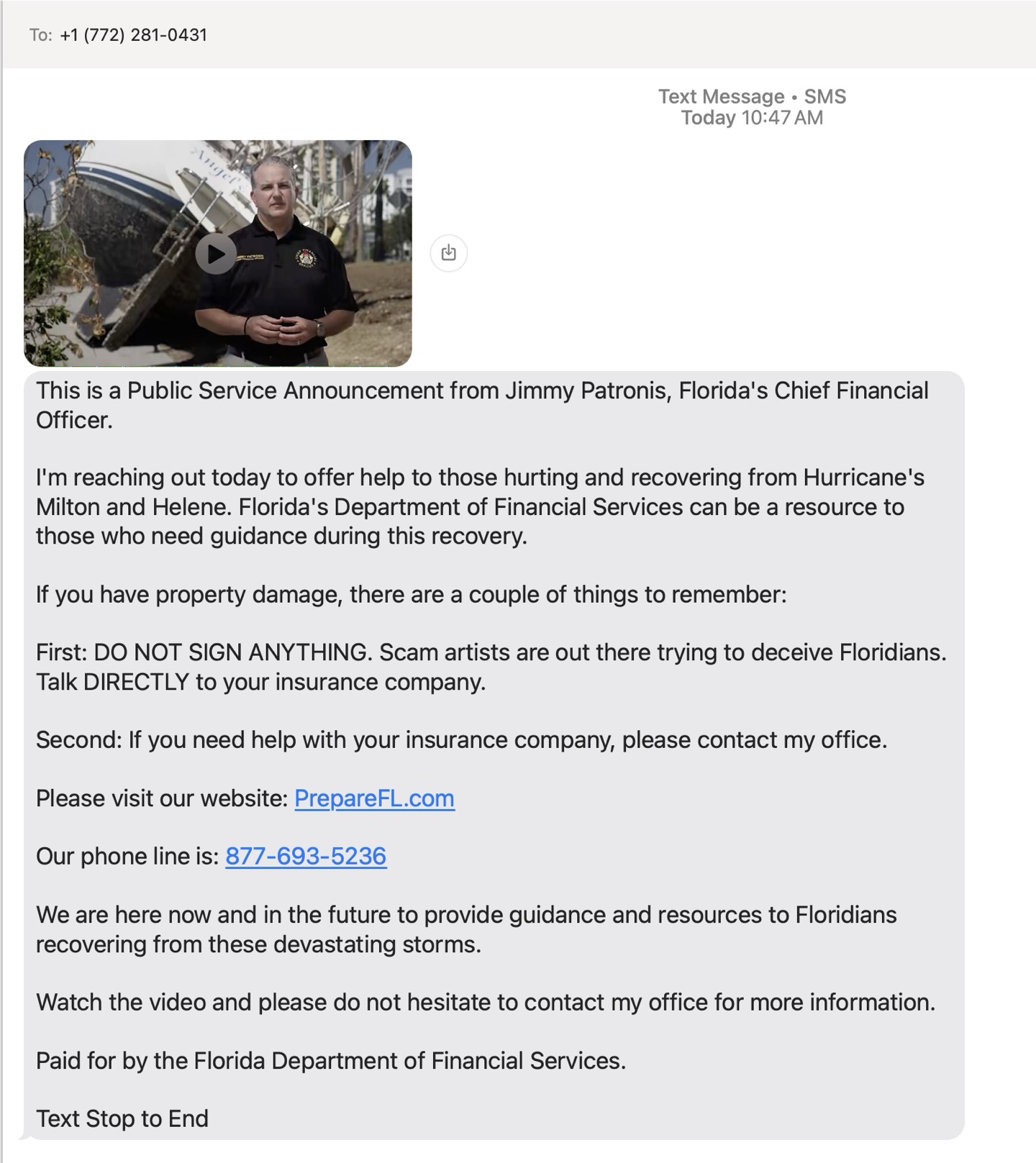

Extremely Legitimate State Government Guy Here Totally Not A Scam Reply STOP to Block

One of the most bizarre and frustrating things about life in Florida is that the state government has decided to eschew official .gov domains in favor of a random smattering of .com domains, for seemingly no other reason than appearing pro-business. Or maybe anti-government? Regardless, it definitely doesn't make it easier to help constituents avoid scams.

Here's what I had to do to figure out whether this text was legitimate:

- Go to fl.gov, which redirects to www.myflorida.com

- Click to see the list of state agencies, which takes you back to dos.fl.gov and lists the Department of Financial Services' homepage as www.myfloridacfo.com

- That homepage indicates Florida really has a "Chief Financial Officer" role (are Florida politicians just LARPing at this point?), that the person holding it matches this guy's name, and that it lists a phone number matching the one in the text message

- Browse around for storm-related pages and find this page which references (but does not hyperlink to) PrepareFL.com

- Visit PrepareFL.com only to realize it redirects back to the previous page

I really wish this was an isolated incident, but there are so many public-private partnerships and privatized services in the state that it's really hard to tell when you're dealing with local and state government and when you're being scammed or phished.

Want to file a new LLC? You'll want to do that at efile.sunbiz.org. Receive a tollbooth fine from the Department of Transportation? Just punch in your credit card over at www.sunpass.com. Need to amend your Beneficial Ownership Information to comply with FinCEN? Just respond to an e-mail from MyFloridaCorporateFilings.com and, oh wait, never mind, that one's a scam.

For fuck's sake. Why can't y'all be normal?

Can't say I'm surprised to learn it's hard to keep a professional snowboarder away from high-quality powder thedailybeast.com/ex-olympic-snowboarder-ryan-wedding-flees-after-hes-charged-in-murderous-drug-trafficking-scheme/

This version's pun segment goes places

Aaron's reaction to my reading and ranking of his pun submission for the latest version of the Breaking Change podcast

Welcome to this podcast which, by now, you have probably decided you either listen to or don't listen to! And if you don't listen to it, one wonders why you are reading this.

Remember to write in at podcast@searls.co with suggestions for news stories and whatever you'd like me to talk about. Please keep it PG-rated or NC-17 rated. I want nothing in between.

Family-friendly and/or sexually explicit links follow:

I've had a bunch of friends and colleagues ask for my advice on how to best learn Japanese this year, and since (1) the last time I started learning it was over 20 years ago and (2) Tofugu's entire business (via Wanikani) is teaching it to people efficiently, my best advice is to just start with their guide: tofugu.com/learn-japanese/

Fall fashions

New brand of T-shirt (Bella Canvas) for the uniform. Fresh colors to mark the start of what's next.

When we lived in Columbus, Junko and I would meet weekly in person for Japanese conversation practice. She's an awesome, fascinating person, but she also taught me a TON of practical Japanese and wasn't afraid to correct me. She just started giving remote lessons. Strong recommend: popa-japanese-lessons.mystrikingly.com/

Heaven:

- Groceries are delivered by Amazon

- Social media is run by Facebook

- Phones are made by Apple

- Search is indexed by Google

- Games are published by Microsoft

Hell:

- Groceries are delivered by Microsoft

- Social media is run by Apple

- Phones are made by Facebook

- Search is indexed by Amazon

- Games are published by Google

If you're curious why I decided to retire from public speaking last week, or interested to learn what might have been driving the handful of people who were angry about it, sign up for this month's Searls of Wisdom newsletter! justin.searls.co/newsletter/

Why I retired from speaking

This is a copy of the Searls of Wisdom newsletter delivered to subscribers on October 12, 2024.

Hey everyone, have a good September?

Apologies, as most of my top-of-mind thoughts are hurricane-adjacent as I write this:

- That we decided to escape the storm by driving from Orlando to Savannah on Wednesday morning

- That I spent Wednesday night tossing and turning in bed after Milton made landfall, wondering whether I'd be more upset if there was significant damage to the house (and with it, the hassle of months of insurance claims and repairs) or if there was zero impact at all (rendering my 10 hours in the car an unnecessary hedge)

- That, in college, I rented a house on Milton Street we all called "The Milton", and how disappointed I am that none of Orlando's local news affiliates thought to call me to discuss this fascinating human interest story

- That our house is absolutely fine. Didn't even lose power. And my predominant emotional reaction is, predictably, to feel like the drive was a waste of time

Anyway, that's October stuff. And I'm not here to talk about October stuff, because Searls of Wisdom is a publication that happens in arrears. It takes a full month for these insights to coalesce and maturate in the nacre of my self-indulged mind.

So, let's talk about September stuff.

The one thing I'll remember about September 2024 is that it was the month I gave my final conference presentation. After 15 years of speaking at user groups and software conferences, I've decided to hang up the presenter remote. End of an era.

Here's a pic of me and my friends Aaron and Eileen at the RailsConf: World Edition afterparty:

It's been strange developing so many impactful friendships over dozens of seasonal pseudo-vacations sprinkled sporadically throughout my adult life. I've rarely ever visited these friends where they live, or met their families, or seen how they operate outside the predictable plot beats of a conference event. Each relationship a vignette of awkward run-ins at baggage claim and hotel lobbies. Strained catch-ups at noisy speaker dinners and sponsor parties. Warm greetings crossing paths in convention center hallways. Hushed critiques shared from the back of other people's sessions.

I can happily live without attending another conference. But will that mean living without most of these friendships, too?

Yeah, probably.

Below, I'm going to discuss my decision to announce my retirement from public speaking, how people reacted to it, and what the resulting dissonance can tell us about weighing loss aversion against opportunity cost.

Great blog post about how to build a Ruby LSP add-on that I'm totally linking to because it uses my Standard add-on as a case study. railsatscale.com/2024-10-03-the-ruby-lsp-addon-system/

Get ready for a three-hour-plus Breaking Change spectacular! Why is it special? I'm not going to tell you. You'll just have to listen.

Remember, money doesn't change hands when you consume this Content™, but that doesn't make it free! In exchange for downloading this MP3, the license requires you to write in to podcast@searls.co at least once every three episodes. Some of y'all are past due, and I know where to find you.

Want URLs? I got URLs:

Everybody needs a hobby.

Maybe I'll start posting more photos.

gj everyone

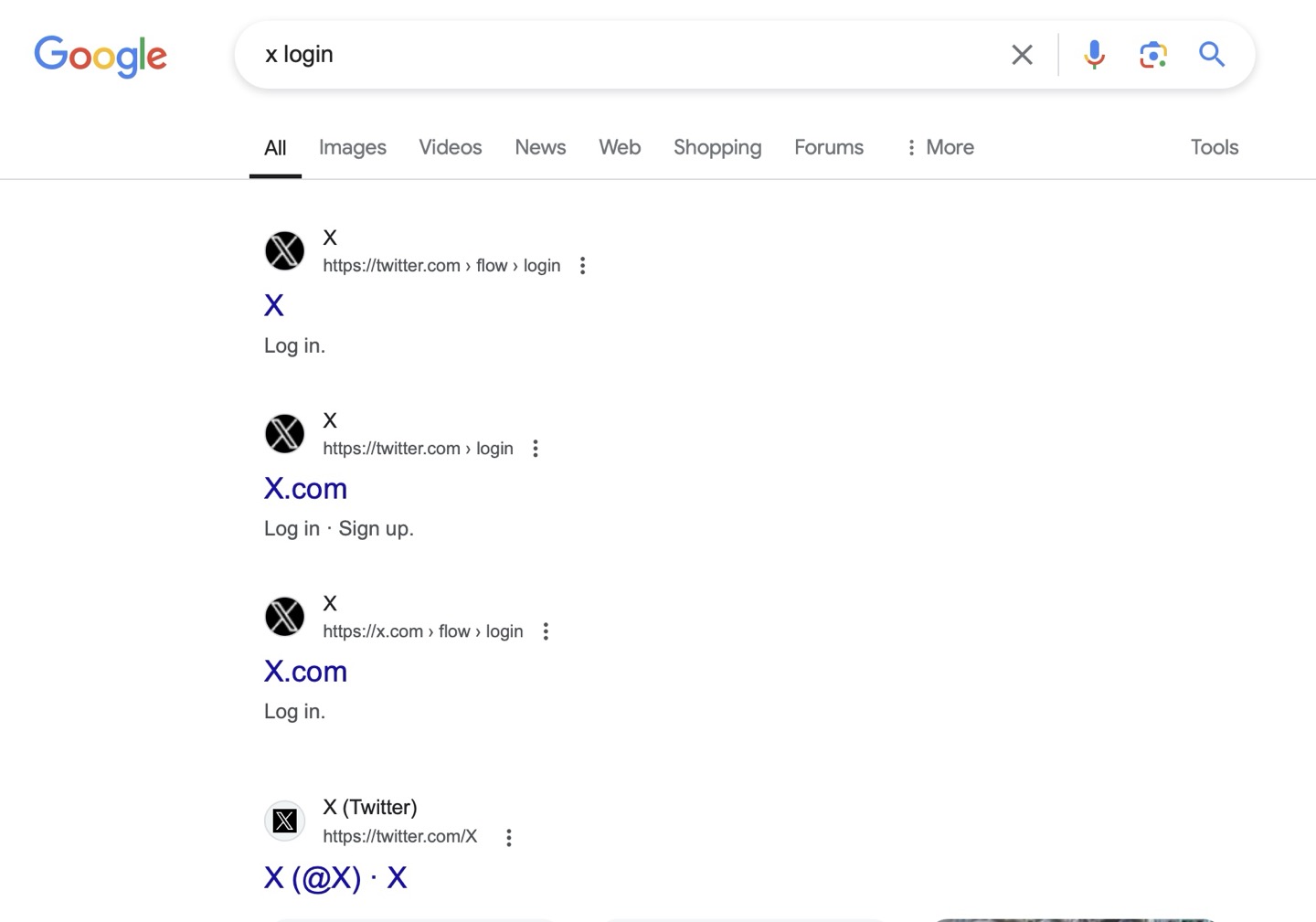

X marks the spot on this SEO.

Are Apple Vision personas… people?

This is some real snake-eating-its-own-tail shit by Apple Photos. What the hell am I supposed to click in order to not screw up its training of Aaron's face?