We are Rainbow

These gummy candies are the first rainbow-themed things I've seen so far this Pride Month.

(I bought them. They were good 🌈)

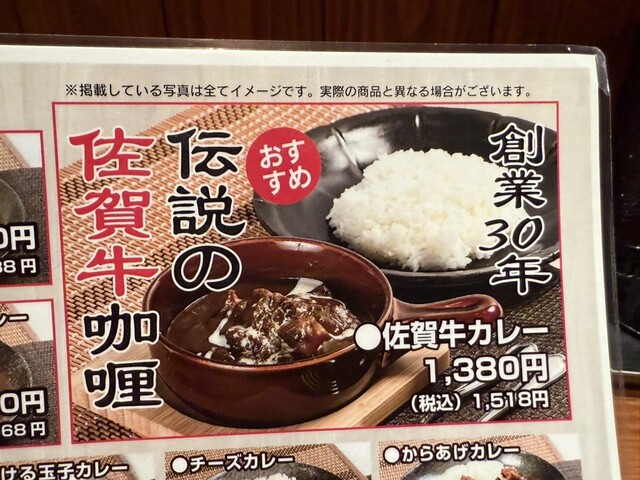

Tabelogged: イエロースパイス

If you've been following me for the last few years, you've heard all about my move to Orlando* and how great I think it is. If, for whatever reason, this has engendered a desire in you to join me in paradise, now's your chance. An actually great house has hit the market in an area where high-quality inventory is exceptionally limited.

Beautiful house. Gated community. Golf course. Lake access. Disney World.

How else do I know this house is good? Because my brother lives there! He's poured his heart and soul into modernizing and updating it since he moved to Orlando in 2022, and it really shows. I suspect it won't sit on the market very long, so if you're interested you should go do the thing and click through to Zillow and then contact the realtor.

As our good friend/realtor Ken Pozek puts it in his incredible overview video, this house comes with everything except compromises†:

* Specifically, the Disney World-adjacent part of Orlando

† Okay, one compromise: you'll have to put up with living near me.

Tabelogged: とんかつ とんき

Tabelogged: らーめん矢吹 本店

Tabelogged: 新橋立呑処 へそ 静岡1号店

Video of this episode is up on YouTube:

Spoiler alert: I'm in the same country as I was for v37, but this time from a different nondescript business hotel. Also: I have good personal news! And, as usual, bad news news. I don't get to pick the headlines though, I just read them.

This episode comes with a homework assignment. First, watch Apple's keynote at 10 AM Pacific on June 9th. Second, e-mail podcast@searls.co with all your takes. I'd love your help figuring out where my head should be at when I show up on the Changelog next week.

And now, fewer links than usual:

Tabelogged: 静岡 四川飯店

Tabelogged: ななや 静岡店

Ever since Poolside's (now Poolsuite) iOS app slowly fell apart over the last few years, summer hasn't felt like summer. Out of nowhere, a brand new rewrite is out today, and it's a screamer. Never been more excited to float in a pool with a cocktail. apps.apple.com/us/app/poolsuite-fm/id1514817810

I mean no offense to Addy here, but isn't most of this advice banal and obvious? How much of "prompt engineering" is just basement-dwelling incels being told they finally need to learn how to communicate effectively? addyo.substack.com/p/the-prompt-engineering-playbook-for

Tabelogged: 石松餃子 アスティ静岡店

Tabelogged: がブリチキン。 草薙駅前店

Tabelogged: 磯魚・イセエビ料理 ふる里

My wish for iOS 26 is that, in preparation for the 20th anniversary all-glass iPhone, the new UI design will pave the way for system-wide pass-through video (as in visionOS) so that it feels like your content is the device. No more static background wallpaper. Truly transparent-seeming.

This mode probably won't debut until iPhone 20, however, since it will depend on a higher baseline of camera and sensor hardware (as Vision Pro did).

It's been six months since I posted my eulogy for dad. Just re-read it and cried three times. justin.searls.co/mails/2024-12/