Home Sweet Home

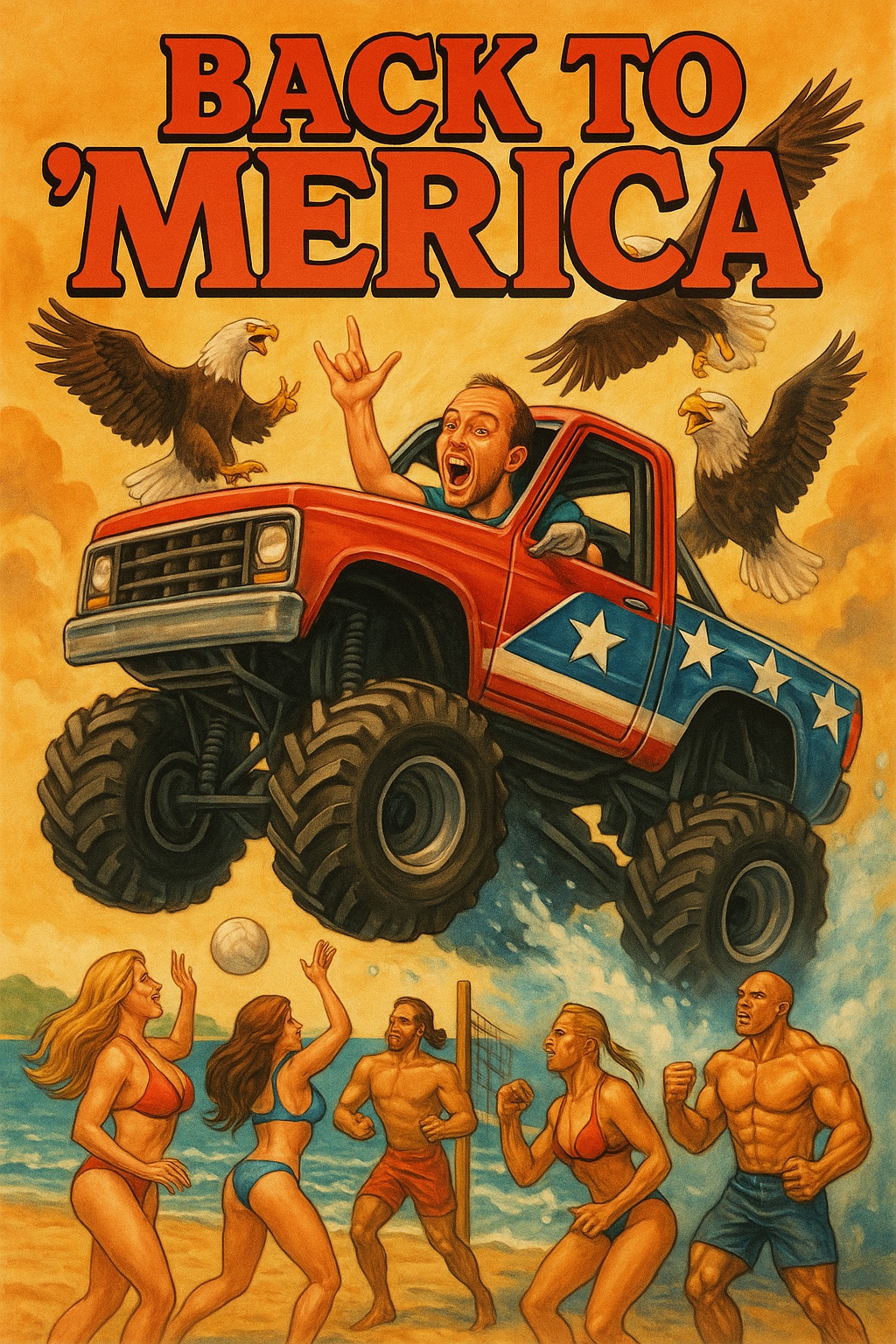

What my Japanese friends imagined when I told them I was headed back to Florida

The T-Shirts I Buy

I get asked from time to time about the t-shirts I wear every day, so I figured it might save time to document it here.

The correct answer is, "whatever the cheapest blank tri-blend crew-neck is." The "tri" refers to a mix of three fabrics: cotton, polyester, and rayon. The brand you buy doesn't really matter, since they're all going to be pretty much the same: cheap, lightweight, quick-drying, odor-resistant, and surprisingly nice on the skin for the price. This type of shirt was popularized by the American Apparel Track Shirt, but that company went to shit at some point and I haven't bothered with any of its post-post-bankruptcy wares.

I maintain a roster of 7 active shirts that I rotate daily and wash weekly. Every 6 months I replace all of them, so I buy 14 at a time and only need to order once a year. I always get them from Blank Apparel, because they don't print bullshit logos on anything and charge near-wholesale prices. I can usually load up on a year's worth of shirts for just over $100.

I can vouch for these two specific models:

The Next Level shirts feel slightly nicer on day one, but they also wear faster and will feel a little scratchy after three months of daily use. The Bella+Canvas ones seem to hold up a bit better. But, honestly, who cares. The whole point is that clothes don't matter and people will get used to anything after a couple of days. They're cheap and cover my nipples, so mission accomplished.

Tabelogged: 熟成和牛ステーキグリルド エイジング・ビーフ 横浜店

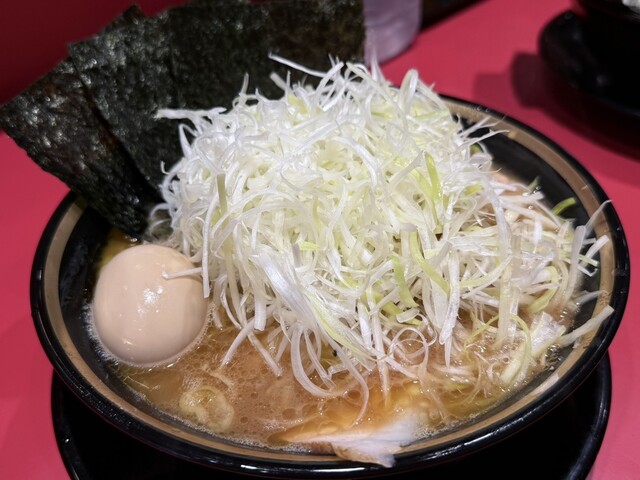

Tabelogged: ラーメン 環2家 川崎店

Tabelogged: 伊太利亜のじぇらぁとや

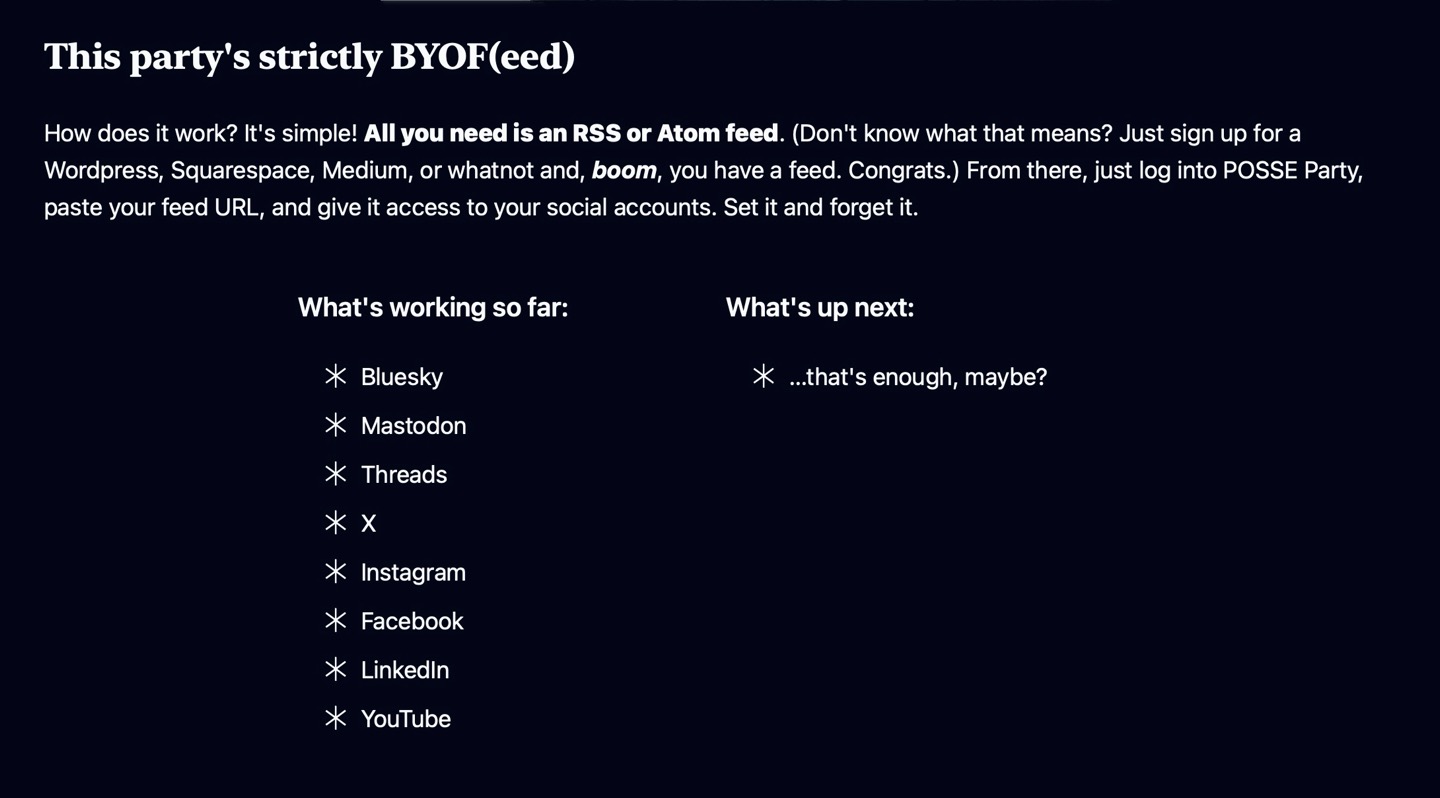

Possy's been busy

Earlier this year, I announced I was working on a Rails app called POSSE Party, which syndicates a website's content to a variety of social platforms simply by reading its RSS or Atom feed.
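POSSE Party is a Rails app and its code isn't shown here, so what follows is only a minimal Python sketch of the first step that feed-driven syndication implies: reading a feed and working out which entries haven't been posted yet. It assumes the feed is either Atom or RSS 2.0 and that the app remembers the IDs of entries it has already syndicated. `FeedEntry`, `parse_feed`, and `unsyndicated` are hypothetical names, not POSSE Party's actual API.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from dataclasses import dataclass

ATOM = "{http://www.w3.org/2005/Atom}"  # Atom's XML namespace, as ElementTree spells it


@dataclass(frozen=True)
class FeedEntry:
    uid: str    # Atom <id> or RSS <guid>, falling back to the link
    title: str
    url: str


def _atom_link(entry: ET.Element) -> str:
    """Return the entry's alternate (human-facing) link; a missing rel means alternate."""
    for link in entry.findall(f"{ATOM}link"):
        if link.get("rel", "alternate") == "alternate":
            return link.get("href", "")
    return ""


def parse_feed(xml_text: str) -> list[FeedEntry]:
    """Extract entries from an Atom or RSS 2.0 document."""
    root = ET.fromstring(xml_text)
    entries: list[FeedEntry] = []
    if root.tag == f"{ATOM}feed":
        for entry in root.findall(f"{ATOM}entry"):
            url = _atom_link(entry)
            entries.append(FeedEntry(
                uid=entry.findtext(f"{ATOM}id") or url,
                title=entry.findtext(f"{ATOM}title") or "",
                url=url,
            ))
    else:  # assume RSS 2.0: <rss><channel><item>...</item></channel></rss>
        for item in root.iterfind("channel/item"):
            url = item.findtext("link") or ""
            entries.append(FeedEntry(
                uid=item.findtext("guid") or url,
                title=item.findtext("title") or "",
                url=url,
            ))
    return entries


def unsyndicated(entries: list[FeedEntry], already_posted: set[str]) -> list[FeedEntry]:
    """Keep only entries whose uid hasn't been posted yet, preserving feed order."""
    return [e for e in entries if e.uid not in already_posted]
```

Keying on the entry's `<id>` or `<guid>` (falling back to its link) rather than its title means an edited headline won't cause the same post to be syndicated twice.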

Well, as of today, POSSE Party officially posts to just about everything I could want it to. This week, I locked myself in a tiny Tokyo apartment and didn't let myself out until I'd finished building support for Instagram, Facebook Pages, LinkedIn, and YouTube. That brings the total number of platforms it supports up to 8. I've updated this site's POSSE Pulse accordingly.

I'm excited and relieved to have realized the vision of what I set out to build. I'll be discussing what's next… soon-ish. Probably.

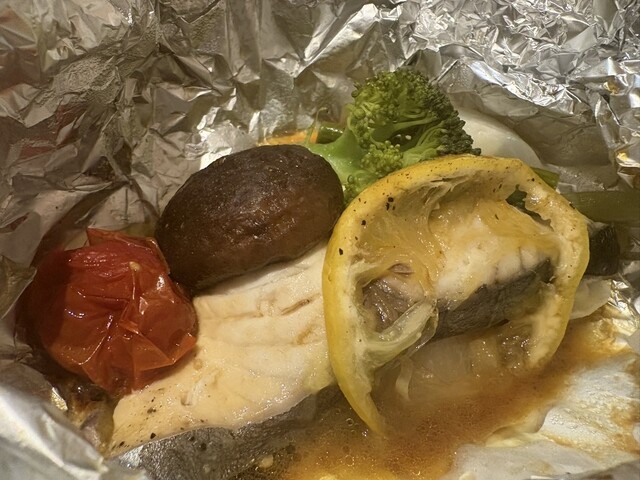

Tabelogged: 魚屋あらまさ 川崎店

Tabelogged: 串かつ でんがな 川崎店

Third-party games selling like garbage at the launch of a Nintendo system? Who could have seen this coming? videogameschronicle.com/news/third-party-switch-2-game-sales-have-started-off-slow-with-one-publisher-selling-below-our-lowest-estimates/

Tabelogged: うさぎや 川崎店

Tabelogged: 洋食や 三代目 たいめいけん

28 Allergens Not Detected

Sure, this ice cream killed me, but think of all the allergens it didn't have!

Wow, Iran must be really pissed about Meta adding ads to WhatsApp if it's asking its entire population to delete it. theverge.com/politics/688875/iran-cutting-off-internet-israel-war

The vast majority of the discourse around the software industry and AI-based coding tools has fallen into one of these buckets:

- Executives want to lay everyone off!

- Nobody wants to hire juniors anymore!

- Product people are building (shitty) prototype apps without developers at all!

What isn't being covered is how many skilled developers are getting way more shit done, as Tom from GameTorch writes:

> If you have software engineering skills right now, you can take any really annoying problem that you know could be automated but is too painful to even start, you can type up a few paragraphs in your favorite human text editor to describe your problem in a well-defined way, and then paste that shit into Cursor with o3 MAX pulled up and it will one shot the automation script in about 3 minutes. This gives you superpowers.

I've written a lot of commentary on posts covering the angles enumerated above, and much less about just how many fucking to-dos and rainy-day list items I've managed to clear this year with the help of coding tools like ChatGPT, GitHub Copilot, and Cursor. Thanks to AI, stuff that had been clogging up my backlog for years got done quickly and correctly.

When I type `code org-name/repo`, I now have a script that finds the correct project directory, picks its preferred editor, and launches it with the appropriate environment loaded. When I type `git pump`, I finally have a script that'll pull, commit, and push in one shot (stopping for a Y/n confirmation only if the changes appear to be nontrivial). I've also finally implemented a comprehensive 3-2-1 backup strategy and scheduled it to run on our Macs each night, thanks to a script that rsyncs my and Becky's most important directories to a massive SSD RAID array, then to a local NAS, and finally to cloud storage.
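The `git pump` script itself isn't published, so here is only a hedged Python sketch of the idea, assuming "nontrivial" means more than a few files or a couple dozen changed lines according to `git diff --cached --shortstat`. The thresholds, the default commit message, and the function names are hypothetical; the git subcommands and flags used are standard.

```python
import re
import subprocess
import sys

# Hypothetical cutoffs: the post doesn't define "nontrivial".
MAX_TRIVIAL_FILES = 3
MAX_TRIVIAL_LINES = 20


def parse_shortstat(text: str) -> tuple[int, int]:
    """Turn `git diff --shortstat` output into (files_changed, lines_changed)."""
    def grab(pattern: str) -> int:
        match = re.search(pattern, text)
        return int(match.group(1)) if match else 0

    files = grab(r"(\d+) files? changed")
    lines = grab(r"(\d+) insertions?\(\+\)") + grab(r"(\d+) deletions?\(-\)")
    return files, lines


def is_nontrivial(files: int, lines: int) -> bool:
    return files > MAX_TRIVIAL_FILES or lines > MAX_TRIVIAL_LINES


def git(*args: str) -> str:
    """Run a git subcommand, raising CalledProcessError if it fails."""
    result = subprocess.run(["git", *args], check=True, capture_output=True, text=True)
    return result.stdout


def pump(message: str = "Update") -> None:
    git("pull", "--rebase", "--autostash")  # stash local edits, pull, reapply them
    git("add", "--all")
    files, lines = parse_shortstat(git("diff", "--cached", "--shortstat"))
    if files:
        if is_nontrivial(files, lines):
            answer = input(f"{files} files, {lines} lines changed. Commit and push? [Y/n] ")
            if answer.strip().lower() not in ("", "y", "yes"):
                sys.exit("Aborted; changes are staged but not committed.")
        git("commit", "--message", message)
    git("push")
```

Git runs any executable named `git-<name>` on your `PATH` as `git <name>`, so saving this as an executable `git-pump` with a `#!/usr/bin/env python3` first line and a final `pump()` call would make `git pump` work.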

Each of these was something I'd meant to get around to for years but never did, because it was never the most important thing to do. But I could set an agent to work on each of them while I checked my mail or whatever, then spend only 20 or 30 minutes of my own time putting on the finishing touches. All without having to remember arcane shell scripting incantations (since that's outside my wheelhouse).
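The nightly 3-2-1 backup could be sketched in Python like this, assuming each destination is something `rsync` can write to (a mounted volume path or an SSH-style `host:path`) and that every target is fed from the same source directories. The paths, hostnames, `Target` fields, and helper names are all hypothetical, the post doesn't say which cloud service is involved, and the nightly scheduling isn't shown. The `satisfies_3_2_1` check encodes the usual rule: at least three copies of the data, on at least two kinds of media, with at least one copy offsite.

```python
import subprocess
from dataclasses import dataclass


@dataclass(frozen=True)
class Target:
    path: str      # rsync destination: a local path or "host:path"
    media: str     # kind of storage, e.g. "ssd-raid", "nas-hdd", "cloud"
    offsite: bool  # True if the copy lives outside the house


# Hypothetical sources and targets; the post doesn't list real paths.
SOURCES = ["/Users/me/Documents", "/Users/becky/Documents"]
TARGETS = [
    Target("/Volumes/RAID/backup/", "ssd-raid", offsite=False),
    Target("nas.local:/volume1/backup/", "nas-hdd", offsite=False),
    Target("backup@offsite.example.com:backup/", "cloud", offsite=True),
]


def satisfies_3_2_1(targets: list[Target], original_media: str) -> bool:
    """3 copies (counting the original), 2 media types, 1 offsite copy."""
    copies = 1 + len(targets)
    media = {original_media} | {t.media for t in targets}
    return copies >= 3 and len(media) >= 2 and any(t.offsite for t in targets)


def rsync_command(sources: list[str], target: Target) -> list[str]:
    # --archive keeps permissions and timestamps; --relative recreates the full
    # source path under the target so both users' "Documents" don't collide.
    # No --delete, so files removed locally survive in the backups.
    return ["rsync", "--archive", "--relative", *sources, target.path]


def run_backup(sources: list[str], targets: list[Target], dry_run: bool = False) -> None:
    for target in targets:  # one destination at a time, stopping at the first failure
        cmd = rsync_command(sources, target)
        if dry_run:
            cmd.insert(1, "--dry-run")
        subprocess.run(cmd, check=True)
```

Running `satisfies_3_2_1(TARGETS, "internal-ssd")` before scheduling anything is a cheap guard against a plan that has quietly lost its offsite copy.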

For now, I only really feel so supercharged when it comes to one-off scripts and the leaf nodes of my applications, but even if that's all AI tools are ever good for, that's still a fucking lot of stuff. Especially as a guy who used his one chance to give a RailsConf keynote to exhort developers to maximize the number of leaf nodes in their applications.