Why agents are bad pair programmers

LLM agents make bad pairs because they code faster than humans think.

I'll admit, I've had a lot of fun using GitHub Copilot's agent mode in VS Code this month. It's invigorating to watch it effortlessly write a working method on the first try. It's a relief when the agent unblocks me by reaching for a framework API I didn't even know existed. It's motivating to pair with someone even more tirelessly committed to my goal than I am.

In fact, pairing with top LLMs evokes many memories of pairing with top human programmers.

The worst memories.

Memories of my pair grabbing the keyboard and—in total and unhelpful silence—hammering out code faster than I could ever hope to read it. Memories of slowly, inevitably becoming disengaged after expending all my mental energy in a futile attempt to keep up. Memories of my pair hitting a roadblock and finally looking to me for help, only to catch me off guard and without a clue as to what had been going on in the preceding minutes, hours, or days. Memories of gradually realizing my pair had been building the wrong thing all along and then suddenly realizing the task now fell to me to remediate a boatload of incidental complexity in order to hit a deadline.

So yes, pairing with an AI agent can be uncannily similar to pairing with an expert programmer.

The path forward

What should we do instead? Two things:

- The same thing I did with human pair programmers who wanted to take the ball and run with it: I let them have it. In a perfect world, pairing might lead to a better solution, but there's no point in forcing it when both parties aren't bought in. Instead, I'd break the work down into discrete sub-components for my colleague to build independently. I would then review those pieces as pull requests. Translating that advice to LLM-based tools: give up on editor-based agentic pairing in favor of asynchronous workflows like GitHub's new Coding Agent, whose work you can also review via pull request.

- Continue to practice pair-programming with your editor, but throttle down from the semi-autonomous "Agent" mode to the turn-based "Edit" or "Ask" modes. You'll go slower, and that's the point. Also, just like pairing with humans, try to establish a rigorously consistent workflow as opposed to only reaching for AI to troubleshoot. I've found that ping-pong pairing with an AI in Edit mode (where the LLM can propose individual edits but you must manually accept them) strikes the best balance between accelerated productivity and continuous quality control.

Give people a few more months with agents and I think (hope) others will arrive at similar conclusions about their suitability as pair programmers. My advice to the AI tool-makers would be to introduce features to make pairing with an AI agent more qualitatively similar to pairing with a human. Agentic pair programmers are not inherently bad, but their lightning-fast speed has the unintended consequence of undercutting any opportunity for collaborating with us mere mortals. If an agent were designed to type at a slower pace, pause and discuss periodically, and frankly expect more of us as equal partners, that could make for a hell of a product offering.

Just imagining it now, any of these features would make agent-based pairing much more effective:

- Let users set how many lines-per-minute of code—or words-per-minute of prose—the agent outputs

- Allow users to pause the agent to ask a clarifying question or push back on its direction without derailing the entire activity or train of thought

- Expand beyond the chat metaphor by adding UI primitives that mirror the work to be done. Enable users to pin the current working session to a particular GitHub issue. Integrate a built-in to-do list to tick off before the feature is complete. That sort of thing.

- Design agents to act with less self-confidence and more self-doubt. They should frequently stop to converse: validate why we're building this, solicit advice on the best approach, and express concern when we're going in the wrong direction.

- Introduce advanced voice chat to better emulate human-to-human pairing, which would allow the user both to keep their eyes on the code (instead of darting back and forth between an editor and a chat sidebar) and to light up the parts of the brain that find mouth-words more engaging than text
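To make the first idea on that list a bit more concrete, here's a minimal sketch of what a lines-per-minute cap could look like. This is purely illustrative: `throttle_lines` and its parameters are hypothetical names of my own, not part of Copilot or any other tool, and it assumes the agent's output arrives as a plain sequence of lines.

```python
import time
from typing import Callable, Iterable, Iterator


def throttle_lines(
    lines: Iterable[str],
    lines_per_minute: float,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[str]:
    """Yield lines no faster than `lines_per_minute` (hypothetical helper)."""
    if lines_per_minute <= 0:
        raise ValueError("lines_per_minute must be positive")
    delay = 60.0 / lines_per_minute  # seconds to wait between lines
    for index, line in enumerate(lines):
        if index > 0:
            sleep(delay)  # wait before every line except the first
        yield line
```

Wrapping an agent's output in something like `throttle_lines(agent_output, lines_per_minute=30)` would surface one line every two seconds. Because it's a generator, the consumer can simply stop pulling lines to pause, which is roughly the "pause and discuss" behavior from the second item.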

Anyway, that's how I see it from where I'm sitting the morning of Friday, May 30th, 2025. Who knows where these tools will be in a week or month or year, but I'm fairly confident you could find worse advice on meeting this moment.

As always, if you have thoughts, e-mail 'em.

It's crane games all the way down

Finally, a crane game where the prize is another crane game.

With any luck, this take will be published in the future (my future, your present) automatically, thanks to the diligent efforts of the loyal employees of Searls LLC, as demonstrated in this example repo: github.com/searls/static-site-enhancement-concept

Forbidden Button

I have never wanted to press a button more than I want to press this button.

To any AI skeptics out there: if you take the time to use the latest tools and find them to be a net negative on your output, I completely respect that. I've been using LLMs for coding since late 2022, and until Copilot Agent landed in VS Code, I definitely thought the benefit was a wash at best. Now I can see a real boost in speed.

If you refuse to use these tools on ethical grounds or simply don't bother to keep up with them, I fear your employment prospects are likely to suffer in the short and medium term.

Like a Yakuza

Was hunkered down at a cafe in Yokohama's Chinatown earlier this week while waiting for Becky to finish a workout and looked up from my Steam Deck to notice I was simultaneously standing under the same gate in Like a Dragon: Yakuza's

Video of this episode is up on YouTube.

Coming to you LIVE from a third straight week of Japanese business hotels comes me, Justin, in his enduring quest to figure out how to exchange currency for real estate in the land of the rising fun.

[Programming note: apologies, as the audio quality at the beginning of the podcast suffered because I fucked up and left the hotel room's air conditioner on (I caught it and fixed it from the pun section onward)]

Had a few great e-mails to read through this week, but now I'm fresh out again! Before you listen, why not write in a review of this episode? E-mail podcast@searls.co and tell me about how amazing it will be before it lets you down like your best friend and/or workplace mentor and/or parent figure.


TIL you can (re)set a sleep timer with Siri. I was listening to a podcast while falling asleep, and my timer paused playback before I nodded off. I clicked the AirPod stem to resume playback and said:

"Siri set a sleep timer for 15 minutes"

This actually worked! I've been getting up and tapping my device like an idiot all these years.

All the Pretty Prefectures

2025-06-13 Update: Miyazaki & Kochi & Tokushima, ✅ & ✅ & ✅!

2025-06-07 Update: Staying overnight in Nagasaki, which allows me to tighten up my rules: now only overnight stays in a prefecture count!

2025-06-06 Update: Saga, ✅!

2025-06-02 Update: Fukui, ✅!

2025-06-01 Update: Ibaraki, ✅!

2025-05-31 Update: Tochigi, ✅!

2025-05-29 Update: Fukushima, ✅!

2025-05-28 Update: Yamagata, 🥩!

2025-05-25 Update: Gunma, ✅!

2025-05-24 Update: We can check Saitama off the list.

So far, I've visited 46 of Japan's 47 prefectures.

For a little while now, I've been joking with my Japanese friends that I'm closing in on having visited every single prefecture, and since I have a penchant for exaggerating, I got curious: how many have I actually been to?

Thankfully, because iPhone has been equipped with a GPS for so long, all my photos from our 2009 trip onward are location tagged, so I was pleased to find it was pretty easy to figure this out with Apple's Photos app.

Here are the ground rules:

- Pics or it didn't happen

- Have to stay the night in the prefecture (passing through in a car or train doesn't count)

- If I don't remember what I did or why I was there, it doesn't count

| 都道府県 | Prefecture | Visited? | 1st visit |
|---|---|---|---|
| 北海道 | Hokkaido | ❌ | |
| 青森県 | Aomori | ✅ | 2024 |
| 岩手県 | Iwate | ✅ | 2019 |
| 宮城県 | Miyagi | ✅ | 2019 |
| 秋田県 | Akita | ✅ | 2024 |
| 山形県 | Yamagata | ✅ | 2025 |
| 福島県 | Fukushima | ✅ | 2025 |
| 茨城県 | Ibaraki | ✅ | 2025 |
| 栃木県 | Tochigi | ✅ | 2025 |
| 群馬県 | Gunma | ✅ | 2025 |
| 埼玉県 | Saitama | ✅ | 2025 |
| 千葉県 | Chiba | ✅ | 2015 |
| 東京都 | Tokyo | ✅ | 2005 |
| 神奈川県 | Kanagawa | ✅ | 2005 |
| 新潟県 | Niigata | ✅ | 2024 |
| 富山県 | Toyama | ✅ | 2024 |
| 石川県 | Ishikawa | ✅ | 2023 |
| 福井県 | Fukui | ✅ | 2025 |
| 山梨県 | Yamanashi | ✅ | 2024 |
| 長野県 | Nagano | ✅ | 2009 |
| 岐阜県 | Gifu | ✅ | 2005 |
| 静岡県 | Shizuoka | ✅ | 2023 |
| 愛知県 | Aichi | ✅ | 2009 |
| 三重県 | Mie | ✅ | 2019 |
| 滋賀県 | Shiga | ✅ | 2005 |
| 京都府 | Kyoto | ✅ | 2005 |
| 大阪府 | Osaka | ✅ | 2005 |
| 兵庫県 | Hyogo | ✅ | 2005 |
| 奈良県 | Nara | ✅ | 2005 |
| 和歌山県 | Wakayama | ✅ | 2019 |
| 鳥取県 | Tottori | ✅ | 2024 |
| 島根県 | Shimane | ✅ | 2024 |
| 岡山県 | Okayama | ✅ | 2024 |
| 広島県 | Hiroshima | ✅ | 2012 |
| 山口県 | Yamaguchi | ✅ | 2019 |
| 徳島県 | Tokushima | ✅ | 2025 |
| 香川県 | Kagawa | ✅ | 2020 |
| 愛媛県 | Ehime | ✅ | 2024 |
| 高知県 | Kochi | ✅ | 2025 |
| 福岡県 | Fukuoka | ✅ | 2009 |
| 佐賀県 | Saga | ✅ | 2025 |
| 長崎県 | Nagasaki | ✅ | 2024 |
| 熊本県 | Kumamoto | ✅ | 2023 |
| 大分県 | Oita | ✅ | 2024 |
| 宮崎県 | Miyazaki | ✅ | 2025 |
| 鹿児島県 | Kagoshima | ✅ | 2023 |
| 沖縄県 | Okinawa | ✅ | 2024 |

Working through this list, I was surprised by how many places I'd visited for a day trip or passed through without so much as staying the night. (Apparently 11 years passed between my first Kobe trip and my first overnight stay?) This exercise also made it clear to me that having a mission like my 2024 Nihonkai Tour is a great way to string together multiple short visits while still retaining a strong impression of each place.

Anyway, now I've definitely got some ideas for where I ought to visit in 2025! 🌄

Soapy Snake

One thing I love about Japan is all the obscure Metal Gear Solid spin-offs that we never saw stateside.

If this is losing, I don't want to win

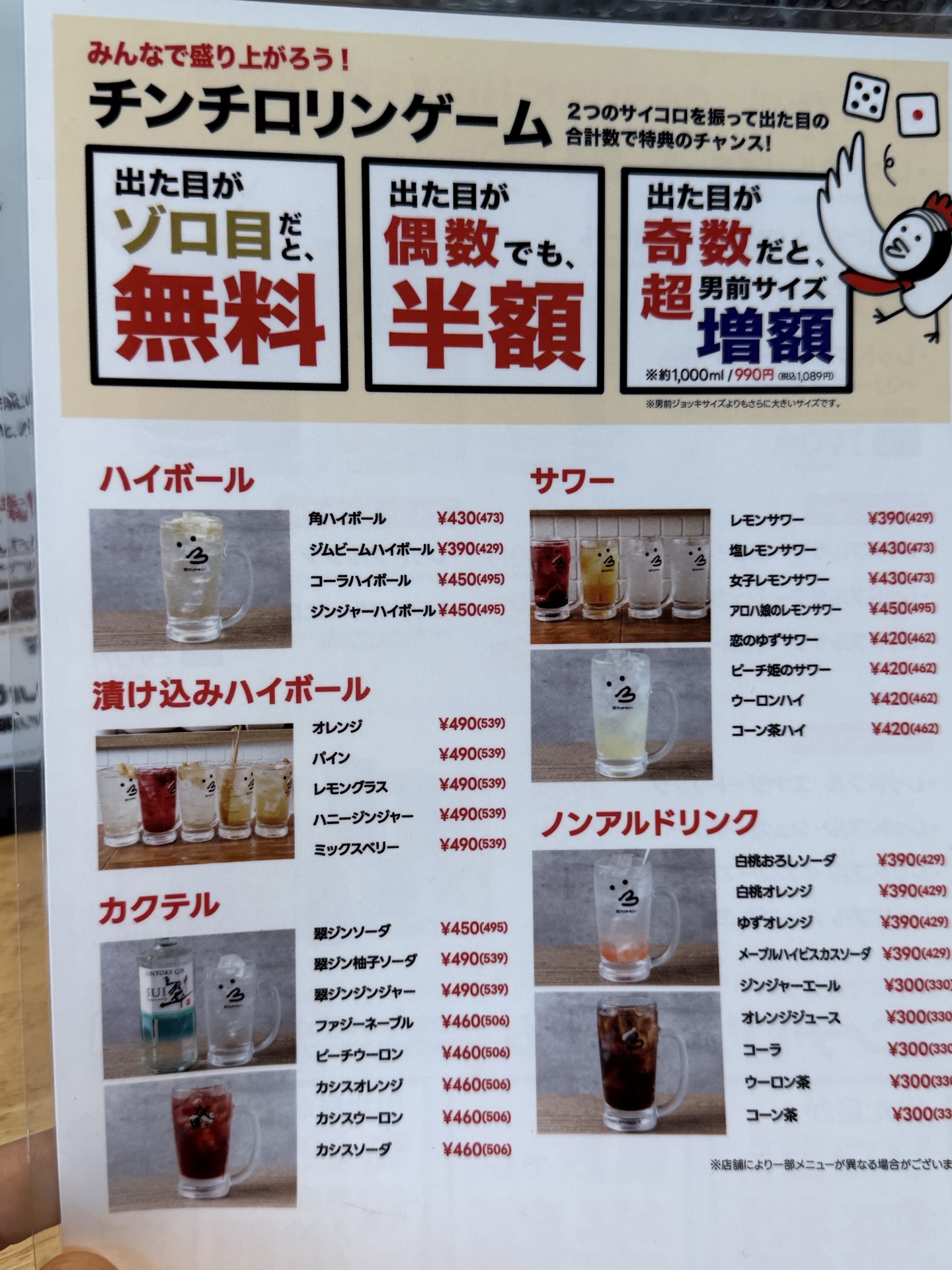

In Japan, it's common for bars to have a dice game with rules like:

- Snake eyes: free drink

- Even number: half off drink

- Odd number: double size, double price drink

I "lost" with both of these 1L whisky-fruit highballs. I sure don't feel like a loser, though.
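For the curious, here's a rough sketch of those house rules in Python. It rests on a few assumptions of mine that the rules above don't spell out: two six-sided dice, "even" and "odd" refer to the sum of the pair, and snake eyes takes precedence even though a total of 2 is also even. The function and constant names are made up for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product
from typing import Dict

FREE = "free drink"
HALF_OFF = "half off drink"
DOUBLE = "double size, double price drink"


def drink_deal(die_a: int, die_b: int) -> str:
    """Map a roll of two dice to a deal (assumed reading of the rules)."""
    if not (1 <= die_a <= 6 and 1 <= die_b <= 6):
        raise ValueError("each die must show a value from 1 to 6")
    if die_a == 1 and die_b == 1:
        return FREE  # snake eyes is checked first, since 2 is also even
    if (die_a + die_b) % 2 == 0:
        return HALF_OFF
    return DOUBLE


def deal_odds() -> Dict[str, Fraction]:
    """Tally each deal across all 36 equally likely rolls."""
    counts = Counter(drink_deal(a, b) for a, b in product(range(1, 7), repeat=2))
    return {deal: Fraction(n, 36) for deal, n in counts.items()}
```

Under those assumptions, `deal_odds()` works out to 1/36 for a free drink, 17/36 for half off, and 1/2 for the double, so "losing" is the single most likely outcome on any given roll.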